Logicmojo - Jan 23, 2023

Most users of the web are blissfully unaware of the sheer scale of the process responsible for bringing content across the Internet. There can be literally miles of cabling, and many network hops, between you and the server you’re accessing.

To make things worse, sites that become extremely popular must cope with steady increases in monthly traffic, which can slow down the experience for everyone involved. Some sites draw more visits in a month than comparable sites do in a year. Sites with high-volume traffic may find themselves facing frequent server upgrades just so that page speed, and in turn usability, doesn’t suffer for their loyal customers. Often, however, simple hardware upgrades aren’t enough to handle the vast traffic that some sites draw.

So how do you ensure that your site won’t burst into figurative flames as page visits skyrocket? Consider employing a technique called load balancing.

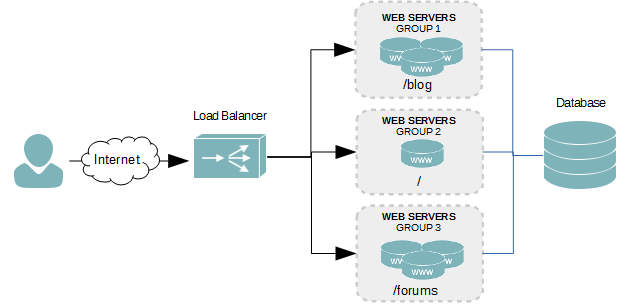

Load balancing refers to efficiently distributing incoming network traffic across a group of backend servers, also known as a server farm or server pool. It is a key component of highly available infrastructures, commonly used to improve the performance and reliability of websites, applications, databases and other services by distributing the workload across multiple servers.

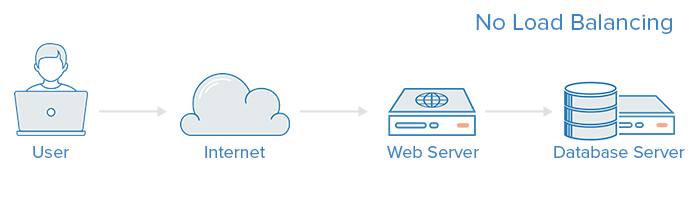

A web infrastructure with no load balancing might look something like the following:

In this example, the user connects directly to the single web server at the site’s domain. If this web server goes down, the user will no longer be able to access the website. In addition, if many users try to access the server simultaneously and it is unable to handle the load, they may experience slow load times or may be unable to connect at all.

So, what does load balancing actually do?

In more technical language, a load balancer acts as the “traffic cop” sitting in front of your servers. It routes client requests across all servers capable of fulfilling them, in a manner that maximizes speed and capacity utilization and ensures that no single server is overworked, which could degrade performance. If a server goes down, the load balancer redirects traffic to the remaining online servers. When a new server is added to the server group, the load balancer automatically starts sending requests to it.

Main points:

• Distributes client requests or network load efficiently across multiple servers

• Ensures high availability and reliability by sending requests only to servers that are online

• Provides the flexibility to add or subtract servers as demand dictates
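To make the three points above concrete, here is a minimal Python sketch of a hypothetical in-memory `ServerPool` (the class and method names are illustrative, not taken from any real load balancer). It only routes to servers marked online, and servers can be added or removed at any time; the simple rotation it uses stands in for whichever algorithm a real balancer would be configured with.

```python
from typing import Dict, List


class ServerPool:
    """Hypothetical pool illustrating distribution, availability and elasticity."""

    def __init__(self) -> None:
        # Maps server address -> whether it is currently online.
        self._online: Dict[str, bool] = {}
        self._next = 0

    def add_server(self, address: str) -> None:
        # A newly added server starts receiving traffic immediately.
        self._online[address] = True

    def remove_server(self, address: str) -> None:
        self._online.pop(address, None)

    def mark_down(self, address: str) -> None:
        if address in self._online:
            self._online[address] = False

    def mark_up(self, address: str) -> None:
        if address in self._online:
            self._online[address] = True

    def online_servers(self) -> List[str]:
        return [addr for addr, up in self._online.items() if up]

    def route(self) -> str:
        # Spread requests across online servers only (simple rotation).
        candidates = self.online_servers()
        if not candidates:
            raise RuntimeError("no online servers available")
        server = candidates[self._next % len(candidates)]
        self._next += 1
        return server
```

For example, after adding `"a"`, `"b"` and `"c"`, three calls to `route()` return each server once; after `mark_down("b")`, `"b"` stops appearing until `mark_up("b")` is called.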

Today, a load balancer is a necessity in every large, scalable distributed system. From video streaming platforms to e-commerce platforms, you will find load balancers in their system designs, for example at Facebook, Amazon, Airbnb, Netflix, YouTube and Google. Because load balancing is such a critical part of system design, you should understand how it works.

What kind of traffic can load balancers handle?

Load balancer administrators create forwarding rules for four main types of traffic:

• HTTP: Standard HTTP balancing directs requests based on standard HTTP mechanisms, and it is straightforward. The load balancer takes the request and uses the criteria set up by the network administrator to route it to a backend server. The header information is modified according to the needs of the application (for example, by adding an X-Forwarded-For header carrying the client’s IP address), so the backend server has the information it needs to process the request.

• HTTPS: HTTPS is used for encrypted traffic. It follows the same methods as HTTP except for how it deals with the encryption. Load balancers using SSL passthrough simply forward the encrypted traffic to the backend server and let decryption take place there. Load balancers using SSL termination decrypt the request and then pass the unencrypted request to the backend server.

• TCP: For applications that do not use HTTP or HTTPS, TCP traffic can also be balanced. For example, traffic to a database cluster could be spread across all of the servers.

• UDP: More recently, some load balancers have added support for balancing core internet protocols that use UDP, such as DNS and syslog.
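The sketch below models two ideas from this list under stated assumptions. A hypothetical `ForwardingRule` record (its field names are illustrative) distinguishes SSL termination from passthrough, and a hypothetical helper `add_forwarding_headers` rewrites HTTP headers. The `X-Forwarded-For` and `X-Forwarded-Proto` headers are a common convention for passing the original client address and protocol to the backend. Headers can only be rewritten when the load balancer can read the request, that is, for plain HTTP or for HTTPS with SSL termination.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ForwardingRule:
    """Hypothetical rule: traffic entering on one protocol/port is
    forwarded to backends on another protocol/port."""
    entry_protocol: str  # "http", "https", "tcp" or "udp"
    entry_port: int
    target_protocol: str
    target_port: int

    def terminates_tls(self) -> bool:
        # SSL termination: HTTPS arrives, plain HTTP goes to the backend.
        return self.entry_protocol == "https" and self.target_protocol == "http"

    def is_passthrough(self) -> bool:
        # SSL passthrough: encrypted traffic is forwarded; the backend decrypts it.
        return self.entry_protocol == "https" and self.target_protocol == "https"


def add_forwarding_headers(headers: Dict[str, str], client_ip: str,
                           entry_protocol: str) -> Dict[str, str]:
    """Return a copy of the headers with the client address appended,
    so the backend can still tell who made the request."""
    updated = dict(headers)
    previous = updated.get("X-Forwarded-For")
    # Append to an existing chain (e.g. added by an earlier proxy) or start one.
    updated["X-Forwarded-For"] = f"{previous}, {client_ip}" if previous else client_ip
    updated["X-Forwarded-Proto"] = entry_protocol
    return updated
```

Returning a copy rather than mutating the incoming headers keeps the original request untouched, which makes the rewrite easy to test in isolation.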

Load Balancing Algorithms

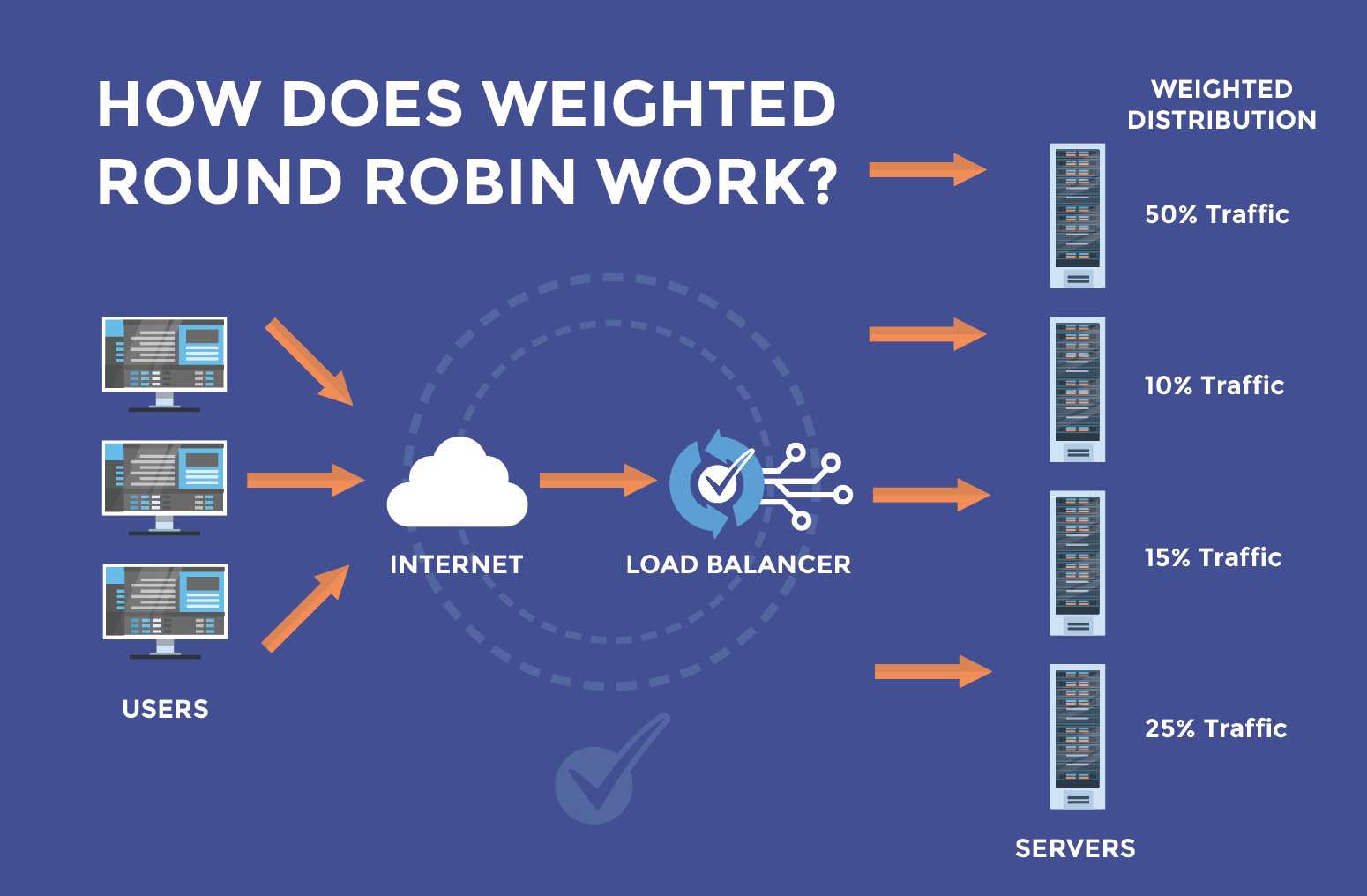

Different load balancing algorithms provide different benefits; the choice of load balancing method depends on your needs:

Round Robin: The load balancer sends a request to one server and then moves on to the next, returning to the first server after reaching the last and repeating the cycle. It’s a useful method if all your servers have similar processors and memory.
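A minimal round-robin sketch in Python, assuming a fixed list of equally capable servers (`RoundRobinBalancer` and the server names are hypothetical):

```python
from typing import List, Sequence


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation: first, second, ..., last, first again."""

    def __init__(self, servers: Sequence[str]) -> None:
        if not servers:
            raise ValueError("need at least one server")
        self._servers: List[str] = list(servers)
        self._index = 0

    def next_server(self) -> str:
        server = self._servers[self._index]
        # Move on to the next server, wrapping back to the first after the last.
        self._index = (self._index + 1) % len(self._servers)
        return server


lb = RoundRobinBalancer(["app1", "app2", "app3"])
print([lb.next_server() for _ in range(5)])  # ['app1', 'app2', 'app3', 'app1', 'app2']
```

The balancer ignores how busy each server is, which is why this method works best when the servers are roughly equal in capacity.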

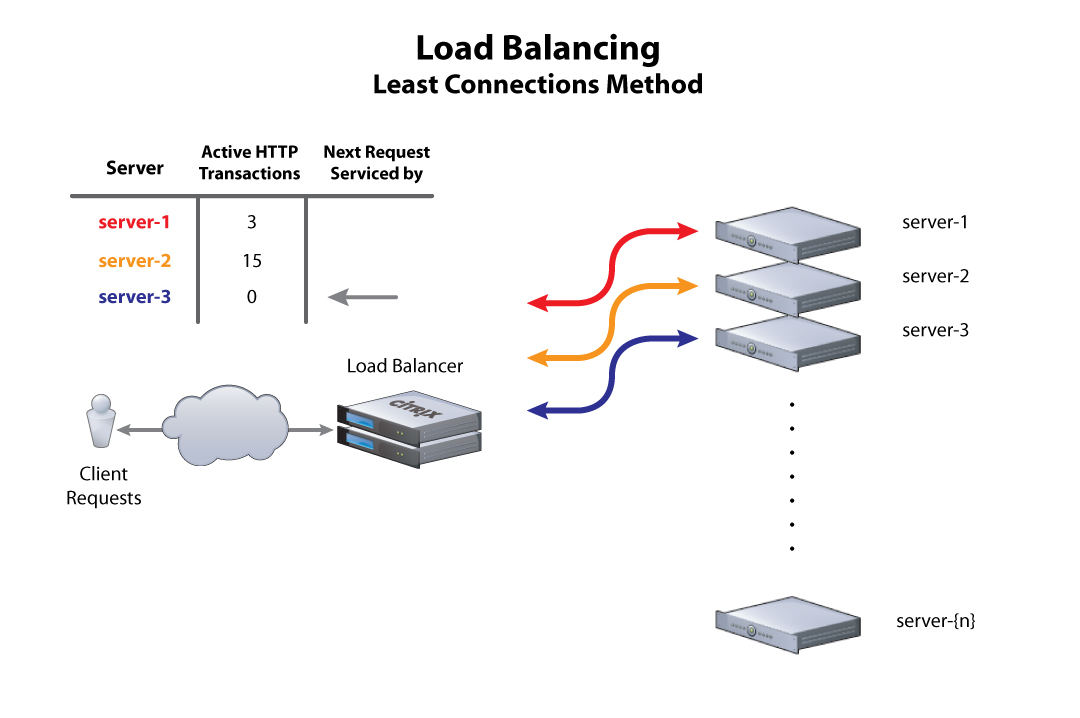

Least Connections: A new request is sent to the server with the fewest current connections to clients. The relative computing capacity of each server is factored into determining which one has the least connections, so a more powerful server can be given more connections than a weaker one.
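Here is a hedged sketch of least connections with the capacity weighting described above. It assumes each server is given a hypothetical relative `weight` and picks the server with the lowest ratio of active connections to weight; the caller must report finished connections through `release`. `LeastConnectionsBalancer` is an illustrative name, not a real library class.

```python
from typing import Dict


class LeastConnectionsBalancer:
    """Pick the server with the fewest active connections relative to its capacity.

    `weights` is a hypothetical relative-capacity figure per server: a weight of 2
    means roughly twice the capacity of a server with weight 1."""

    def __init__(self, weights: Dict[str, float]) -> None:
        if not weights:
            raise ValueError("need at least one server")
        if any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self._weights = dict(weights)
        self._active = {server: 0 for server in weights}

    def acquire(self) -> str:
        # Lowest connections-per-unit-of-capacity wins; ties go to the server listed first.
        server = min(self._active, key=lambda s: self._active[s] / self._weights[s])
        self._active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when a client connection to `server` closes.
        if self._active.get(server, 0) > 0:
            self._active[server] -= 1
```

With weights `{"big": 2, "small": 1}`, the first three calls to `acquire()` return `"big"`, `"small"`, `"big"`, leaving the larger server with twice the connections of the smaller one.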

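IP Hash: The IP address of the client is hashed to determine which server receives the request, so requests from the same client keep going to the same server as long as the server pool does not change.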
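A minimal IP-hash sketch, assuming a static list of servers. It uses SHA-256 from Python’s standard library as a stable hash, because the built-in `hash()` of a string is randomized per process and would not give consistent results across restarts; the choice of hash function is an assumption, not a requirement of the technique, and `ip_hash` is a hypothetical name.

```python
import hashlib
from typing import Sequence


def ip_hash(client_ip: str, servers: Sequence[str]) -> str:
    """Map a client IP to a server; the same IP always lands on the same
    server as long as the server list does not change."""
    if not servers:
        raise ValueError("need at least one server")
    # Stable hash of the IP string, reduced to an index into the server list.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]
```

With a plain modulo like this, adding or removing a server changes the mapping for most clients; consistent hashing is a common way to reduce that reshuffling.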

Hardware vs. Software Load Balancing

Load balancers typically come in two flavours: hardware‑based and software‑based. Vendors of hardware‑based solutions load proprietary software onto the machine they provide, which often uses specialized processors. To cope with increasing traffic on your website, you have to buy more or bigger machines from the vendor. Software solutions generally run on commodity hardware, making them less expensive and more flexible. You can install the software on the hardware of your choice or in cloud environments like AWS EC2.

How does the load balancer choose the backend server?

Load balancers choose which server to forward a request to based on a combination of two factors. They will first ensure that any server they can choose is actually responding appropriately to requests and then use a pre-configured rule to select from among that healthy pool.

Health Checks: Load balancers should only forward traffic to “healthy” backend servers. To monitor the health of a backend server, health checks regularly attempt to connect to backend servers using the protocol and port defined by the forwarding rules to ensure that servers are listening. If a server fails a health check, and therefore is unable to serve requests, it is automatically removed from the pool, and traffic will not be forwarded to it until it responds to the health checks again.
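The health-check loop described above might be sketched as follows, assuming a plain TCP connect is enough to show that a server is listening (real health checks often also send an HTTP request and inspect the response). `tcp_health_check` and `refresh_pool` are hypothetical names; the check is passed in as a function so other probes can be swapped in.

```python
import socket
from typing import Callable, List, Tuple

Address = Tuple[str, int]


def tcp_health_check(address: Address, timeout: float = 1.0) -> bool:
    """Healthy if a TCP connection to (host, port) opens within `timeout` seconds."""
    try:
        with socket.create_connection(address, timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False


def refresh_pool(backends: List[Address],
                 check: Callable[[Address], bool] = tcp_health_check) -> List[Address]:
    """Run one round of health checks and return the servers that may receive traffic.

    A failing server is left out of this round; since every round re-checks all
    backends, it rejoins automatically once it passes again."""
    return [backend for backend in backends if check(backend)]
```

In practice a load balancer would call something like `refresh_pool` on a fixed interval and route only to the servers it returns.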

Load Balancing Algorithms: The load balancing algorithm that is used determines which of the healthy servers on the backend will be selected. A few of the commonly used algorithms are discussed above.
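Putting the two factors together, the selection step could look like this minimal sketch (function names are hypothetical): filter by health first, then hand the healthy servers to whichever algorithm is configured, here a simple stateful round robin.

```python
from typing import Callable, Sequence


def choose_backend(servers: Sequence[str],
                   is_healthy: Callable[[str], bool],
                   algorithm: Callable[[Sequence[str]], str]) -> str:
    """Two-step selection: keep only servers that pass health checks,
    then let the configured algorithm pick one of them."""
    healthy = [s for s in servers if is_healthy(s)]
    if not healthy:
        raise RuntimeError("no healthy backend servers")
    return algorithm(healthy)


def make_round_robin() -> Callable[[Sequence[str]], str]:
    """Return a stateful round-robin picker over whatever candidate list it is given."""
    counter = 0

    def pick(candidates: Sequence[str]) -> str:
        nonlocal counter
        server = candidates[counter % len(candidates)]
        counter += 1
        return server

    return pick
```

Keeping the health filter separate from the algorithm means any of the algorithms above can be plugged in without changing how unhealthy servers are excluded.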

In this article, we’ve given an overview of load balancer concepts and how they work in general.

Good luck, and happy learning!