Logicmojo - Updated Jan 2, 2023

Numerous concepts, techniques, protocols, and tools, including Messaging, AMQP, RabbitMQ, Event-sourcing, gRPC, CQRS, and many others, are introduced to us as we begin to explore microservices in 2023. Among all these terms, Apache Kafka is one that always grabs attention and makes us start studying it.

The issue is that people frequently talk about Kafka as though it were just another messaging system, because they don't grasp what it actually is. You can, in fact, use it that way, but it might be a waste of resources to use Kafka just for that.

A distributed system is a group of computers that appears to end users as a single computer. Such systems scale horizontally by adding machines, and they can keep serving very large volumes of requests and updates even when individual machines fail. Apache Kafka is one of the most popular distributed systems on the market right now.

What is Apache Kafka?

As per the technical definition: “Kafka is a Distributed Streaming Platform or a Distributed Commit Log”

Apache Kafka is an implementation of a software bus that uses stream processing. It is an open-source platform written in Java and Scala, originally developed at LinkedIn and now maintained by the Apache Software Foundation.

What exactly do those terms mean? Let's look at them one by one.

Streaming Platform

Kafka maintains data as a continuous stream of records that can be handled in a variety of ways.

Commit Log

When you push data to Kafka, it appends the records to a stream of records. This works much like appending lines to a log file, or like a write-ahead log (WAL) if you come from a database background. The stream can be replayed, or read, starting from any point.
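The commit-log idea can be shown with a minimal in-memory sketch. The `CommitLog` class is hypothetical and is not Kafka's storage engine or client API. It models only the behaviour described above: records are appended to the end, each record gets a sequential offset, and the log can be replayed from any offset without being consumed.

```python
from dataclasses import dataclass, field
from typing import Any, List, Tuple


@dataclass
class CommitLog:
    """Hypothetical append-only log: reads never modify or remove records."""
    records: List[Any] = field(default_factory=list)

    def append(self, record: Any) -> int:
        """Add a record to the end of the log and return its offset."""
        self.records.append(record)
        return len(self.records) - 1

    def read_from(self, offset: int) -> List[Tuple[int, Any]]:
        """Replay all records from `offset` onwards as (offset, record) pairs."""
        return [(i, self.records[i]) for i in range(offset, len(self.records))]


log = CommitLog()
for event in ["user_signed_up", "user_logged_in", "item_added_to_cart"]:
    log.append(event)

print(log.read_from(0))  # the full history
print(log.read_from(2))  # replay starting from offset 2
print(log.read_from(0))  # reading again returns the same records
```

Reading from the log consumes nothing, so the same records can be replayed any number of times.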

Benefits of Kafka

Here are a few advantages of Kafka:

• Reliability: Kafka is distributed, partitioned, replicated, and fault-tolerant.

• Scalability: Kafka's messaging system can be scaled out without downtime.

• Durability: Kafka uses a distributed commit log, so messages are persisted to disk as quickly as possible.

• Performance: Kafka offers high throughput for both publishing and subscribing, and its performance stays consistent even when many terabytes of messages are stored.

History of Apache Kafka

Before Kafka, LinkedIn had trouble ingesting massive amounts of website activity data with low latency into a lambda architecture that could handle real-time events. No existing solution addressed this problem, so LinkedIn created Apache Kafka in 2010.

Batch-processing technologies did exist, but they exposed their deployment details to downstream users, which made them poorly suited to real-time processing. Kafka was open-sourced in 2011 and soon became well known.

Messaging

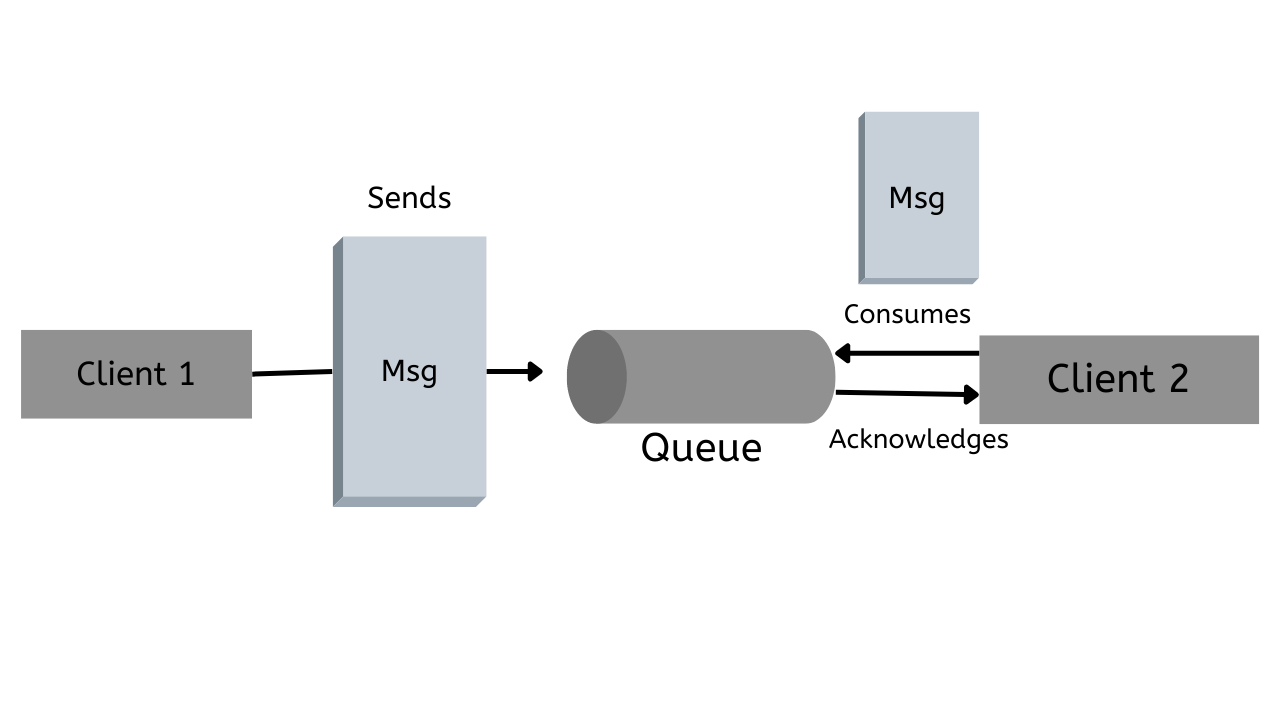

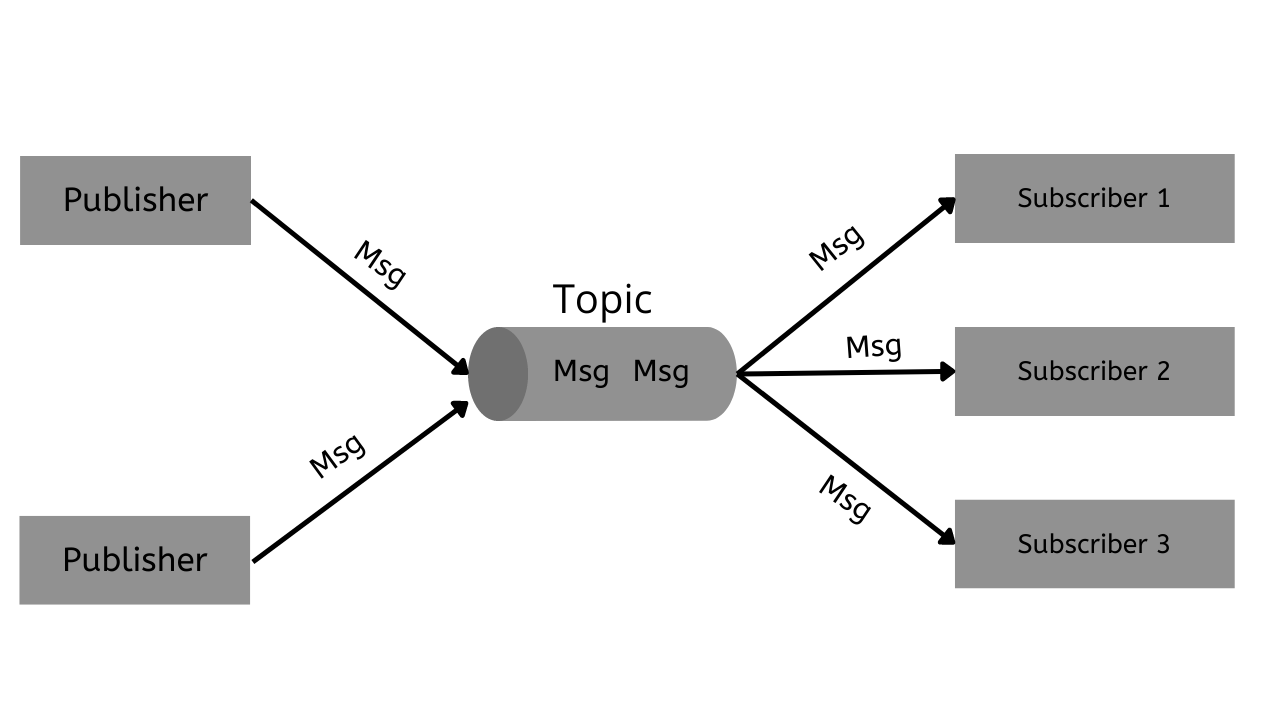

The act of conveying a message from one place to another is known as messaging. It features three important actors:

Producer: The application that creates messages and sends them to one or more queues.

Queue: A buffer data structure that receives messages from producers and delivers them to consumers on a First-In, First-Out (FIFO) basis. Once a message is delivered, it is permanently removed from the queue and cannot be retrieved again.

Consumer: An application that subscribes to one or more queues and receives messages as they are published.

Key Components of Kafka

Kafka is a distributed system made up of various essential parts, including:

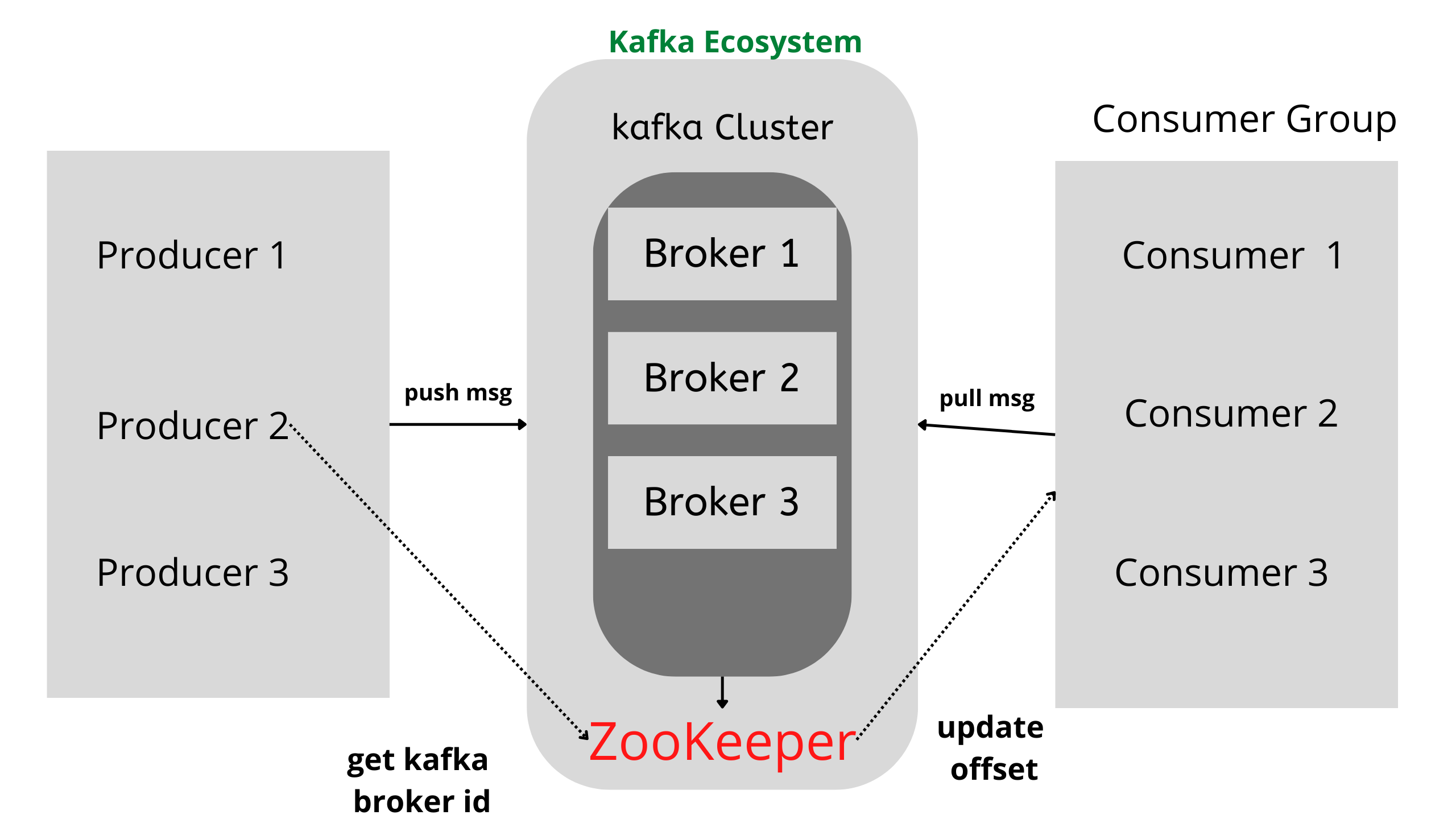

Broker nodes: Brokers handle most of the I/O operations and persistent data storage in the cluster. They host the cluster's topic partitions, which are stored as append-only log files. Partitioning spreads a topic across brokers for horizontal scalability. Each partition can also be replicated across several brokers for greater durability, and these copies are called replicas. A broker can be the leader for some replicas while acting as a follower for others. In addition, one broker is elected as the cluster controller, which oversees the internal administration of partition states, including which replica is the leader and which are followers for each partition.

ZooKeeper nodes: In the background, Kafka needs a mechanism for managing which broker acts as the cluster controller. If the controller drops out for any reason, a new controller is elected from the remaining brokers. ZooKeeper implements most of the actual mechanics of controller election, heartbeating, and related processes. Acting as a configuration store, ZooKeeper also keeps track of cluster metadata, leader-follower states, quotas, user data, ACLs, and other administrative details. ZooKeeper ensembles are normally run with an odd number of nodes. Its consensus protocol needs a majority (quorum) of nodes to agree, and an even-sized ensemble tolerates no more failures than the next smaller odd-sized one.

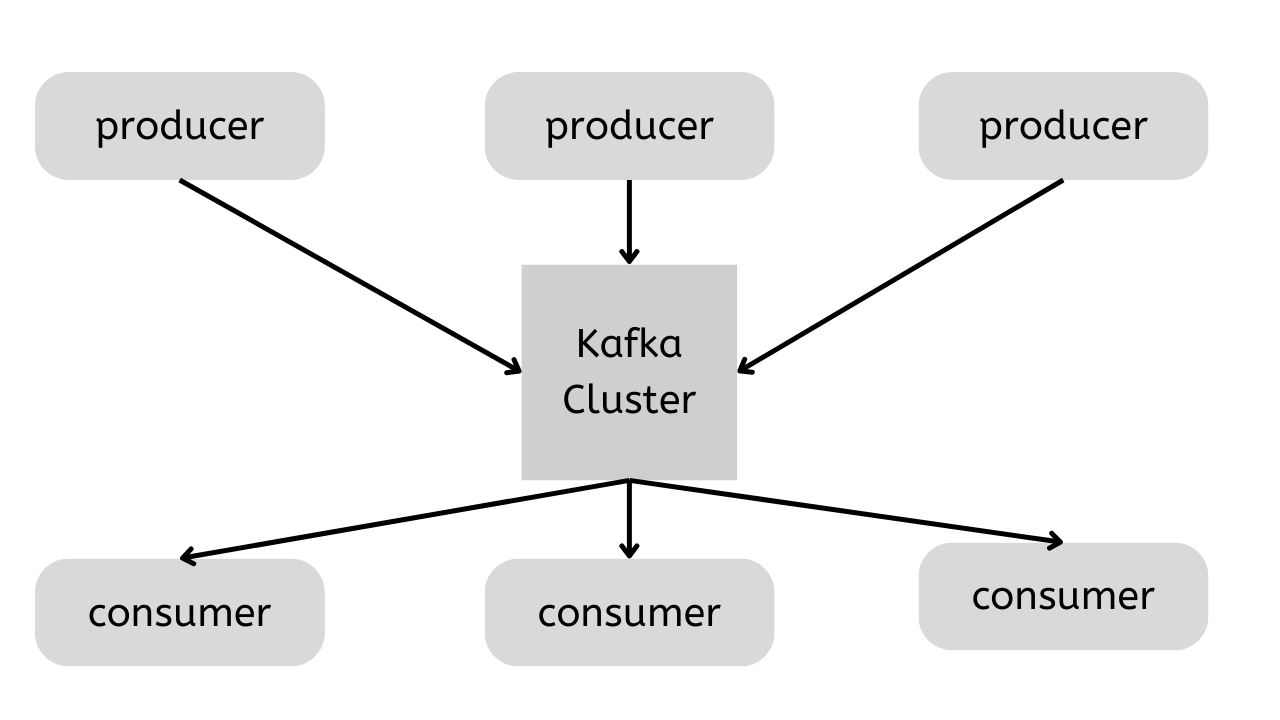

Producers: Client programs that add data to Kafka topics. Kafka is log-structured and a topic can be shared by many different consumers, so only producers change the contents of the underlying log files, and they do so only by appending. The broker nodes perform the actual I/O on behalf of the producer clients. Any number of producers can publish to a topic, and each producer can choose the partition in which each record is stored.

Consumers: Client programs that read from topics. Any number of consumers can read from the same topic, but how records are distributed among them depends on how the consumers are organised into groups.
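The preference for an odd number of ZooKeeper nodes comes from majority-quorum arithmetic. More than half of the ensemble must be reachable, so an ensemble of n nodes tolerates floor((n - 1) / 2) failed nodes. The short Python sketch below models only that arithmetic. It is not ZooKeeper code, and the helper names are hypothetical.

```python
def quorum_size(ensemble_size: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """How many nodes can fail while a majority is still reachable."""
    return ensemble_size - quorum_size(ensemble_size)


for n in range(1, 8):
    print(f"{n} nodes: quorum={quorum_size(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Going from 3 to 4 nodes raises the quorum from 2 to 3 but still tolerates only one failure. This is why odd sizes such as 3 or 5 are the usual choice.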

How Does It Work?

Some applications (producers) send records (messages) to a Kafka node (broker), while other applications (consumers) read and process them. The records are stored in a topic, and consumers that subscribe to the topic receive them.

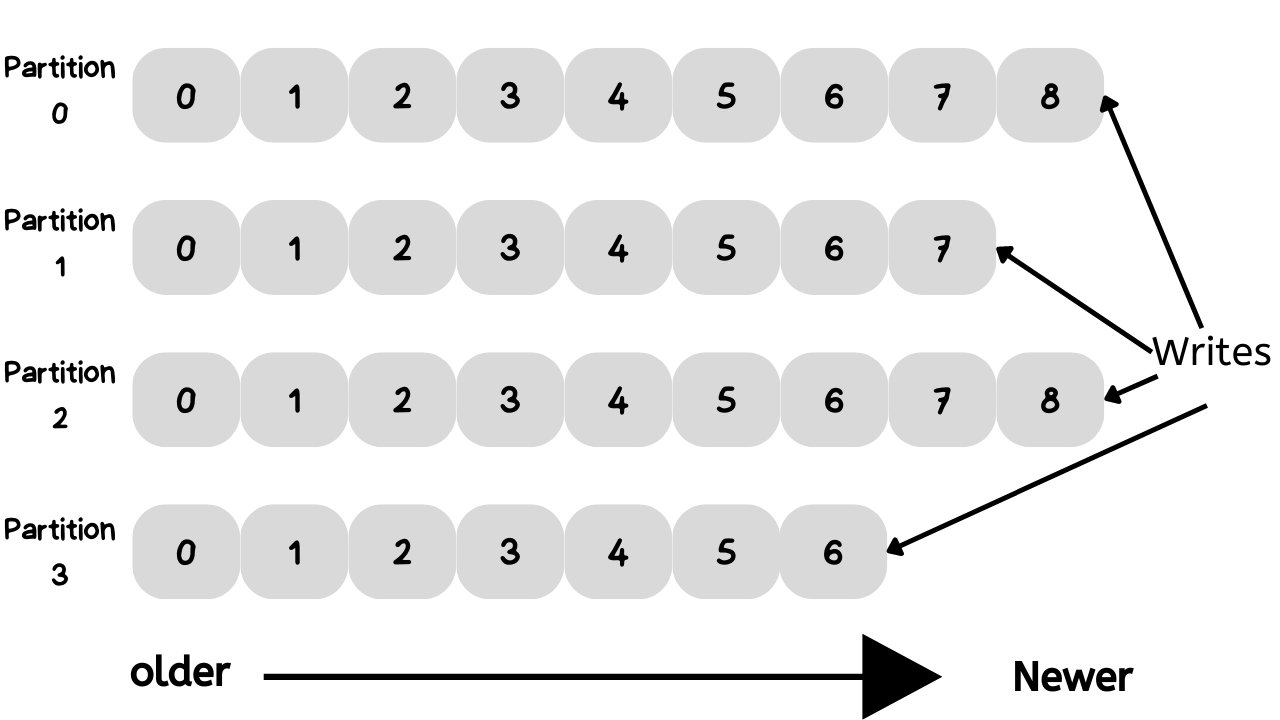

Topics tend to grow very large, so they are split into smaller parts called partitions for better performance and scalability. (For example, if you were storing user login requests, you could split them by the first character of each user's username.)
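The username example above can be sketched in Python. `partition_by_first_char` implements the hypothetical first-character rule from the text. `partition_by_key_hash` shows the more common approach of hashing the whole key, so that records with the same key always land in the same partition. Kafka's Java client hashes keys with murmur2 in its default partitioner. `zlib.crc32` is used here only as a stable stand-in, so the partition numbers will not match Kafka's. The partition count of 4 is also an assumption.

```python
import zlib
from collections import defaultdict
from typing import Callable, Dict, List

NUM_PARTITIONS = 4  # assumed partition count for the example topic


def partition_by_first_char(username: str) -> int:
    """Hypothetical rule from the text: route by the username's first character."""
    return ord(username[0].lower()) % NUM_PARTITIONS


def partition_by_key_hash(username: str) -> int:
    """Hash the whole key; crc32 stands in for the murmur2 hash Kafka's client uses."""
    return zlib.crc32(username.encode("utf-8")) % NUM_PARTITIONS


def route(usernames: List[str], rule: Callable[[str], int]) -> Dict[int, List[str]]:
    """Group login requests by the partition the rule picks for each username."""
    partitions: Dict[int, List[str]] = defaultdict(list)
    for name in usernames:
        partitions[rule(name)].append(name)
    return dict(partitions)


logins = ["alice", "bob", "anna", "carol", "ben", "alice"]
print(route(logins, partition_by_first_char))
print(route(logins, partition_by_key_hash))
```

With either rule, every request for a given user goes to the same partition, so that user's logins stay in order. Routing by first letter can overload some partitions, however, because initial letters are not evenly distributed.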

Kafka guarantees that messages within a partition are kept in the order in which they were received. Each message is identified by its offset, a sequence number that works like a conventional array index and increases with each new message appended to the partition. This ordering guarantee applies within a single partition, not across all the partitions of a topic.

Kafka follows the principle of a "dumb broker and smart consumer". Kafka does not track which records consumers have read, and it does not delete records once they are read. Instead, it keeps them for a configured period of time (such as one day) or until a size threshold is reached. Consumers poll Kafka for new messages and tell it which offset they want to read from. This lets them replay and reprocess events by moving their current offset as needed.
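The "dumb broker, smart consumer" split can be sketched in Python. The partition is a plain list that is never trimmed when it is read. The consumer keeps its own position and can move it back to replay events. `PartitionConsumer` and its methods are hypothetical names for illustration. A real Kafka consumer exposes similar ideas through its `poll()` and `seek()` methods, but with different signatures.

```python
from typing import List


class PartitionConsumer:
    """Hypothetical consumer that tracks its own offset into a shared partition."""

    def __init__(self, partition: List[str]) -> None:
        self.partition = partition  # the broker's data; never modified by reads
        self.position = 0           # next offset this consumer will read

    def poll(self, max_records: int = 10) -> List[str]:
        """Return up to max_records new records and advance the position."""
        batch = self.partition[self.position:self.position + max_records]
        self.position += len(batch)
        return batch

    def seek(self, offset: int) -> None:
        """Move the position, e.g. back to an earlier offset to reprocess events."""
        self.position = offset


partition = ["e0", "e1", "e2", "e3"]
consumer = PartitionConsumer(partition)
first_batch = consumer.poll(max_records=3)  # ["e0", "e1", "e2"]
second_batch = consumer.poll()              # ["e3"]
consumer.seek(1)                            # rewind to replay from offset 1
replayed = consumer.poll()                  # ["e1", "e2", "e3"]
```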

Note that consumers are organised into consumer groups, each containing one or more consumer processes. Within a group, each partition is assigned to exactly one consumer process, so that consumers in the same group do not read the same messages.
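The one-consumer-per-partition rule within a group can be illustrated with a simple round-robin assignment in Python. Kafka ships several assignment strategies, including range, round-robin, and sticky assignors. The `assign_partitions` helper below is a hypothetical simplification that shows only the invariant, not any of Kafka's actual assignors.

```python
from typing import Dict, List


def assign_partitions(partitions: List[int], consumers: List[str]) -> Dict[str, List[int]]:
    """Give each partition to exactly one consumer in the group, round-robin."""
    assignment: Dict[str, List[int]] = {c: [] for c in consumers}
    for i, partition in enumerate(sorted(partitions)):
        assignment[consumers[i % len(consumers)]].append(partition)
    return assignment


group_a = assign_partitions([0, 1, 2, 3, 4, 5], ["c1", "c2"])
group_b = assign_partitions([0, 1, 2, 3, 4, 5], ["c1", "c2", "c3", "c4", "c5", "c6", "c7"])
print(group_a)  # each partition appears under exactly one consumer
print(group_b)  # with more consumers than partitions, one consumer sits idle
```

A partition never belongs to two consumers of the same group, so adding consumers beyond the partition count adds no parallelism. A different group gets its own independent assignment and reads every message again.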

Why is Zookeeper necessary for Apache Kafka?

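In ZooKeeper-based deployments, ZooKeeper is one of Kafka's main dependencies and a necessary part of the installation process. (Newer Kafka releases can also run in KRaft mode, which removes the ZooKeeper dependency.)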

In distributed systems, ZooKeeper is used as a naming registry and for service synchronisation. With Apache Kafka, it is mostly used to keep tabs on the health of the cluster's nodes and to keep the list of Kafka topics and their metadata.

ZooKeeper serves five main purposes in Kafka: controller election, cluster membership, topic configuration, access control lists, and quotas.

Controller election: The controller is the broker responsible for maintaining the leader-follower relationships of all partitions, and ZooKeeper is used to elect it. When a broker shuts down, the controller makes sure that replicas on other brokers take over as leaders of the partitions that broker was leading.

Cluster membership: ZooKeeper maintains a list of all active brokers in the cluster.

Topic Configuration: ZooKeeper keeps track of all topic configuration information, such as the list of active topics, the number of partitions for each topic, the location of the replicas, topic configuration overrides, and the preferred leader node.

ACLs: ZooKeeper also stores the access control lists (ACLs) for all topics, which specify who or what is permitted to read from and write to each topic. Older Kafka consumers also used ZooKeeper to store the list of consumer groups, the members of each group, and the most recent offset each group had consumed from each partition.

Quotas: ZooKeeper stores the quotas that control how much data each client is permitted to read and write.
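The leader hand-over that happens under controller election can be sketched as a simplified, hypothetical Python model. Each partition records its leader and its in-sync replicas (ISR), meaning the brokers holding an up-to-date copy. When a broker fails, the controller removes it from every ISR and promotes the first surviving in-sync replica wherever the failed broker was leader. The real controller handles many more cases, and `PartitionState` and `handle_broker_failure` are illustrative names, not Kafka APIs.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class PartitionState:
    leader: Optional[int]
    in_sync_replicas: List[int]  # broker ids holding an up-to-date copy


def handle_broker_failure(states: Dict[str, PartitionState], failed_broker: int) -> None:
    """Drop the failed broker from every ISR and elect new leaders where needed."""
    for state in states.values():
        if failed_broker in state.in_sync_replicas:
            state.in_sync_replicas.remove(failed_broker)
        if state.leader == failed_broker:
            # Promote the first surviving in-sync replica, or mark the partition offline.
            state.leader = state.in_sync_replicas[0] if state.in_sync_replicas else None


cluster = {
    "orders-0": PartitionState(leader=1, in_sync_replicas=[1, 2, 3]),
    "orders-1": PartitionState(leader=2, in_sync_replicas=[2, 3, 1]),
    "orders-2": PartitionState(leader=1, in_sync_replicas=[1]),
}
handle_broker_failure(cluster, failed_broker=1)
for name, state in cluster.items():
    print(name, "leader:", state.leader, "isr:", state.in_sync_replicas)
```

Partition `orders-2` had no other in-sync copy, so in this model it is left without a leader rather than promoting a replica that might be missing data.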

When to Use Kafka

As discussed above, Kafka lets you store a large volume of messages while maintaining high performance and protecting against data loss.

This makes it well suited to be the central component of your system's architecture, acting as a centralised pipeline that connects various applications. Kafka can serve as the centrepiece of an event-driven architecture and enables true decoupling between applications.

Kafka makes it easy to decouple communication between (micro)services. Its Streams API makes it simpler to write business logic that enriches Kafka topic data for other services to consume. The possibilities are wide-ranging, so I strongly advise you to look into how businesses are using Kafka.

Messaging System in Kafka

A messaging system transfers data from one application to another, so applications can focus on the data itself without worrying about how it is delivered. Distributed messaging is built on a reliable message-queuing mechanism, with messages queued asynchronously between client applications and the messaging system. There are two common messaging patterns: point-to-point (p2p) and publish-subscribe (pub-sub). Pub-sub is the more widely used of the two.

Point to Point Messaging System: Messages are stored in a queue. One or more consumers can read from the queue, but each message is consumed by at most one consumer, and it is removed from the queue as soon as that consumer reads it.

Publish-Subscribe Messaging System: In this approach, which Kafka follows, messages are persisted in a topic. Consumers can subscribe to one or more topics and read every message in them. Message senders are called publishers, and message recipients are called subscribers.
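The difference between the two patterns comes down to who receives each message. In the hypothetical Python sketch below, point-to-point delivery hands each message to one consumer and removes it. Publish-subscribe keeps the messages and lets every subscriber read all of them from its own position. Neither class is a Kafka API.

```python
from collections import deque
from typing import Dict, List


class PointToPoint:
    """Each message goes to exactly one consumer and is then removed."""

    def __init__(self, messages: List[str]) -> None:
        self.queue = deque(messages)

    def receive(self) -> str:
        return self.queue.popleft()


class PublishSubscribe:
    """Messages persist; every subscriber reads all of them independently."""

    def __init__(self, messages: List[str]) -> None:
        self.topic = list(messages)
        self.positions: Dict[str, int] = {}

    def subscribe(self, name: str) -> None:
        self.positions[name] = 0

    def read_all(self, name: str) -> List[str]:
        """Return everything this subscriber has not seen yet."""
        start = self.positions[name]
        self.positions[name] = len(self.topic)
        return self.topic[start:]


p2p = PointToPoint(["m1", "m2"])
got_by_a = p2p.receive()  # consumer A gets m1
got_by_b = p2p.receive()  # consumer B gets m2; nobody gets m1 again

pubsub = PublishSubscribe(["m1", "m2"])
pubsub.subscribe("A")
pubsub.subscribe("B")
seen_by_a = pubsub.read_all("A")  # A sees m1 and m2
seen_by_b = pubsub.read_all("B")  # B also sees m1 and m2
```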

Write Data to Apache Kafka

Apache Kafka stores data in topics. Each topic has a unique name, and topics are stored on brokers.

Each topic is divided into partitions, so a topic is a structure made up of partitions. Data can be written to any partition of a topic, and the number of partitions is chosen separately for each topic.

Each partition applies the log idea: new data is always appended to the end and can never be inserted at the beginning or in the middle. Records in a partition are therefore in chronological order, from oldest to newest, and once written they cannot be edited. Data is not kept in a partition forever. Kafka offers two retention settings:

• Time-based retention (e.g. 7 days)

• Size-based retention (e.g. 100 GB)
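The two retention settings can be sketched in Python as a cleanup pass over a partition's records. This is a simplified, hypothetical model. Real Kafka deletes whole log segments rather than individual records. Its limits are also broker or topic configuration properties (for example `retention.ms` and `retention.bytes` at the topic level), not function arguments.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Record:
    offset: int
    timestamp_s: float  # when the record was appended, in seconds
    size_bytes: int


def apply_retention(records: List[Record], now_s: float,
                    max_age_s: Optional[float] = None,
                    max_total_bytes: Optional[int] = None) -> List[Record]:
    """Drop the oldest records that break the time or size limit; offsets never change."""
    kept = list(records)
    if max_age_s is not None:
        kept = [r for r in kept if now_s - r.timestamp_s <= max_age_s]
    if max_total_bytes is not None:
        total = sum(r.size_bytes for r in kept)
        while kept and total > max_total_bytes:
            total -= kept.pop(0).size_bytes  # oldest records are removed first
    return kept


DAY = 24 * 60 * 60
log = [Record(0, 0 * DAY, 40), Record(1, 3 * DAY, 40), Record(2, 8 * DAY, 40)]

by_time = apply_retention(log, now_s=9 * DAY, max_age_s=7 * DAY)   # 7-day retention
by_size = apply_retention(log, now_s=9 * DAY, max_total_bytes=50)  # 50-byte cap
print([r.offset for r in by_time], [r.offset for r in by_size])
```

In both cases the oldest data goes first, and the surviving records keep their original offsets.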

Use Cases

There are many ways to use Kafka. The following are some examples of use cases listed on the official Kafka website:

⮞ Real-time processing of financial transactions

⮞ Laying the groundwork for event-driven architectures, microservices, and data platforms

⮞ Kafka can be used throughout an enterprise as a log aggregation solution to gather logs from many systems and make them available in a common format to various consumers.

⮞ Recording consumer interactions and responding to them

⮞ Metrics: Kafka is frequently used to monitor operational data. Statistics from distributed applications are combined to create centralised feeds of operational data.

Apache Kafka Tutorial - Installation Steps

Java Installation

Java must be installed on the machine, which you can check with the command below. If it is not installed, download a JDK (the steps below use JDK 8u60).

$ java -version

Downloaded files are typically saved in your downloads folder. Go to that folder and extract the archive:

$ cd /go/to/download/path

$ tar -zxf jdk-8u60-linux-x64.tar.gz

Move the extracted Java directory to /opt/jdk so that Java is available to all users:

$ su
password: (type the root user's password)
$ mkdir /opt/jdk
$ mv jdk1.8.0_60 /opt/jdk/

Add the following lines to your ~/.bashrc file to set the JAVA_HOME and PATH variables:

export JAVA_HOME=/opt/jdk/jdk1.8.0_60
export PATH=$PATH:$JAVA_HOME/bin

Download Kafka

Download the latest Kafka release from the Apache Kafka website. The commands below use Kafka 0.9.0.0 built for Scala 2.11, so adjust the file names to match the version you downloaded.

Extract the archive with the commands below:

$ cd /opt
$ tar -zxf kafka_2.11-0.9.0.0.tgz
$ cd kafka_2.11-0.9.0.0

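The Kafka version used here needs a running ZooKeeper instance. Start ZooKeeper first with the script bundled in the Kafka directory, then start the Kafka server in a separate terminal:

$ bin/zookeeper-server-start.sh config/zookeeper.properties

$ bin/kafka-server-start.sh config/server.properties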

$ bin/kafka-server-start.sh config/server.properties
[2016-01-02 15:37:30,410] INFO KafkaConfig values:
request.timeout.ms = 30000
log.roll.hours = 168
inter.broker.protocol.version = 0.9.0.X
log.preallocate = false
security.inter.broker.protocol = PLAINTEXT
…………………………………………….
…………………………………………….

Good luck and happy learning!