Introduction

Artificial Intelligence (AI) is a technology that enables machines to simulate human intelligence and problem-solving capabilities. These machines are programmed to work, think, learn, and act in human-like ways. A neural network is a powerful AI technique that processes data in a way loosely modeled on the human brain: it arranges artificial neurons in layers, mirroring how biological neurons work together to reach a conclusion.

In simple terms, an artificial neural network consists of artificial neurons, which are interconnected nodes. Each neuron receives input, processes it, and produces an output that may serve as input to the next layer. With neural networks, machines can make intelligent decisions with minimal human intervention.

What is an Artificial Neural Network?

The concept of an artificial neural network is derived from the biological neural network of the human brain. Just as biological neurons are interconnected, the artificial neurons in a network are connected to one another. This structure is designed to process information for solving problems and recognizing patterns, similar to how the human brain works.

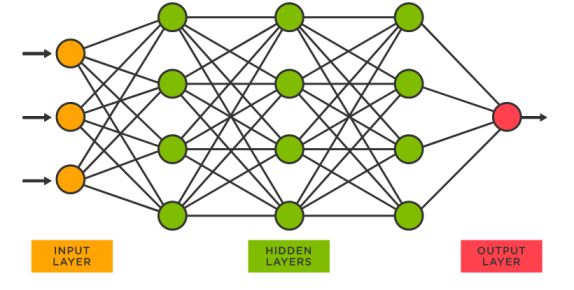

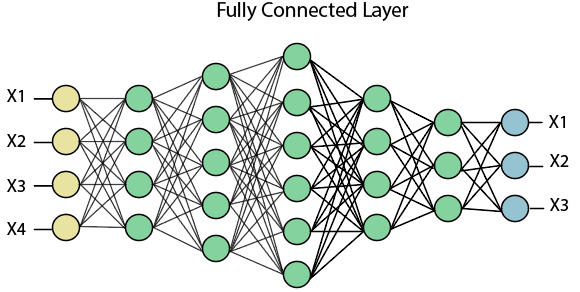

A basic neural network architecture consists of interconnected nodes that extract features from data and make predictions. The nodes are organized into an input layer, one or more hidden layers, and an output layer. A deep network may stack hundreds of hidden layers of interconnected nodes to process information in a human-like manner.

To understand an ANN, think of a team of doctors working together to identify a patient's disease. Each doctor receives test results, makes a decision based on them, and passes it to the next doctor. This process continues until they identify the disease the patient is suffering from. Here the output (the patient's disease) depends on the input (the test results). In the human brain, by contrast, the output for a given input keeps changing because of the brain's "learning" process.

Key Concepts in Neural Networks:

- Interconnected neurons that process information

- Layered architecture mimicking brain structure

- Ability to learn from data and improve over time

- Pattern recognition capabilities

- Can handle complex non-linear relationships

- Adaptive learning through experience

The Architecture of a Neural Network

A large number of artificial neurons, also known as units, are arranged in a hierarchy that consists of an input layer, one or more hidden layers, and an output layer. Deep networks may contain hundreds of hidden layers; these multiple layers allow feature extraction from large, unstructured, and unlabeled data sets.

Input Layer

Accepts input from the outside environment. This is where the neural network receives the data it needs to process.

Hidden Layer

Processes information received through multiple layers of neurons. This is where the complex computations happen.

Output Layer

Final results are conveyed through an output layer. This layer provides the answer or prediction.

Example:

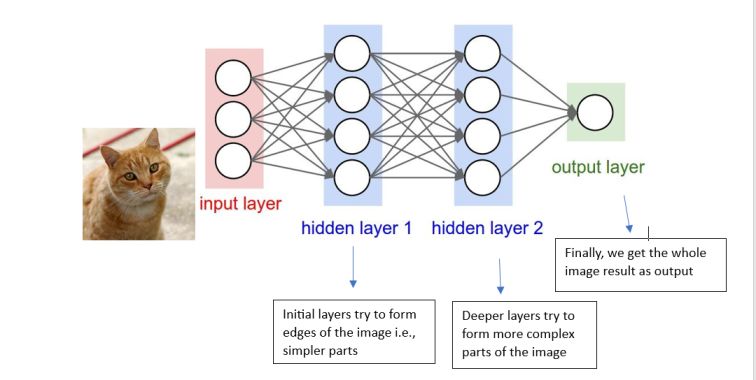

Let us understand the architecture of a neural network with an example of recognizing an animal in an image.

Input layer:

- Receives input consisting of an image of a cat or dog

Hidden Layer:

- Layer 1 (Detector):

  - Detects edges such as body line and tail shape

  - Detects fur textures

- Layer 2 (Classifier):

  - Classifies animal features such as pointy ears, mouth, whiskers, etc.

  - Classifies the animal as a cat or dog

Output Layer:

- Generates output based on the classification: "The animal in the image is a dog".

The artificial neural network keeps learning from data and improves its predictions over time, much as the human brain learns from experience and improves its decision-making.

The input layer is the first layer of the artificial neural network and receives information from external sources. It passes this information to the first hidden layer. There may be multiple hidden layers, where each neuron receives input from the previous layer, computes a weighted sum, and passes the result to the neurons in the next layer. The output of each hidden layer is the input to the next hidden layer or to the final output layer.
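The layer-to-layer flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: the helper `dense_layer`, the network size (3 inputs, 2 hidden neurons, 1 output neuron), and all weight and bias values are assumptions made up for this example, and the activation function is left out to keep the focus on the weighted sums.

```python
def dense_layer(inputs, weights, biases):
    """Return one value per neuron: the weighted sum of the inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]

# Hypothetical network: 3 inputs -> 2 hidden neurons -> 1 output neuron.
inputs = [1.0, 2.0, 3.0]
hidden_weights = [[0.5, -1.0, 0.5], [1.0, 0.0, -1.0]]  # one row per hidden neuron
hidden_biases = [0.0, 1.0]
output_weights = [[2.0, -1.0]]                          # one row per output neuron
output_biases = [0.5]

# The output of the hidden layer becomes the input of the output layer.
hidden = dense_layer(inputs, hidden_weights, hidden_biases)
output = dense_layer(hidden, output_weights, output_biases)

print(hidden)  # [0.0, -1.0]
print(output)  # [1.5]
```

Adding more hidden layers is just a matter of calling the same function again with the previous layer's output.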

Biological Neurons and Artificial Neural Networks

The concept of artificial neural networks is derived from the biological neurons found in the human brain. A biological neuron has dendrites to receive signals (impulses), a cell body (soma) to process them, and an axon to pass them to other neurons; synapses enable the transmission of signals between neurons. Similarly, an artificial neural network receives signals at the input layer, processes them in the hidden layers, and provides the output at the final layer using an activation function. Weights play the role of synapses, determining the strength of the connection between two artificial neurons.

| Biological Neuron | Artificial Neuron |
|---|---|
| Nervous System | Artificial Neural Network |
| Dendrite | Inputs |
| Cell body or soma | Nodes |
| Axon | Outputs |
| Synapses | Weights |
| Impulses | Data |

Types Of Artificial Neural Networks

Based on the flow of data, ANNs are categorized into different types. Some are listed below:

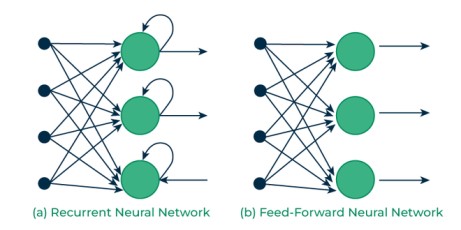

Feedforward Neural Network (FNN)

In this neural network, information flows in one direction, from the input nodes to the output nodes. Every node in the input layer is connected to every node in the first hidden layer, and every node in the last hidden layer is connected to every node in the output layer. These networks assist in the creation, recognition, and classification of patterns.

Recurrent Neural Network (RNN)

Recurrent neural networks process data sequentially and contain loops within the network: the output from the previous time step is fed back as an input to the next time step. Like a feedforward network, an RNN begins with forward propagation, but it also retains information about previously processed data so it can reuse it later. When the network makes an inaccurate prediction, it adjusts its weights through backpropagation and keeps trying to make the right forecast. Natural language processing and time series prediction typically employ this kind of ANN.
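The feedback loop can be illustrated with a single recurrent unit. The sketch below is an assumption-laden toy: the function names `rnn_step` and `run_rnn`, the `tanh` activation, and the weights `w_x`, `w_h`, and `b` are chosen for illustration only and do not come from any particular library.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step of a single recurrent unit: h = tanh(w_x*x + w_h*h_prev + b)."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the unit, carrying the hidden state forward."""
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)  # previous output is an input to this step
        states.append(h)
    return states

# Only the first input is non-zero, yet later states stay above zero:
# the unit "remembers" the earlier input through its hidden state.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```

Because each state depends on the one before it, the influence of the first input fades gradually instead of vanishing as soon as the input does.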

Convolutional Neural Networks (CNN)

Convolutional neural networks are among the most widely used models. A CNN is a variant of the multilayer perceptron that has one or more convolutional layers, which may be followed by pooling or fully connected layers. The convolutional layers extract features from the input data and pass the results as output to the next layer. CNNs have been employed in many cutting-edge AI applications, including facial recognition, text digitization, and natural language processing.

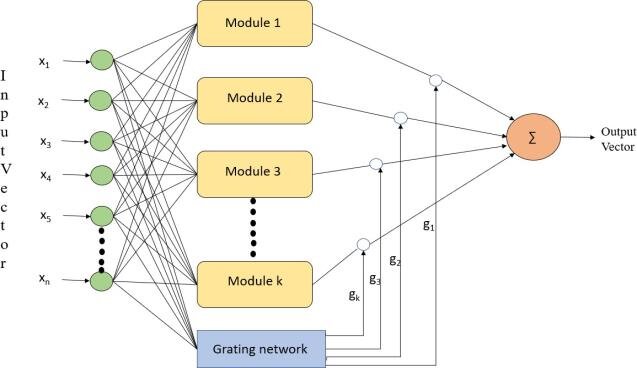
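To make the feature extraction step of a convolutional layer concrete, here is a small sketch in plain Python. The function `conv2d`, the 3 by 4 toy image, and the `vertical_edge` kernel are all assumptions for illustration; real CNNs learn their kernels during training and use optimized library routines. As in most deep learning libraries, the operation shown is technically a cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and
    return the feature map of weighted sums."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Toy image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to a dark-to-bright vertical edge.
vertical_edge = [[-1, 1], [-1, 1]]

feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the column where the brightness changes, which is the kind of edge feature the first layers of a CNN tend to pick up.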

Modular Neural Networks

Modular neural networks consist of multiple neural networks (modules) that operate independently of one another but are integrated within a single system to achieve a common goal. Throughout the calculation process, the modules do not communicate with or interfere with one another. As a result, complicated or extensive computations can be carried out more efficiently.

How Are Artificial Neural Networks Trained?

Neural networks are trained by processing large sets of labeled or unlabeled data to perform different tasks. What the network learns can then be used to process unknown inputs accurately. In supervised learning, data scientists provide labeled data sets with the right answers in advance, allowing the network to build knowledge from these data sets before it processes new inputs.

Training Process Example:

To recognize an image of a cat, a neural network is trained on numerous cat images. Once the network has learned from these images, it is fed different images and asked to classify whether they show cats or not. The output is verified against a human-provided label. If the result is far from the correct answer, backpropagation is used to adjust the internal parameters of the network to minimize the error.

Forward Propagation

In forward propagation, input data is passed through the neural network to predict the outcome.

Backward Propagation

In backward propagation, the error is calculated as the difference between the predicted value and the expected value. The error is then propagated backward through the network to update the weights and biases so that the error is minimized.

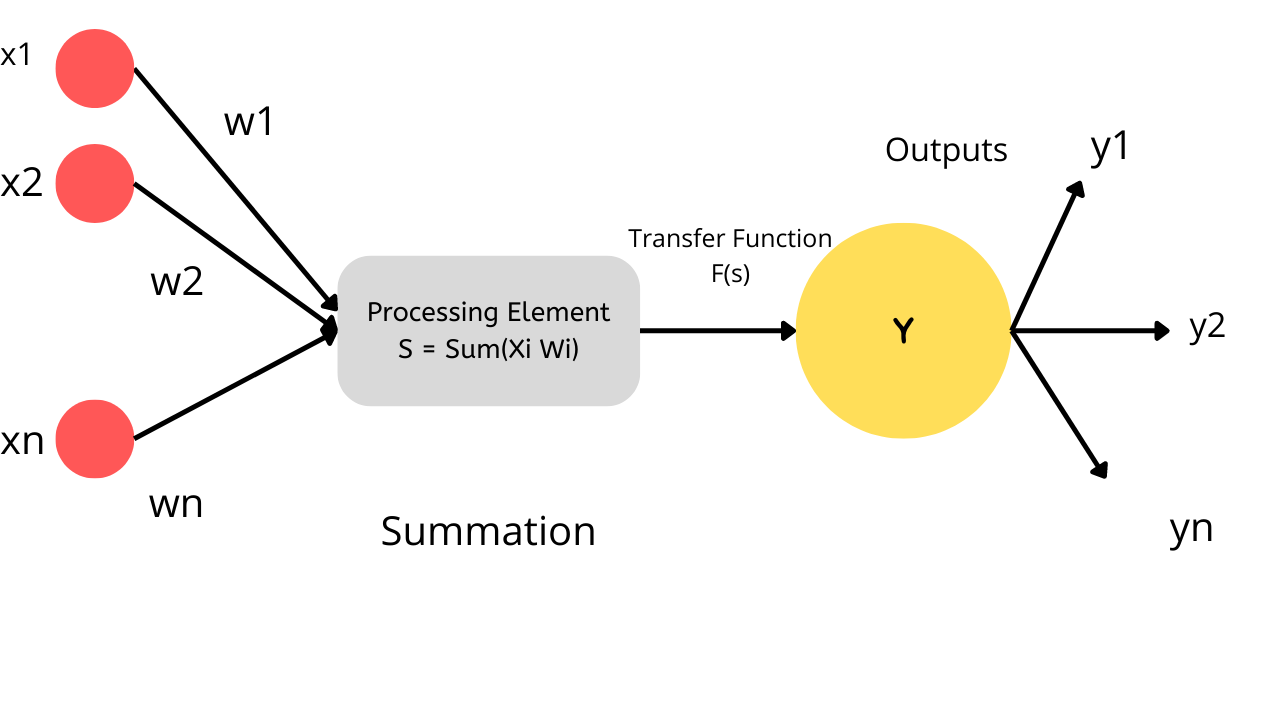
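The interplay of forward and backward propagation can be sketched with the smallest possible case, a single neuron with one weight and one bias. Everything here is an illustrative assumption: the function `train_neuron`, the squared-error loss, the learning rate, the number of epochs, and the toy data generated from y = 2x + 1.

```python
def train_neuron(samples, learning_rate=0.1, epochs=200):
    """Fit prediction = w * x + b to (x, target) pairs by gradient descent."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            prediction = w * x + b        # forward propagation
            error = prediction - target   # predicted value minus expected value
            # Backward propagation: gradients of 0.5 * error**2
            # with respect to w and b are error * x and error.
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b

# Toy data generated from y = 2x + 1 (an assumption for this example).
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
w, b = train_neuron(samples)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

In a multi-layer network the same idea applies, except that the error is passed backward layer by layer using the chain rule so that every weight and bias receives its own update.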

How Does A Neural Network Work?

Artificial neural networks are modeled on the human brain. They process input data received from external sources and produce an output based on that input. Each artificial neuron processes the input it receives and passes its output to the next layer. Each neuron has inputs, weights, a bias, and an activation function:

Input

Each neuron processes the input it receives and passes on an output, which becomes the input to the next layer or to the final output layer.

Weights

Weights are parameters, typically initialized randomly, that set the strength of the connections between neurons. The weights are adjusted during training to minimize the difference between the predicted value and the expected value.

Bias

The bias is an additional parameter added to the weighted sum of the inputs to better fit the data.

Activation function

A mathematical function applied to the weighted sum of the inputs plus the bias to produce the neuron's output.

The inputs (x) from the input layer are multiplied by the weights (w) allocated to them, and the products are added to form the weighted sum. A bias value is then added to this sum, which shifts the neuron's output and lets it be non-zero even when the weighted sum is zero. The activation function is applied to the result to model the complex relationship between input and output. Each node also has an associated threshold: a node whose output exceeds the threshold is activated and passes data to the next layer of the network. The weights inside the artificial neural network are modified after each iteration to minimize errors.

Mathematical Representation

The weighted sum of the input is calculated as: $Y = W_1 X_1 + W_2 X_2 + b$
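As a worked example of this formula, assume (purely for illustration) the inputs $X_1 = 2$ and $X_2 = 3$, the weights $W_1 = 0.5$ and $W_2 = -1$, and the bias $b = 1$:

$$
Y = W_1 X_1 + W_2 X_2 + b = (0.5)(2) + (-1)(3) + 1 = 1 - 3 + 1 = -1
$$

For a neuron with $n$ inputs the same pattern extends to $Y = \sum_{i=1}^{n} W_i X_i + b$. The value $Y$ is then passed through an activation function to produce the neuron's output.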

Some of the commonly used activation functions are:

- Binary step: The output is either 0 or 1

- Tanh: The output lies between -1 and 1

- ReLU: The output is zero for negative inputs and equal to the input otherwise

- Sigmoid: The output lies between 0 and 1

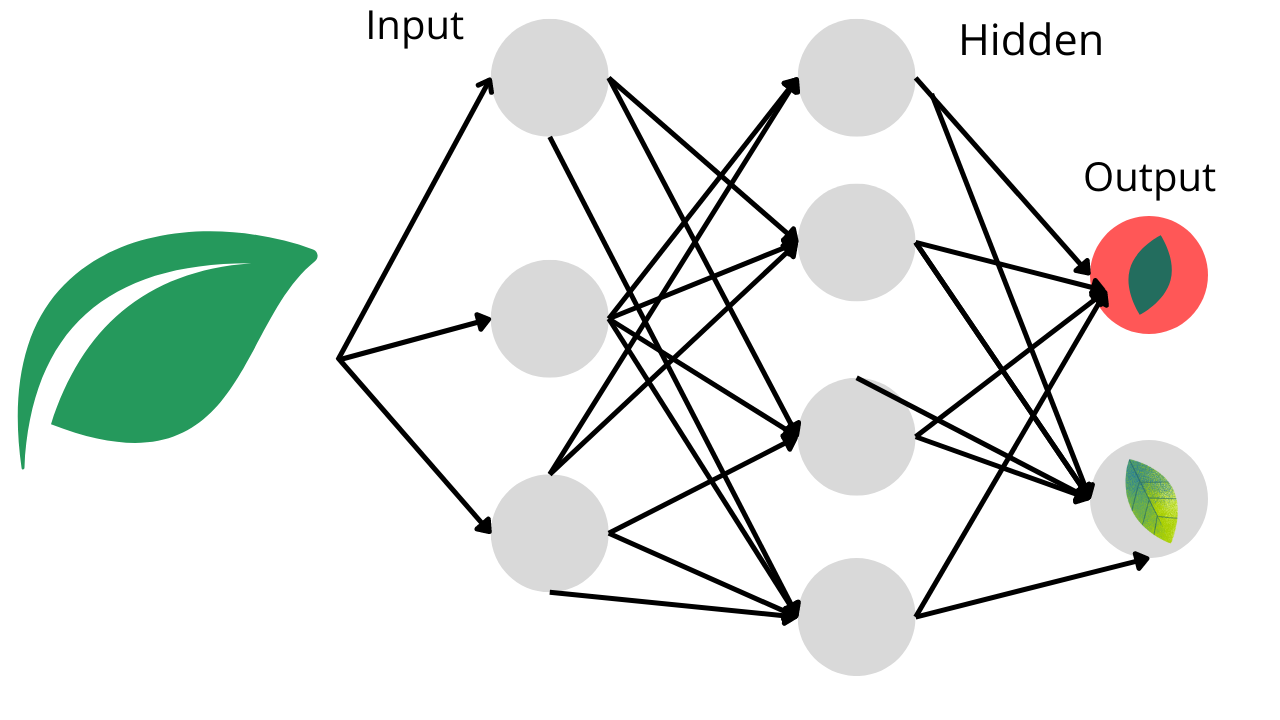
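The four activation functions listed above can be written directly with Python's standard `math` module. This is a minimal sketch; the choice of 0 as the cutoff for the binary step function is an assumption, since the threshold can be set elsewhere.

```python
import math

def binary_step(z):
    """Return 1 if z reaches the threshold (assumed to be 0), else 0."""
    return 1 if z >= 0 else 0

def tanh(z):
    """Squash z into the open interval (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Return z for positive inputs and 0 otherwise."""
    return max(0.0, z)

def sigmoid(z):
    """Squash z into the open interval (0, 1).
    Note: math.exp can overflow for very large negative z."""
    return 1.0 / (1.0 + math.exp(-z))

# Apply each function to the same weighted sum to compare their outputs.
z = -1.0
for fn in (binary_step, tanh, relu, sigmoid):
    print(fn.__name__, fn(z))
```

For the same weighted sum, each function produces a different output range, which is why the choice of activation depends on what the next layer or the final output needs.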

Explanation with Example

Imagine you are tasked with creating an Artificial Neural Network (ANN) that divides photographs into two categories:

Class A: Contains pictures of healthy leaves.

Class B: Contains pictures of diseased leaves.

So how do you build a neural network that separates diseased crops from healthy ones?

The first step is to prepare the input by converting it into a form the network can process. In our scenario, each leaf image is divided into pixels according to the image's dimensions.

For instance, suppose the image is 30 by 30 pixels, for 900 pixels overall. The input layer of the neural network receives these pixels as matrices of values.
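A rough sketch of this preparation step is shown below. The synthetic `image`, the helper `flatten_and_scale`, and the decision to scale intensities from 0..255 down to 0..1 are assumptions for illustration; a real pipeline would load actual leaf photographs with an imaging library.

```python
WIDTH, HEIGHT = 30, 30

# Hypothetical grayscale image: HEIGHT rows of WIDTH intensities in 0..255.
image = [[(row + col) % 256 for col in range(WIDTH)] for row in range(HEIGHT)]

def flatten_and_scale(pixels):
    """Turn a 2D grid of 0..255 intensities into a flat list of 0..1 values,
    one value per input neuron."""
    return [value / 255.0 for row in pixels for value in row]

inputs = flatten_and_scale(image)
print(len(inputs))  # 900
```

Each of the 900 values then feeds one node of the input layer.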

Just as our brains have neurons that help structure and connect thoughts, an artificial neural network (ANN) has perceptrons that accept inputs and process them by passing them from the input layer to the hidden layer and then to the output layer.

Each input is given a random starting weight as it is transmitted from the input layer to the hidden layer. The inputs are then multiplied by their weights, and the sum is supplied as input to the following hidden layer.

Each perceptron also has a bias value, which is added to the weighted sum of its inputs. Additionally, each perceptron applies an activation (or transformation) function that decides whether or not it will be activated.

An activated perceptron transmits data to the following layer. The data travels through the neural network in this way until it reaches the output layer (forward propagation).

The output layer determines whether the data belongs to class A or class B by deriving a probability.
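The final decision can be sketched as follows. This is a simplified illustration rather than the full leaf classifier: the function `classify_leaf`, the sigmoid output, the 0.5 threshold, the two-value "images", and the hand-picked weights are all assumptions, and a trained network would have learned its weights from labeled leaf photographs.

```python
import math

def classify_leaf(pixels, weights, bias, threshold=0.5):
    """Return (label, probability of disease) for one flattened image."""
    score = sum(w * x for w, x in zip(weights, pixels)) + bias
    probability = 1.0 / (1.0 + math.exp(-score))  # sigmoid maps score into (0, 1)
    label = "Class B" if probability >= threshold else "Class A"
    return label, probability

# Hypothetical two-pixel inputs and hand-picked parameters, for illustration only.
weights, bias = [2.0, -1.0], -1.0
label_1, p_1 = classify_leaf([1.0, 0.0], weights, bias)
label_2, p_2 = classify_leaf([0.0, 1.0], weights, bias)

print(label_1, round(p_1, 3))  # Class B 0.731
print(label_2, round(p_2, 3))  # Class A 0.119
```

A probability at or above the threshold is read as Class B (diseased), anything below as Class A (healthy).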

Applications Of Artificial Neural Networks

Artificial neural networks have numerous real-world applications across various domains and industries. Some are outlined below:

Natural Language Processing

NLP enables computers to understand and process human language meaningfully. Neural networks help machines understand and gather insights from user-provided text and documents. NLP is used in speech recognition, chatbots, and various human-computer interaction applications.

Computer Vision

Computer vision allows systems to extract meaningful information by analyzing and interpreting digital images, videos, and other visual data. For example, the Google search engine uses a convolutional neural network to search for images similar to those provided by users.

Speech Recognition

A neural network can understand human speech regardless of language, pitch, and tone, and convert it into written form. For example, virtual assistants like Alexa and Siri use artificial neural networks to understand spoken language by converting it into text.

Healthcare

Artificial neural networks play a significant role in healthcare, where they can help detect tumors by analyzing medical images and assist in reaching a proper diagnosis.

Finance

In finance, neural networks are used to analyze vast numbers of transactions for fraud detection and to predict stock prices using historical data and trends.

Transportation

Autonomous vehicles, route optimization, and traffic prediction rely on AI and neural networks to process data from sensors and cameras.

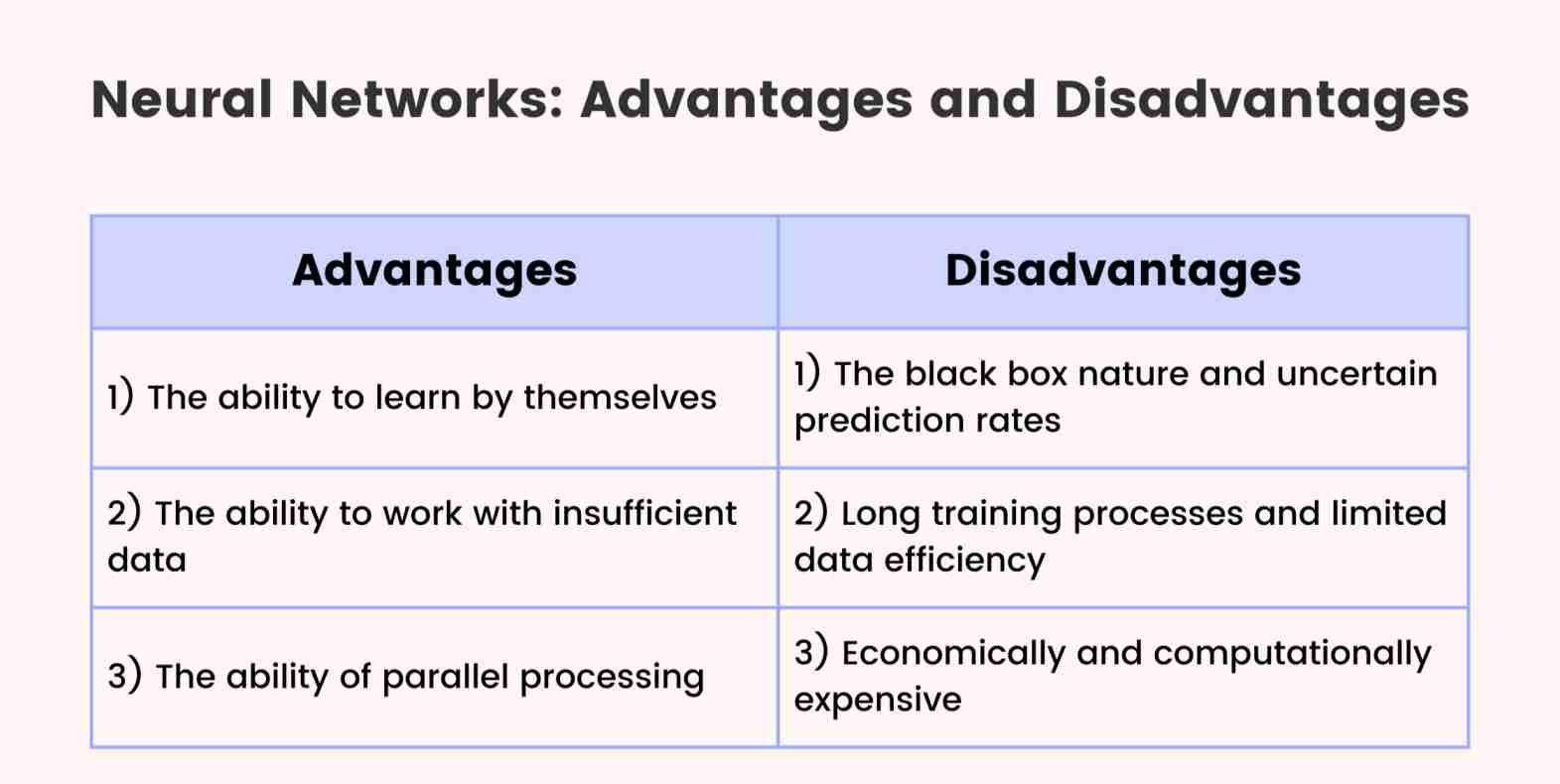

Advantages and Disadvantages Of Artificial Neural Networks (ANNs)

Advantages

- ANNs learn from the provided information and improve performance over time.

- ANNs can learn and extract relevant features from the provided data.

- With parallel processing capacity, the network can handle multiple tasks at a time.

- ANNs can simulate the real-world linkages between input and output by learning and modeling complicated, nonlinear relationships.

- With fault tolerance and robustness, ANNs can handle noisy data and function well even if one or more neurons are corrupted or missing.

- ANNs can forecast the results of unseen data due to their capacity to generalize from learned patterns.

- ANNs are highly versatile: they can be applied across various domains such as healthcare, markets, and finance.

Disadvantages

- Artificial neural network systems can perpetuate biases from the training dataset, leading to unfair outcomes and making existing biases worse.

- Training artificial neural networks requires significant time and computational power.

- ANNs require large amounts of data to ensure accuracy which might not always be available.

- Since the network relies on numerical data to function, all problems must first be converted into numerical values before being provided to the ANN.

- The black-box nature of ANNs makes it challenging to understand how they arrive at a specific solution.

Deep Learning Vs Neural Networks

The terms "deep learning" and "neural networks" are frequently used interchangeably in conversation, which can be misleading. It's important to remember that the "deep" in deep learning simply refers to the number of layers in a neural network. A neural network with more than three layers, including the input and output layers, is referred to as a "deep learning algorithm". A simple neural network is one that contains just two or three layers.

Key Differences:

Neural Networks

- Can have few layers (2-3)

- Used for simpler pattern recognition

- May require more feature engineering

Deep Learning

- Contains many layers (more than 3)

- Can learn more complex patterns

- Automatic feature extraction

Conclusion

AI is a versatile technology capable of understanding, detecting, and resolving problems. When combined with other technologies, artificial neural networks can automate many industrial processes and power a wide range of applications, from virtual assistants to autonomous cars. With continued research and development to overcome obstacles, AI and neural networks have the potential to help address some of humanity's most pressing challenges.

Good Luck & Happy Learning!!
