To work in data science, you must succeed in a demanding interview process. Many top companies run three or more interview rounds, so be ready to answer a wide range of machine learning interview questions.

During a machine learning interview, candidates are rigorously evaluated not only on their knowledge of fundamental concepts but also on their awareness of ML systems, real-world applications, and product-specific requirements.

If you are seeking a job in machine learning, it is essential to understand what is expected in the interview. We have therefore compiled the top machine learning interview questions to aid your preparation. We'll start with some fundamental questions before moving on to more complex ones.

Machine Learning Interview Questions and Answers

⌚ What is Machine learning?

Machine learning is a branch of artificial intelligence that automates data analysis, letting computers learn from experience and act accordingly without being explicitly programmed.

Robots, for instance, can be programmed to carry out tasks based on the information they gather through sensors. With machine learning, they learn from this data and get better over time.

⌚ What are the different types of machine learning algorithms?

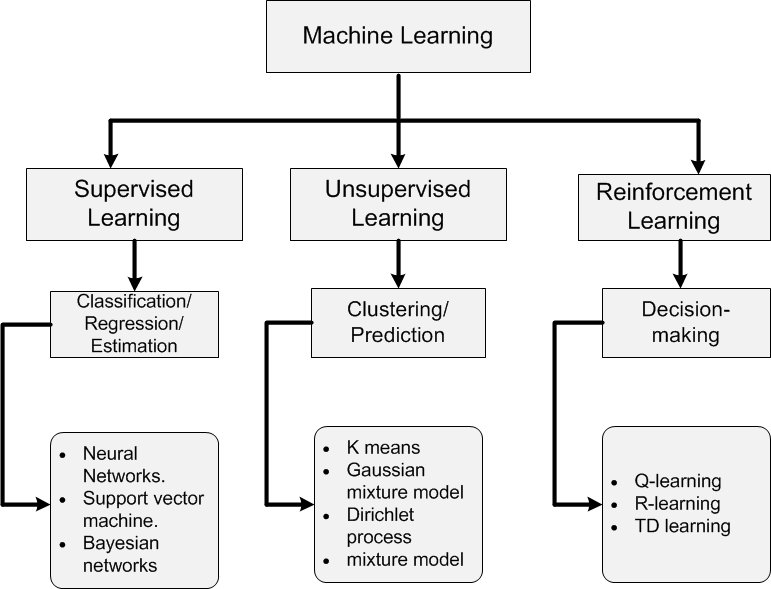

There are three main types of machine learning algorithms:

Supervised Learning

In supervised machine learning, a model makes predictions or decisions using historical, labelled data. Labelled data are datasets whose examples have been given tags or labels, which makes them more meaningful.

Unsupervised Learning

Unsupervised learning works without labelled data. The model finds trends, anomalies, and relationships in the supplied data on its own.

Reinforcement learning

In reinforcement learning, the model (called an agent) learns from the rewards it earns for its previous actions. Consider the environment in which an agent operates and a goal the agent is set to accomplish. Each time the agent acts in a way that moves it toward the goal, it receives positive reinforcement; if an action moves it away from the goal, it receives negative feedback.
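To make the reward-driven loop concrete, here is a minimal sketch of tabular Q-learning, which is one standard reinforcement learning algorithm (the text above does not name a specific one). The five-state corridor environment, the reward values, and the parameters `alpha`, `gamma`, and `epsilon` are all hypothetical choices for illustration.

```python
import random

def q_learning_corridor(n_states=5, episodes=200, alpha=0.5, gamma=0.9,
                        epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor.

    The agent starts in state 0, and the goal is the rightmost state.
    Action 0 moves left and action 1 moves right.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            # Positive reward for reaching the goal, small penalty for every other step.
            reward = 1.0 if next_state == goal else -0.01
            best_next = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
    return q

q = q_learning_corridor()
policy = ["right" if right >= left else "left" for left, right in q[:-1]]
print(policy)
```

After training, the greedy policy moves right in every non-goal state, because moving right is how the agent earns the goal reward.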

⌚ What does "precision and recall" mean to you?

Here's an analogy to explain them:

Imagine that your girlfriend has surprised you on your birthday every year for the past ten years. One day she asks you, "Sweetie, do you remember all the birthday surprises from me?"

To keep the relationship on good terms, you need to recall all ten events from memory. Recall is the ratio of the number of events you correctly recall to the total number of events.

If you can correctly recall all 10 events, your recall ratio is 1.0 (100 percent). If you can correctly recall only 7 events, your recall ratio is 0.7 (70 percent).

However, some of your answers may be wrong.

Suppose, for example, that you give 15 answers, 10 of which are correct and 5 incorrect. You remembered every event that happened, but not with absolute accuracy.

Precision is the ratio of the number of events you recall correctly to the total number of events you recall (both correct and incorrect).

In the scenario above (10 real events; 15 answers, of which 10 are correct and 5 incorrect), your recall is 100 percent, but your precision is only 66.67 percent (10 / 15).
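The analogy maps onto the usual counts. Correct answers are true positives, wrong answers are false positives, and real events you forget are false negatives. Here is a minimal Python sketch that reproduces the numbers above (the function name is illustrative):

```python
def precision_recall(true_positives, false_positives, false_negatives):
    # Precision: how many of your answers were right.
    precision = true_positives / (true_positives + false_positives)
    # Recall: how many of the real events you managed to recall.
    recall = true_positives / (true_positives + false_negatives)
    return precision, recall

# Birthday analogy: 10 real events, 15 answers (10 correct, 5 wrong), nothing forgotten.
p, r = precision_recall(true_positives=10, false_positives=5, false_negatives=0)
print(f"precision={p:.4f}, recall={r:.4f}")  # precision=0.6667, recall=1.0000
```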

⌚ What do you mean by Supervised Learning?

Supervised learning is a machine learning approach that uses labelled training data to infer a function. The training data consist of a set of training examples.

For example, a person's gender could be predicted from their height and weight. Well-known supervised learning algorithms include:

Support Vector Machines

Decision Trees

Regression

Naive Bayes

K-Nearest Neighbours

Neural Networks
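Below is a minimal k-nearest-neighbour sketch of the height-and-weight example. The training points and the class names `class_a` and `class_b` are made up for illustration. A real model would need real labelled data.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """train: list of ((height_cm, weight_kg), label) pairs (hypothetical data)."""
    # Sort the labelled examples by Euclidean distance to the query point.
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    # Majority vote among the k closest labelled examples.
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Made-up, illustrative training examples.
training_data = [
    ((150, 50), "class_a"), ((155, 55), "class_a"), ((160, 52), "class_a"),
    ((180, 80), "class_b"), ((185, 85), "class_b"), ((178, 78), "class_b"),
]
print(knn_predict(training_data, (182, 79)))  # class_b
```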

⌚ What do you mean by Unsupervised Learning?

Unsupervised learning is another kind of machine learning technique, used to identify patterns in a given dataset. There is no dependent variable or label, so there is no known target to predict. Algorithms for unsupervised learning include:

Clustering

Anomaly Detection

Neural Networks

Latent Variable Models

⌚ What Are the Three Steps in the Machine Learning Model Building Process?

Building a machine learning model involves the following three steps:

Building a model

Select an appropriate algorithm for the model, then train it to meet the requirements.

Model Validation

The test data can be used to check the model's accuracy.

Use of the Model

After testing, make the necessary adjustments and apply the final model to real-world tasks.

Keep in mind that the model needs to be tested periodically to make sure it is still working properly, and updated so that it stays current.

⌚ What do you mean by deep learning?

Deep learning is a kind of machine learning that uses artificial neural networks, loosely inspired by how people learn, to build systems that learn from data. The name "deep" comes from the fact that these neural networks can have many layers.

One of the key distinctions between machine learning and deep learning is feature engineering. In classical machine learning, features are engineered manually, whereas in deep learning the neural network learns on its own which features to use (and which to ignore).

⌚ What do you mean by Semi-supervised Machine Learning?

Supervised learning uses fully labelled data, whereas unsupervised learning uses data with no labels at all.

In semi-supervised learning, the training data consist mostly of unlabelled data, with only a small portion labelled.

⌚ What do you mean by cross validation?

Cross-validation is a technique for estimating how well a model will perform on data it has not seen. In k-fold cross-validation, the data are split into k subsets (folds). The model is trained on k-1 of the k folds and tested on the remaining fold, which acts as held-out test data.

This is repeated so that every fold serves once as the test set. The final score is the average of the results across all k folds.
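The fold bookkeeping can be sketched in plain Python. This is a minimal illustration that assumes a hypothetical baseline model, which simply predicts the mean of its training targets and is scored by mean absolute error. The folds here are taken in order, whereas in practice the data are usually shuffled first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) pairs, one per fold."""
    # Spread any remainder over the first folds so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


def cross_validate(ys, k):
    """Average test error of a hypothetical 'predict the training mean' model."""
    errors = []
    for train_idx, test_idx in k_fold_indices(len(ys), k):
        # "Train" on k-1 folds: here, just compute the mean target.
        prediction = sum(ys[i] for i in train_idx) / len(train_idx)
        # Test on the held-out fold with mean absolute error.
        fold_error = sum(abs(ys[i] - prediction) for i in test_idx) / len(test_idx)
        errors.append(fold_error)
    return sum(errors) / len(errors)  # final score = average over the k folds


folds = list(k_fold_indices(10, k=3))
score = cross_validate([3.0, 4.0, 5.0, 4.0, 3.0, 5.0, 4.0, 4.0, 3.0, 5.0], k=3)
```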

⌚ What do you mean by Bias in Machine learning?

Data bias means that the data are inconsistent or unrepresentative in a systematic way. Bias can have several causes, and these causes are not mutually exclusive.

For instance, Amazon built a hiring engine that would take 100 resumes and return the top five candidates, who would then be hired.

The company found that the programme was not producing gender-neutral results and modified it to remove this bias.

⌚Give a clear example to illustrate false negative, false positive, true negative, and true positive.

Let's think about a fire emergency scenario:

True Positive: If a fire occurs and the alarm sounds.

There is a fire (the positive case), and the system's prediction came true.

False Positive: When the fire alarm sounds but there isn't any fire.

The system incorrectly predicted that there would be a fire, so the prediction is false.

False Negative: If there is a fire but no alarm sounds.

There was a fire, so the system's negative prediction was incorrect.

True Negative: If there is no fire and the alarm doesn't go off.

There is no fire (the negative case), and the system correctly predicted this.
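The four outcomes can be counted directly from paired lists of actual and predicted events. The log of five situations below is hypothetical.

```python
def confusion_counts(actual, predicted):
    """actual[i] is True if there was a fire; predicted[i] is True if the alarm sounded."""
    pairs = list(zip(actual, predicted))
    return {
        "TP": sum(a and p for a, p in pairs),              # fire, alarm
        "FP": sum((not a) and p for a, p in pairs),        # no fire, alarm
        "FN": sum(a and (not p) for a, p in pairs),        # fire, no alarm
        "TN": sum((not a) and (not p) for a, p in pairs),  # no fire, no alarm
    }

# Hypothetical log of five situations.
fire = [True, False, True, False, False]
alarm = [True, True, False, False, False]
print(confusion_counts(fire, alarm))  # {'TP': 1, 'FP': 1, 'FN': 1, 'TN': 2}
```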

⌚ Distinguish between classification and regression.

Classification sorts data into distinct categories and produces discrete outputs.

For instance, classification sorts emails into spam and non-spam. Regression, on the other hand, works with continuous data, for instance, forecasting a stock's price at a specific moment.

Classification is used to assign the output to a category. For instance, will it be warm or cold tomorrow?

Regression, on the other hand, is used to predict a continuous value from the relationship that the data show. For instance, what will the temperature be tomorrow?
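The difference shows up in the type of output. As a sketch on made-up daily temperatures, a least-squares line produces a continuous forecast (regression). Thresholding that forecast at an assumed 22 °C turns it into a warm/cold label (classification).

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: day index -> temperature in °C.
days = [1, 2, 3, 4, 5]
temps = [20.0, 21.0, 22.5, 23.0, 24.5]
slope, intercept = fit_line(days, temps)

forecast = intercept + slope * 6              # regression: a continuous value (about 25.5)
label = "warm" if forecast >= 22 else "cold"  # classification: a discrete category
```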

⌚ What is SVM?

Support Vector Machine (SVM) is one of the most popular supervised learning algorithms. It can be used for both classification and regression problems, but it is mostly used for classification.

The goal of the SVM algorithm is to find the best line or decision boundary that divides n-dimensional space into classes, so that new data points can easily be placed in the correct category in the future. This optimal decision boundary is called a hyperplane.

SVM selects the extreme points (vectors) that help define the hyperplane. These extreme cases are called support vectors, and they give the algorithm its name. Consider the diagram below, where a decision boundary or hyperplane is used to separate two distinct categories:

Linear SVM: Linear SVM is used for data that can be divided into two classes using a single straight line. This type of data is called linearly separable data, and the classifier employed is known as a Linear SVM classifier.

Non-linear SVM: Non-linear SVM is used for non-linearly separable data. If a dataset cannot be classified using a straight line, it is considered non-linear data, and the classifier used is called a Non-linear SVM classifier.
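Production libraries solve the SVM optimisation problem with dedicated solvers. As a minimal sketch, here is a linear SVM trained by stochastic subgradient descent on the regularised hinge loss, using made-up 2-D data. The hyperparameters `lam`, `lr`, and `epochs` are assumed values.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.1, epochs=200):
    """Fit w, b for the decision rule sign(w . x + b); labels must be +1 or -1."""
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Hinge loss is active (point inside the margin or misclassified):
                # step toward classifying it correctly, plus the regularisation shrink.
                w = [wi + lr * (y * xi - lam * wi) for wi, xi in zip(w, x)]
                b += lr * y
            else:
                # Point is safely outside the margin: only the regularisation term applies.
                w = [wi - lr * lam * wi for wi in w]
    return w, b

def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

# Made-up, linearly separable 2-D data.
points = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -1), (-1, -3)]
labels = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(points, labels)
```

The regularisation term keeps `w` small, which corresponds to a wide margin. The hinge term only reacts to points that fall inside the margin, and those points play the role of support vectors.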

⌚ What are the different kernels in SVM?

Commonly used SVM kernels include:

Linear kernel: A linear kernel is used when the data can be separated linearly.

Polynomial kernel: A polynomial kernel is often used when discrete data lack a natural notion of smoothness.

Radial basis kernel: A radial basis function (RBF) kernel produces flexible, non-linear decision boundaries, so it can separate two classes that a linear kernel cannot.

Sigmoid kernel: The sigmoid kernel uses the same tanh function that serves as an activation function in neural networks.
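The four kernels can be written as plain functions of two feature vectors. This sketch uses their common textbook forms. The default parameter values (`degree`, `coef0`, `gamma`, `alpha`) are assumptions chosen for illustration.

```python
import math

def linear_kernel(x, z):
    return sum(a * b for a, b in zip(x, z))

def polynomial_kernel(x, z, degree=3, coef0=1.0):
    # (x . z + c)^d implicitly includes feature interactions up to degree d.
    return (linear_kernel(x, z) + coef0) ** degree

def rbf_kernel(x, z, gamma=0.5):
    # exp(-gamma * ||x - z||^2): close to 1 for nearby points, near 0 for distant ones.
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)

def sigmoid_kernel(x, z, alpha=0.1, coef0=0.0):
    # tanh(alpha * x . z + c), the same tanh used as a neural-network activation.
    return math.tanh(alpha * linear_kernel(x, z) + coef0)

x, z = (1.0, 2.0), (3.0, 4.0)
values = {
    "linear": linear_kernel(x, z),          # 11.0
    "polynomial": polynomial_kernel(x, z),  # 1728.0
    "rbf": rbf_kernel(x, z),                # exp(-4)
    "sigmoid": sigmoid_kernel(x, z),        # tanh(1.1)
}
```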

⌚ What do you mean by clustering in Machine Learning?

Clustering is an unsupervised learning method that puts data points into groups. Given a collection of data points, a clustering algorithm assigns each point to an appropriate group. Points in the same group share similar features and properties, while points in different groups have different ones. Clustering is widely used in statistical data analysis.

K-means clustering: K-means clustering is frequently applied when the data have no obvious groups or categories. It lets you uncover hidden patterns in the data, which can then be used to divide the data into categories. The variable k is the number of groups into which the data are divided, and data points are clustered according to how similar their attributes are. New data points are then labelled by assigning them to the cluster with the nearest centroid.
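Here is a minimal sketch of the k-means loop (Lloyd's algorithm). It assumes 2-D points, Euclidean distance, and centroids initialised from k randomly chosen data points. The toy points are made up.

```python
import math
import random

def k_means(points, k, iterations=100, seed=0):
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: assignments no longer change
            break
        centroids = new_centroids
    return centroids, clusters

points = [(1, 1), (1.5, 2), (2, 1), (8, 8), (8.5, 9), (9, 8)]
centroids, clusters = k_means(points, k=2)
```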

DBSCAN: DBSCAN (Density-Based Spatial Clustering of Applications with Noise) is a density-based clustering algorithm whose approach resembles mean-shift clustering. As with mean-shift, the number of clusters does not need to be specified in advance. Unlike mean-shift clustering, however, DBSCAN recognises outliers and treats them as noise.
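Here is a compact DBSCAN sketch, assuming Euclidean distance. The parameter names `eps` (neighbourhood radius) and `min_samples` (number of points needed to form a dense core, counting the point itself) are illustrative. Points that cannot be reached from any dense core are labelled -1, meaning noise.

```python
import math

NOISE = -1

def dbscan(points, eps, min_samples):
    """Return one cluster label per point; NOISE (-1) marks outliers."""
    labels = [None] * len(points)

    def neighbours(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_samples:
            labels[i] = NOISE  # may later be relabelled as a border point
            continue
        labels[i] = cluster_id  # i is a core point: start a new cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbours = neighbours(j)
            if len(j_neighbours) >= min_samples:
                queue.extend(j_neighbours)  # j is also a core point: keep expanding
        cluster_id += 1
    return labels

points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (50, 50)]
labels = dbscan(points, eps=1.5, min_samples=3)
```

The number of clusters (two here) is never passed in. The isolated point at (50, 50) ends up labelled as noise.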

⌚ How Should Missing or Invalid Data Be Handled in a Dataset?

One of the simplest ways to deal with missing or corrupted data is to drop the affected rows or columns, or to replace the missing values with a different value.

In pandas, there are two practical methods:

Find the rows/columns with missing data using isnull() and drop them with dropna().

Use fillna() to replace the missing values with a placeholder value.
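Here is a short pandas sketch of both methods on a small made-up DataFrame. The column names and the choice to fill `age` with the column mean are assumptions.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "age": [25, np.nan, 31, 40],
    "city": ["Paris", "Lyon", None, "Nice"],
})

missing_per_column = df.isnull().sum()  # count missing values in each column

# Method 1: drop every row that contains a missing value.
dropped = df.dropna()

# Method 2: replace missing values with placeholders (per-column values).
filled = df.fillna({"age": df["age"].mean(), "city": "unknown"})
```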

⌚ What distinguishes machine learning from deep learning?

Deep Learning: Deep learning enables machines to make a variety of business-related decisions using artificial neural networks. It typically requires a huge quantity of training data, and because training demands a lot of computational power, it also requires high-end computers. The networks learn a variety of traits and features directly from the provided data, and the problem is solved end-to-end.

Machine learning: Using insights obtained from historical data, machine learning enables machines to make business decisions without outside assistance. Most features must be explicitly coded and understood beforehand, but machine learning systems need only a comparatively small amount of data to train. A particular business problem is broken into smaller parts that are solved separately with machine learning, and the solutions are then combined.

⌚ What do you mean by entropy in Machine learning?

In machine learning, entropy quantifies the randomness (uncertainty) of the input data. The more entropy the data contain, the harder it is to draw meaningful conclusions from them. Take flipping a fair coin as an illustration. The toss favours neither heads nor tails, so the outcome is random. There is no pattern linking the act of flipping to the outcome, which makes the result of any number of tosses impossible to predict.
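Entropy is usually computed with Shannon's formula, H = -Σ p log2 p. A minimal sketch follows, written with the equivalent form Σ p log2(1/p). The fair-coin case from the text reaches the maximum of 1 bit, while the made-up biased and constant sequences score lower.

```python
import math
from collections import Counter

def entropy(outcomes):
    """Shannon entropy (in bits) of the empirical distribution of `outcomes`."""
    counts = Counter(outcomes)
    total = len(outcomes)
    return sum((c / total) * math.log2(total / c) for c in counts.values())

fair_coin = entropy(["H", "T", "H", "T"])    # 1.0 bit: maximally unpredictable
biased_coin = entropy(["H", "H", "H", "T"])  # about 0.811 bits: more predictable
certain = entropy(["H", "H", "H", "H"])      # 0.0 bits: no randomness at all
```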

⌚ What is Overfitting in Machine learning?

In machine learning, overfitting occurs when a statistical model describes random error or noise instead of the underlying relationship. Overfitting is often seen when a model is overly complex, for example when it has too many parameters relative to the amount of training data. An overfitted model performs well on its training data but poorly on new data.

⌚ What should be done to prevent overfitting?

Overfitting is more likely when a model learns from a small dataset, so using more data can help prevent it. If we must build a model from only a small dataset, we can use a technique called cross-validation. In this strategy, the data are split into two sets: one of known data for training and one of unseen data for testing. The goal of cross-validation is to provide data that "test" the model during its training phase. When there is enough data, "isotonic regression" is also used to avoid overfitting.

⌚ How to Handle Outlier Values in Machine learning?

An observation that differs significantly from other observations in the dataset is referred to as an outlier. Tools for finding outliers include

There are three straightforward strategies for dealing with outliers:

We may discard them.

We can flag them as outliers and include that flag as a feature.

We can also transform the feature to reduce the impact of the outliers.
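The three strategies can be sketched in plain Python. The outliers are detected with the interquartile-range (IQR) rule and its common 1.5 × IQR fences. This detection method is an assumption, since the text does not name one. Capping stands in for "transforming the feature", and the data are made up.

```python
import statistics

def iqr_bounds(values, factor=1.5):
    """Return (low, high) fences; values outside them are treated as outliers."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - factor * iqr, q3 + factor * iqr

values = [10, 12, 11, 13, 12, 11, 95]  # hypothetical feature with one extreme value
low, high = iqr_bounds(values)

# Strategy 1: discard the outliers.
kept = [v for v in values if low <= v <= high]
# Strategy 2: keep every value but add an "is_outlier" flag as a new feature.
flagged = [(v, not (low <= v <= high)) for v in values]
# Strategy 3: transform the feature by capping (clipping) values at the fences.
capped = [min(max(v, low), high) for v in values]
```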

⌚ What do you mean by Content-Based filtering and Collaborative filtering?

Collaborative filtering: Collaborative filtering is a tried-and-true method for personalised content recommendations. This type of recommendation system predicts which new content a user will like by matching the user's interests with the preferences of many other users.

Content-based filtering: Content-based recommender systems focus only on the preferences of the individual user. New recommendations are based on the user's previous choices and on content similar to them.
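Here is a minimal content-based sketch. It assumes that each item is described by a hypothetical feature vector (action, comedy, romance) and that similarity is measured by cosine similarity. Collaborative filtering can reuse the same similarity function, applied to vectors of user ratings instead of item features.

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical item profiles: (action, comedy, romance) scores.
items = {
    "movie_a": (1.0, 0.0, 0.0),
    "movie_b": (0.9, 0.1, 0.0),
    "movie_c": (0.0, 0.2, 1.0),
}

def recommend(liked_item):
    """Recommend the other item whose profile is most similar to the liked one."""
    candidates = [name for name in items if name != liked_item]
    return max(candidates, key=lambda name: cosine_similarity(items[liked_item], items[name]))

recommendation = recommend("movie_a")
```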

⌚ There are many machine learning algorithms. How do you decide which algorithm to use for a given dataset?

The choice of machine learning method depends largely on the type of data in the dataset. If the data are linear, we use linear regression. If the data show non-linearity, the bagging technique performs better. Decision trees or SVMs can be used to analyse and interpret data for business objectives. If the dataset includes images, video, or audio, neural networks are likely to give more accurate results.

No single metric determines which algorithm to apply to a particular problem or dataset. To find the best-fitting method, we need to explore the data using exploratory data analysis (EDA) and understand the purpose for which the dataset is being used. That is why it is important to study the available algorithms thoroughly.

⌚ What do you mean by PCA in machine learning?

In the real world, we work with multidimensional data. As the number of dimensions grows, visualising the data and computing on it become more difficult. In such cases, it may be necessary to reduce the dimensionality of the data so that it can be analysed and visualised easily. This is done by:

getting rid of unnecessary dimensions

keeping only the most relevant dimensions

This is where Principal Component Analysis (PCA) comes in.

The goal of PCA is to find a new set of orthogonal, uncorrelated dimensions and rank them according to the variance they capture.
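One standard way to compute PCA is an eigendecomposition of the covariance matrix. The minimal NumPy sketch below follows that approach on made-up 3-D data in which the second feature is almost a copy of the first, so a single component should capture nearly all of the variance.

```python
import numpy as np

def pca(data, n_components):
    """Project `data` (rows = samples) onto its top principal components."""
    centered = data - data.mean(axis=0)                      # PCA works on zero-mean features
    covariance = np.cov(centered, rowvar=False)              # feature-by-feature covariance
    eigenvalues, eigenvectors = np.linalg.eigh(covariance)   # symmetric matrix, ascending order
    order = np.argsort(eigenvalues)[::-1]                    # rank directions by variance
    components = eigenvectors[:, order[:n_components]]       # orthogonal, uncorrelated directions
    return centered @ components, eigenvalues[order]

rng = np.random.default_rng(0)
x = rng.normal(size=200)
data = np.column_stack([
    x,
    2 * x + rng.normal(scale=0.1, size=200),
    rng.normal(scale=0.1, size=200),
])
projected, variances = pca(data, n_components=1)
```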

⌚ Explain Confusion Matrix?

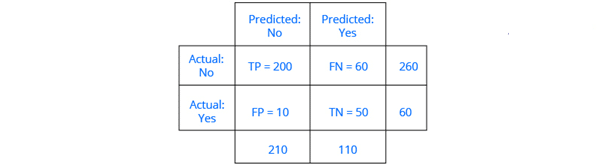

A confusion matrix summarises the predictions of a classification model and is used to explain the model's performance. It highlights which classes the model confuses with each other.

The confusion matrix shows the number of correct and incorrect predictions, broken down by type of error, which makes it useful for judging the reliability of the model.

As an illustration, consider the example confusion matrix. For a classification model, it contains the true positive (TP), true negative (TN), false positive (FP), and false negative (FN) counts. The model's accuracy can then be calculated with the following formula:

Accuracy = (TP + TN) / (TP + TN + FP + FN)

Using the True Positive, True Negative, False Positive, and False Negative values in this example, the accuracy is 0.78.

⌚ Explain why validation and test datasets are required.

When building a model, data is divided into three groups:

Training dataset: The training dataset is used to build the model and adjust its parameters. Accuracy measured on the training dataset cannot be relied upon, because the model may give wrong results when it receives new inputs.

Validation dataset: The validation dataset is used to examine the model's response. The hyperparameters are then tuned based on the model's performance on the validation dataset. Because the model's response is assessed on the validation set, the model is indirectly trained on it. This can cause the model to overfit that particular set of data, so it may not be robust enough to respond appropriately to real-world data.

Test dataset: The test dataset is the portion of the larger dataset that has not been used to train the model, so the model has never seen it. The test dataset is therefore used to estimate how the finished model performs on unseen data. Note: the model is exposed to the test dataset only after the hyperparameters have been tuned on the validation dataset.
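Here is a minimal sketch of a three-way split. The 70/15/15 proportions and the fixed random seed are assumptions, not a rule.

```python
import random

def train_val_test_split(rows, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle once, then carve off test and validation sets; the rest is training data."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]                 # touched only once, at the very end
    val = shuffled[n_test:n_test + n_val]    # used to tune hyperparameters
    train = shuffled[n_test + n_val:]        # used to fit the model's parameters
    return train, val, test

train, val, test = train_val_test_split(range(100))
```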

⌚ Explain how Sigmoid and Softmax functions differ.

Sigmoid and softmax functions differ in the kind of classification task they are used for. The sigmoid function is used for binary classification, whereas the softmax function is used for multi-class classification.
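The sketch below implements both functions. Sigmoid maps one score to a probability for the positive class. Softmax maps a vector of class scores to probabilities that sum to 1. The example scores are made up.

```python
import math

def sigmoid(z):
    """Map one score to the probability of the positive class (binary case)."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(scores):
    """Map a list of class scores to probabilities that sum to 1 (multi-class case)."""
    shift = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

p_positive = sigmoid(0.0)          # 0.5
probs = softmax([2.0, 1.0, 0.1])   # three probabilities, largest for the first class
```

With two classes, `softmax([z, 0.0])[0]` equals `sigmoid(z)`, so the sigmoid can be viewed as the two-class special case of softmax.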

⌚ Explain F1 score?

The F-score, or F1-score, measures the overall accuracy of a binary classification model. To fully grasp the F1-score, you first need to understand precision and recall, two other accuracy measures.

Precision is the ratio of True Positives to all the positive predictions the model makes.

Precision = No. of True Positives / (No. of True Positives + No. of False Positives)

Recall is the ratio of True Positives to the total number of actually positive examples given to the model.

Recall = No. of True Positives / (No. of True Positives + No. of False Negatives)

F1-score = 2 × (Precision × Recall) / (Precision + Recall)
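The three formulas combine into a few lines of Python. This sketch reuses the counts from the birthday analogy earlier (10 true positives, 5 false positives, 0 false negatives), for which precision is 2/3, recall is 1, and F1 is therefore 0.8.

```python
def f1_score(true_positives, false_positives, false_negatives):
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / (true_positives + false_negatives)
    # Harmonic mean: high only when precision and recall are both high.
    return 2 * (precision * recall) / (precision + recall)

f1 = f1_score(true_positives=10, false_positives=5, false_negatives=0)  # about 0.8
```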

⌚ Is high variance in a dataset good or bad? If bad, how would you handle it?

A higher variance means that the feature's values cover a wide range and are widely dispersed. A feature with high variance is typically regarded as being of lower quality.

We could use the bagging algorithm to handle datasets with high variance. Bagging repeatedly draws random samples from the data with replacement (bootstrap sampling) and trains a model on each sample. The models' predictions are then combined by voting (or, for regression, by averaging).
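Here is a minimal bagging sketch. It assumes one-split decision stumps as the base model, which is a hypothetical choice since the text does not name one. It also assumes a single numeric feature with 0/1 labels and made-up data.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Fit a one-split decision stump on a 1-D feature with 0/1 labels."""
    best = None
    for threshold in sorted(set(xs)):
        for left_label in (0, 1):
            preds = [left_label if x <= threshold else 1 - left_label for x in xs]
            errors = sum(p != y for p, y in zip(preds, ys))
            if best is None or errors < best[0]:
                best = (errors, threshold, left_label)
    _, threshold, left_label = best
    return lambda x: left_label if x <= threshold else 1 - left_label

def bagging(xs, ys, n_models=25, seed=0):
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: draw len(xs) rows at random, with replacement.
        idx = [rng.randrange(len(xs)) for _ in range(len(xs))]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))

    def predict(x):
        # Combine the models' outputs by majority vote.
        votes = Counter(model(x) for model in models)
        return votes.most_common(1)[0][0]

    return predict

xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
predict = bagging(xs, ys)
```

Each stump sees a slightly different bootstrap sample. Averaging their votes smooths out the quirks of any single sample, and this is how bagging reduces variance.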

⌚ What do you mean by Parametric and Non-Parametric Models?

Parametric models have a fixed, limited number of parameters, so only the model's parameters need to be known to make predictions on new data.

Non-parametric models place no fixed limit on the number of parameters they can use, which makes them more flexible. To make predictions on new data, you need to know both the current state of the data and the model's parameters.

⌚ What do you mean by Type I and Type II errors?

Type I: A Type I error, also known as a false positive, occurs when a test's results reject a condition (hypothesis) that is actually true.

An example of a false positive is when someone is diagnosed with depression even though they are not actually depressed.

Type II: A Type II error, also known as a false negative, occurs when a test's results accept a hypothesis that is actually false.

For instance, a person's CT scan might indicate that they don't have an illness when in fact they do. In this case, the test accepts the false assumption that the subject is disease-free, which is a false negative.

⌚ Explain Dimensionality Reduction.

In the real world, machine learning models are built using features and parameters. These features can have many dimensions. Some of the features may not matter, and the high dimensionality makes the data hard to visualise.

Dimensionality reduction is then used to eliminate irrelevant and redundant features with the help of principal variables. These principal variables are a subset of the original variables and preserve their characteristics.