Master Data Structures & Algorithms with Logicmojo DSA Course

Learn DSA for 4 months and System Design for 3 months, and get placed at product-based companies after completing the course.

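```javascript
// Recursively sorts arr[left..right] in place and returns arr.
// partition() is expected to move the pivot to its final sorted index
// and return that index.
function quickSort(arr, left = 0, right = arr.length - 1) {
  if (left < right) {
    const pivotIndex = partition(arr, left, right);
    quickSort(arr, left, pivotIndex - 1); // Sort elements left of the pivot
    quickSort(arr, pivotIndex + 1, right); // Sort elements right of the pivot
  }
  return arr;
}
```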
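The `quickSort` snippet calls a `partition` helper that the page does not show. Below is a minimal sketch of one possible helper. It assumes the Lomuto partition scheme, the last element as the pivot, and ascending order under `<`. It places the pivot at its final index and returns that index, which is the contract the `pivotIndex - 1` / `pivotIndex + 1` recursion relies on. This is an illustrative assumption, not the course's own implementation.

```javascript
// Hypothetical helper (Lomuto scheme, assumed): the page does not define
// partition(). Uses arr[right] as the pivot, moves every element smaller
// than the pivot to its left, and returns the pivot's final index.
function partition(arr, left, right) {
  const pivot = arr[right];
  let i = left; // next slot for an element smaller than the pivot
  for (let j = left; j < right; j++) {
    if (arr[j] < pivot) {
      [arr[i], arr[j]] = [arr[j], arr[i]]; // swap via destructuring
      i++;
    }
  }
  // Put the pivot between the smaller and the larger-or-equal elements.
  [arr[i], arr[right]] = [arr[right], arr[i]];
  return i;
}

const sample = [3, 1, 2];
console.log(partition(sample, 0, sample.length - 1), sample); // 1 [ 1, 2, 3 ]
```

With this helper, `quickSort([5, 2, 9, 1, 5])` returns `[1, 2, 5, 5, 9]`. Lomuto is used here for brevity. Hoare's scheme does fewer swaps, but it returns a split point rather than the pivot's final index, so it would not fit the recursion above without changes.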

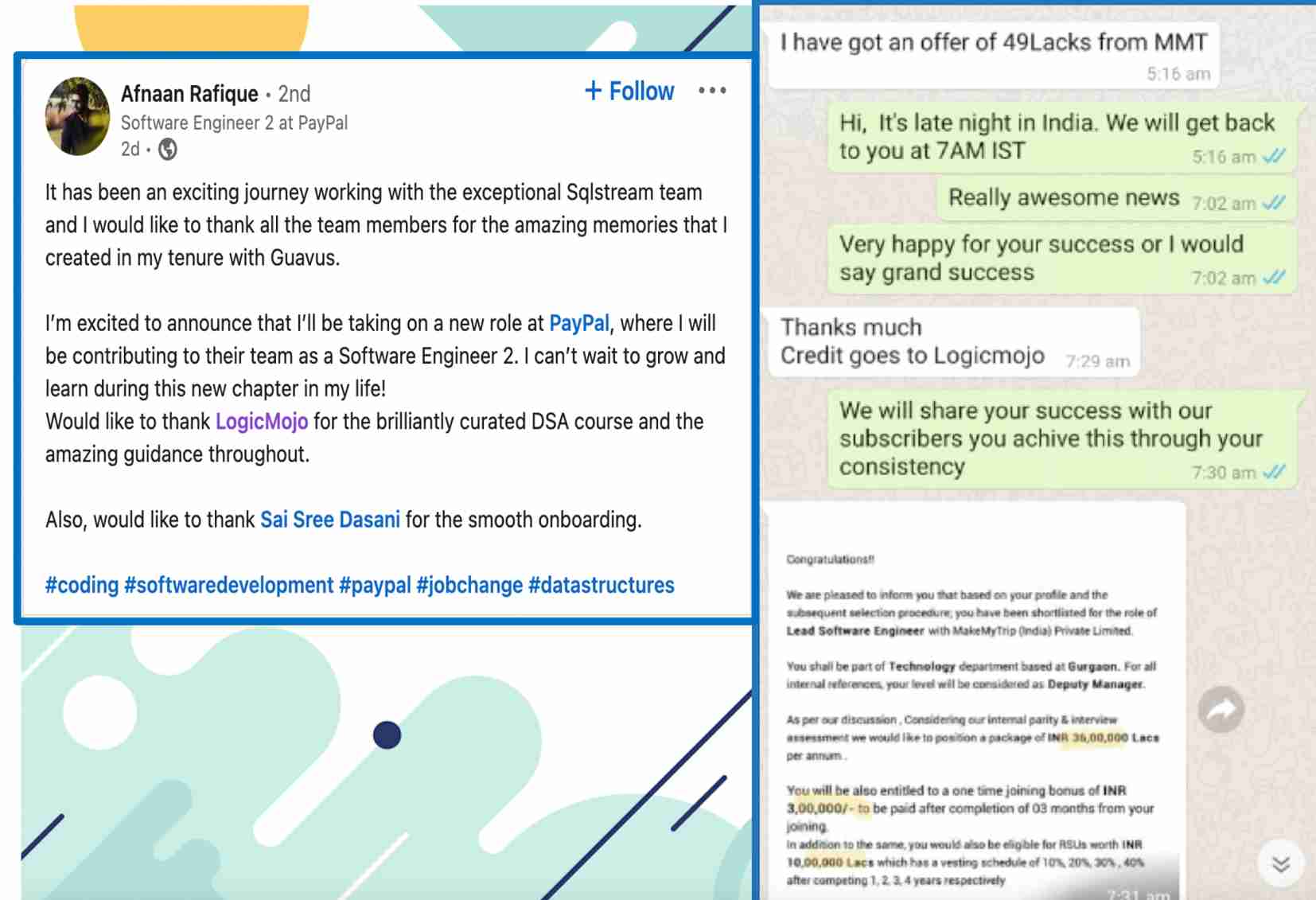

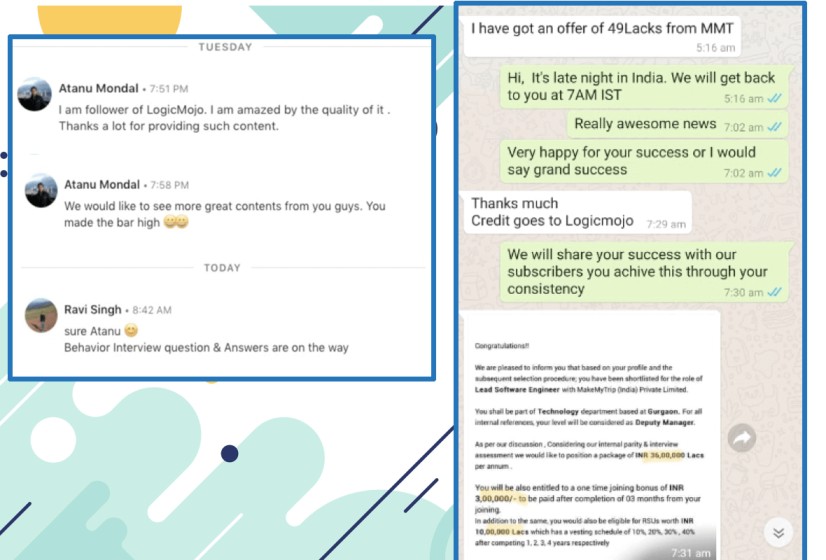

Our students have gotten job offers from:

"

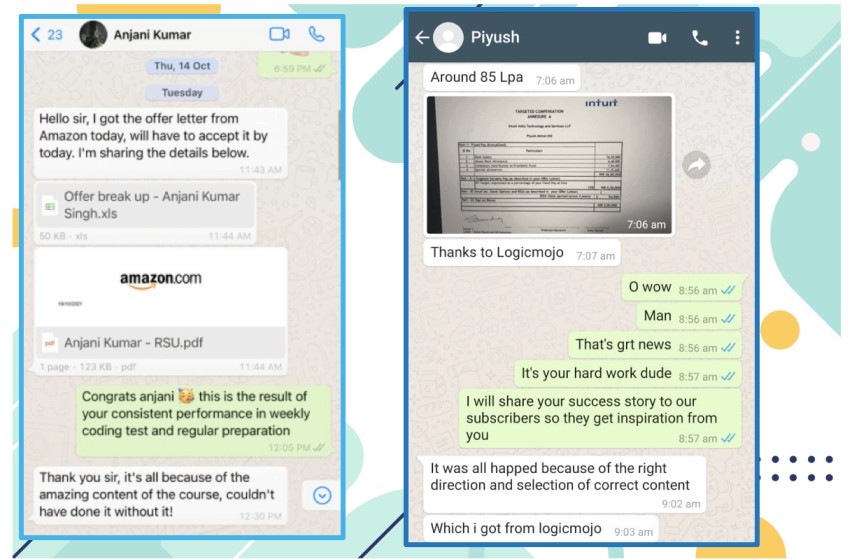

Amazon

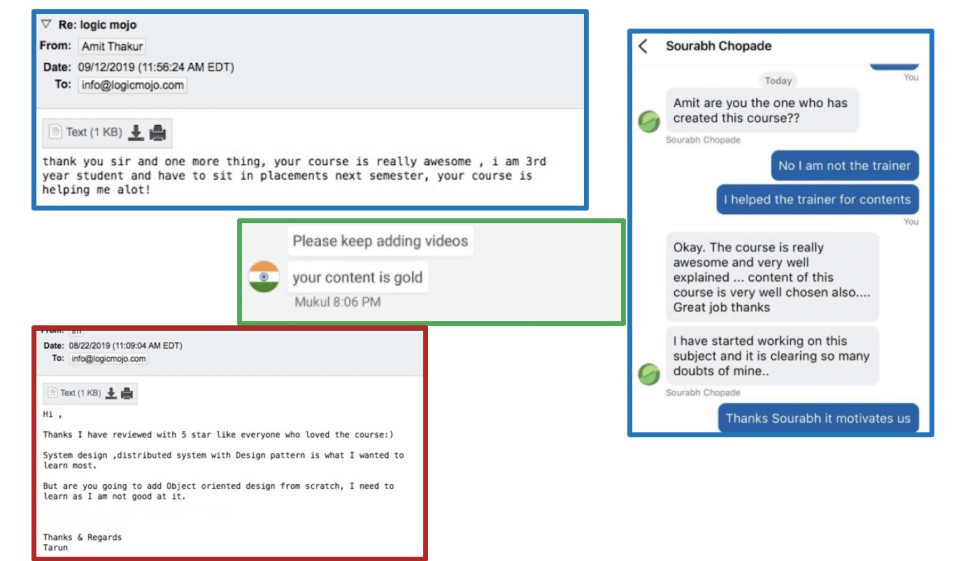

Must recommend for aspirants who are preparing for Amazon, Google, Microsoft, and top product-based company interviews. Logicmojo focuses on techniques for solving data structure problems.

Anjani Kumar

Microsoft

The course curriculum is of the best quality, along with the best learning experience from my tutor. Best course to prepare for MAANG company interviews. I cracked Paytm, Adobe, Salesforce, and Microsoft, and finally joined Microsoft at 1.3 Cr LPA, Hyderabad MS IDC.

Piyush

WalmartLabs

Excellent course for interview preparation: very straight to the point, with in-depth coverage of every point. Nice way of explaining very complex problems in an easy way. It helped me land an Architect position at WalmartLabs as well as VISA.

Diwakar Choudhary

Microsoft

I would say the best part is the instructor's explanations: concise and clear. Great quality of online materials and classes; it covers the algorithms, system design problems, and design patterns asked during interviews.

Aravindo Swain

Flipkart

Great course! It definitely opened some new doors for me in understanding how algorithms work and in implementing solutions for the different exercises.

Rajnish Kumar

Cisco

The course curriculum is of the best quality, with good coding problems. It's like a quick interview preparation guide.

Priya Singh

WalmartLabs

Excellent course for interview preparation: very straight to the point, with in-depth coverage of every DSA topic. The instructor explains complex problems in a simple way. It’s the best course if you are aiming for the best-paying jobs in tech.

Diwakar Choudhary

Microsoft

Very well-arranged course with excellent lectures. It focuses on core concepts and techniques instead of grinding thousands of LeetCode problems. The problem set is more than enough for top tech interviews, including full stack developer roles.

Siddharth Pande

Live online interactive sessions