Logicmojo - Updated Jan 16, 2024

Logicmojo - Updated Jan 16, 2024

"Normalization" is a word widely used in Database Management System. Most of the interviewers pose questions based on this concept. This article throws light on this concept in detail.

Data duplication could occur in a large database defined as a single relation. This data repetition could lead to:

growing relationships tremendously.

Maintaining and updating data is difficult since it necessitates searching through numerous related entries.

Waste and inefficient use of resources and disc space.

Errors and inconsistencies are more likely to occur.

In order to solve these issues, we need to examine and break down relations with duplicate data into smaller, more manageable, and well structured format to fulfil our requirements.

This is done through different steps and the process is known as Normalisation.

What is Normalization?

The process of structuring of data belonging to a database is called as Normalization.

The use of normalization techniques decreases the redundancy present in a relation or collection of relations in a database.

The removal of unwanted features like Insertion, Update, and Deletion Anomalies is another purpose for it.

This process breaks tables which are huge and complex into small tables and creates a link among the newly formed small tables with the help of relationships.

Redundancy in the database table is reduced by using different Normal Forms.

Why do we need Normalization?

When a database does not function in a specific way, it gives rise to different anamolies. Anamolies, literally, means abnormalities.

These abnormalities pose as a bad dream for any database administrator.

Getting rid of these abnormalities is the major justification for normalizing the relationships.

A set of recommendations called "normalization" can help you create a solid database structure.

The anamolies occuring while modifying database can be classified briefly into three types.

✧Insertion Anamolies: This anamoly is caused when insertion of a new data(tuple) inside a relationship is not possible because of absence of some data.

✧Updation Anamolies: When numerous rows of data must be updated in order to update a single data value, this is known as an update anomaly.

✧Deletion Anamolies: The term "deletion anomaly" describes a circumstance in which some vital data is unintentionally lost when some other data is deleted.

Types of Normal Form

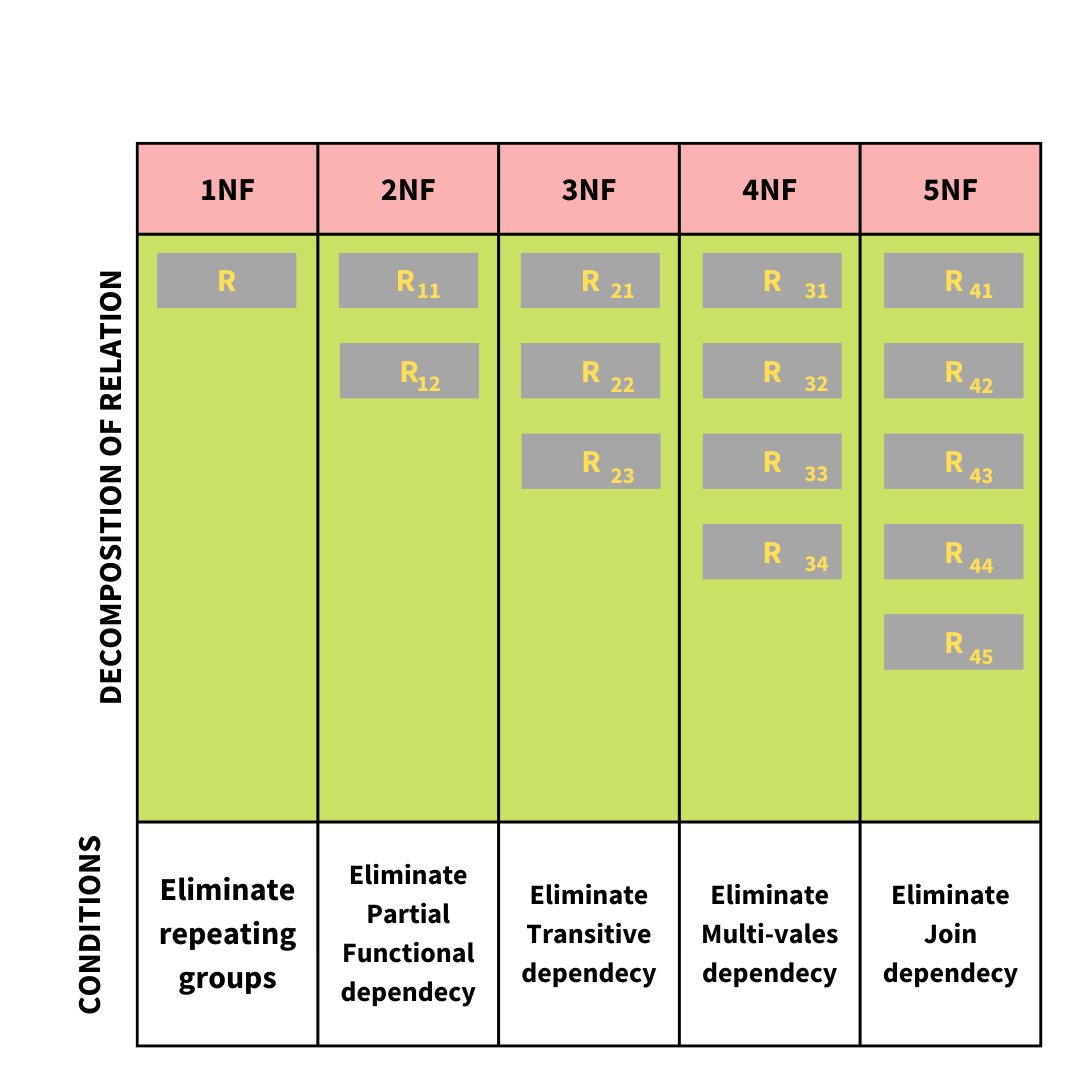

Normal forms refer to the stages that normalisation goes through.

The standard forms apply to interpersonal relationships.

If a relation fulfils certain constraints, it is said to be in a certain normal form.

There are a total of five(5) normal forms that are in practice. They are named as 1NF, 2NF, 3NF, 4NF and 5NF. The database is passed through each normal form one after the other. Note that data can only be fully normalized if it undergoes all the normal forms and passes all the tests.

The table below describes a brief of how the tables are broken down in each normal form

| Normal Forms | Description |

|---|---|

| 1NF | Any relation is said to be in 1NF if it contains only atomic values. |

| 2NF | Any relation is said to be in 2NF only if it is in 1NF AND all other attributes except the primary key are fully functionally dependent on the primary key. |

| 3NF | A relation is said to be in 3NF only if it is in 2NF AND there is no transition dependency between any attributes. |

| 4NF | A relation is said to be in 4NF only if it is in BCNF(will be discussed further) and the relation doesn't consist of any multivalued dependency |

| 5NF | A relation is said to be in 5NF only if it is in 4NF and the relation does not have any join dependancy, i.e. any join performed with respect to the attributes must not cause any loss. |

Note: BCNF is a special Normal form that is created because of some drawbacks in the 3NF which will be discussed further.

Some Important Concepts

Before getting into the different levels of normal forms let us focus on some concepts that are required to udnerstand the normal forms.

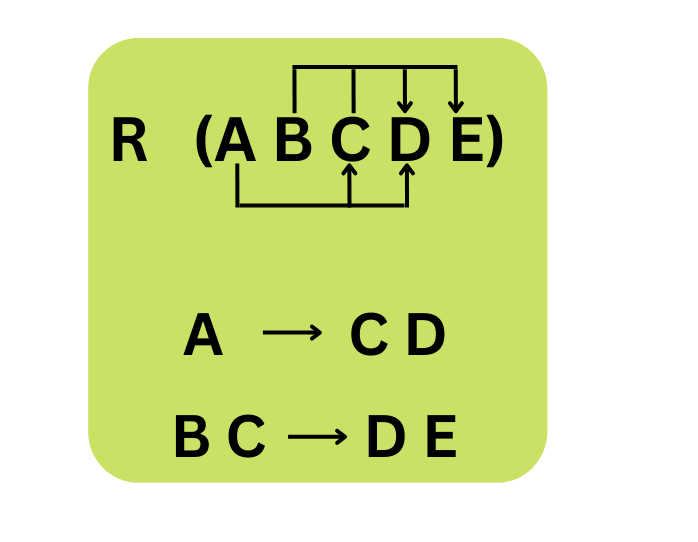

Functional Dependancy

A relation between any two attributes in a database table is called a functional dependency. It often exists in a table between the primary key and a non-key attribute.

A → B

This is the representation of a functional dependancy. Here, attribute B is functionally dependant on A. The left side of the arrow mark is called the determinant, while the right side is called as the dependent.

Let us look into an example to understand this better, Consider a table containing the data of students with the attributes student_id, student_name, student_class

| student_id | student_name | student_class |

|---|---|---|

| 101 | Anitha | 5A |

| 102 | Ankitha | 5A |

| 103 | Aryan | 5A |

| 104 | Brijesh | 5A |

| 105 | Dinesh | 5A |

In this table, The student_id can be considered as a primary key because it identifies each tuple uniquely. If we are aware of the student_id we can tell the name of the student. This can be written as

student_id → student_name

This implies that the student_name is functionally dependant on the student_id.

Types of Functional Dependency

Trivial Functional Dependency: Any relation between A and B is said to be a Trivial functional dependency if B is held by A and B is a subset of A.

Relations like A is held by A(A → A) and B is held by B(B → B)

For example, In the above table {student_id, student_name} → student_id can be considered as a trivial functional dependency. This is because student_id is a subset of {student_id, student_name}. Similarly, student_id → student_id and student_name → student_name are said to be in trivial functional dependancy.

Non-Trivial Functional Dependency: Any relation between A and B is said to be a Non-Trivial Functional dependency if B is held by A and B is not a subset of A.

For example, student_id → student_name and student_name → student_dob.

Inference Rules

The fundamental axioms of Armstrong provide the basis for inference rules. The Functional dependencies that are present in a relational database are deduced using Armstrong's axioms. Inference rule can be taken to be as a kind of assertion. It can be used to derive additional functional dependencies from a set of FDs. It can also be used to infer many functional dependencies in addition to the ones already present, from the initial set.

There are 6 inference rules present for functional dependency

IR1 - Reflexive Rule: According to this rule, if B(a set of attributes) is a subset of A(another set of attributes), then B is held by A.

If A ⊇ B, then A → B

IR2 - Augmentation Rule: According to this rule, B is held by A. Then BC is held by AC for any set of attributes C. This is also knows as the partial dependency rule.

If A → B, then AC → BC

IR3 - Transitive Rule: According to this rule, B is held by A and C is held by C. Then C must also be held by A for any set of attributes A, B and C.

If A → B and B → C, then A → C

The other 3 Inference rules are derived from the above inferences rule

IR4 - Union Rule: According to this rule, B is held by A and C is also held by A. Then both B and C together is also held by A.

If A → B and A → C, then A → BC //This can be proved by the following steps. 1. A → B //given 2. A → C //given 3. A → AB //using IR2 on 1 by augmenting it with A. Where AA = A 4. AB → BC //Using IR2 on 2 by augmenting it with B. 5. A → BC //Using IR3 on 3 and 4

IR5 - Decomposition Rule: According to this rule, if BC is held by A, Then B is held by A and C is also held by A. This is also called as the project rule.

If A → BC , then A → B and A → C //This can be proved by the following steps. 1. A → BC //given 2. BC → B //using IR1 3. A → B //using IR3 on 1 and 2 4. BC → C //using IR1 5. A → C //using IR3 on 1 and 4

IR6 - Pseudo Transitive Rule: According to this rule, if B is held by A and D is held by BC, then D is also held by AC.

If A → B and BC → D, then AC → D //This can be proved by the following steps. 1. A → B //given 2. BC → D //given 3. AC → BC //using IR2 on 1 4. AC → D //using IR3 on 2 and 3

1NF

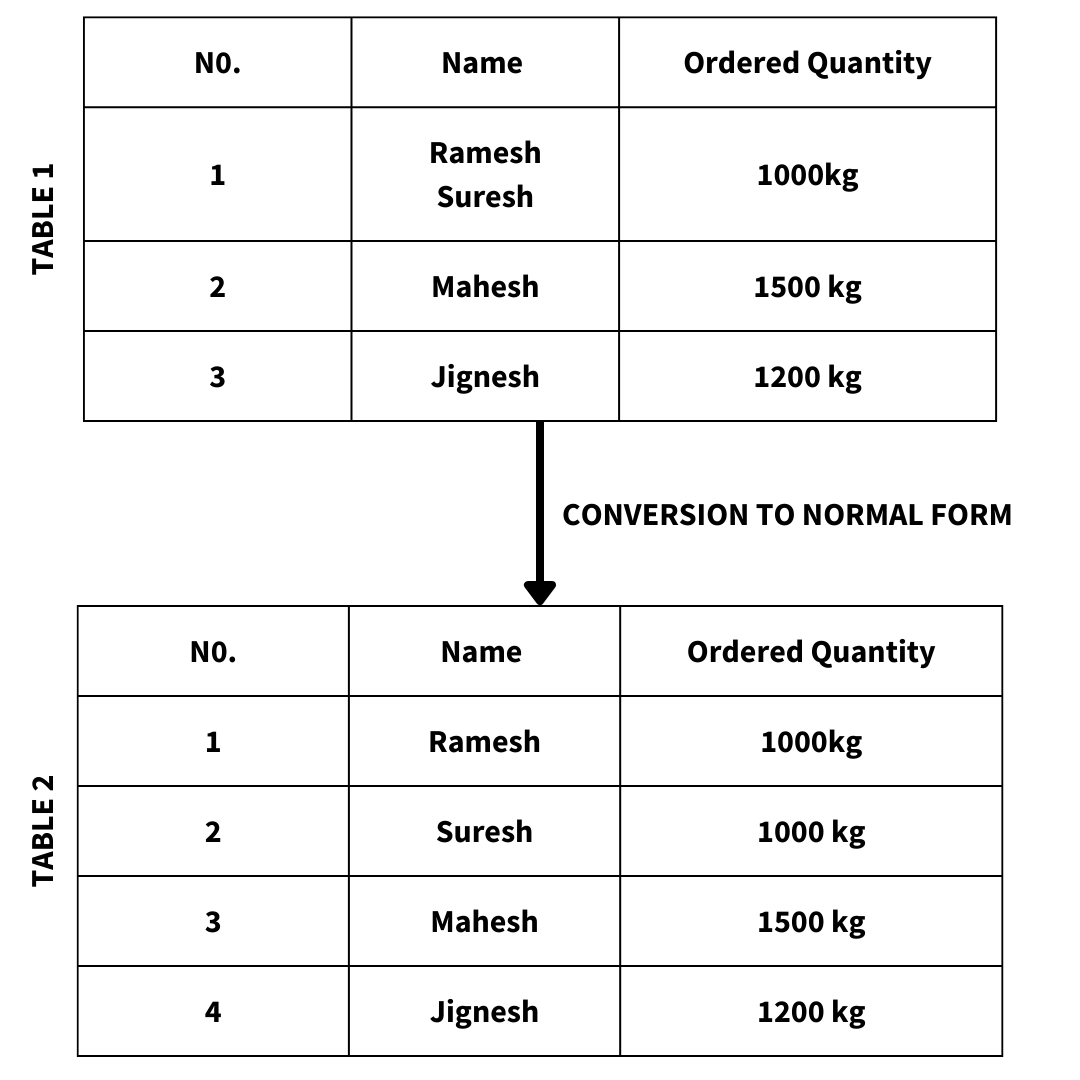

The first Normal Form or 1NF is the most simple normal form.

It specifies that any attributes should not have complex values. All the values should be broken to their units or atomic values.

This can be understood with the help of an example.

| student_id | student_name | student_subjects |

|---|---|---|

| 101 | Anitha | Math, Science |

| 102 | Ankitha | Science, English |

| 103 | Aryan | Math |

This table is transformed into the below table after the application of 1NF.

| student_id | student_name | student_subjects |

|---|---|---|

| 101 | Anitha | Maths |

| 101 | Anitha | Science |

| 102 | Ankitha | Science |

| 102 | Ankitha | English |

| 103 | Aryan | Math |

2NF

One of the major conditions in 2NF is that the relation must be in 1NF.

In the 2NF all the non key attributes present in the relation must be fully functionally dependant on the primary key of the relation. For example, Let us consider the student table obtained in 1NF.

| student_id | student_name | student_subjects |

|---|---|---|

| 101 | Anitha | Maths |

| 101 | Anitha | Science |

| 102 | Ankitha | Science |

| 102 | Ankitha | English |

| 103 | Aryan | Math |

In this table we can say that there are no primary keys because the student_id is not unique for every tuple. But student_id can be considered a candidate key. We see that the student_name is dependant on student_id which is a candidate key and not on any primary key. This violates the 2NF. This can be solved by breaking the table into two different tables as shown below.

Student_Detail Table

| student_id | student_name |

|---|---|

| 101 | Anitha |

| 102 | Ankitha |

| 103 | Aryan |

| student_id | student_subjects |

|---|---|

| 101 | Maths |

| 101 | Science |

| 102 | Science |

| 102 | English |

| 103 | Math |

3NF

If a relation is in 2NF AND does not have any transitive partial dependencies, it is said to be in 3NF.

The amount of duplicate data present in a database is decreased with 3NF. Additionally, it is employed to ensure data integrity.

A relation is in 3NF if the non-prime attributes are not tranisitively dependant on any candidate keys.

A relation can be considered to be in 3NF if atleast any one of the following constraints for every non-trivial dependency given by A→B

* A is a super key

* B is part of some candidate key, i.e. B is a prime attribute.

Let us try to understand this with the help of an example

Consider the Following Table which has the address of the student in 3 differenct columns namely student_state, student_city and student_pincode.

| student_id | student_name | student_state | student_city | student_pincode |

|---|---|---|---|---|

| 101 | Ananya | Karnataka | Mysore | 570010 |

| 102 | Anitha | Karnataka | Bengaluru | 560010 |

| 103 | Ankitha | Tamilnadu | Chennai | 600010 |

| 104 | Aryan | Andhra | Tirupati | 517502 |

| 105 | Brijesh | Tamilnadu | Coimbatore | 641001 |

This table has the following super keys (set of keys that can recognise a tuple or a row of data uniquely).

{student_id}, {student_id,student_name},{student_id, student_name, student_state},.....so on.

{student_id} is the Candidate Key (an attribute that identifies the tuple uniquely). All the other attributes are non-prime attributes.

In this table we can see that student_state and student_city are dependant on student_pincode which is inturn dependant on student_id. This forms a transitive dependancy between the non prime attributes(student_state, student_city) and candidate key(student_id) and hence violating the third point given above. Hence, this relation is not in 3NF.

We can solve this by decomposing the table into the below tables

Student_Table

| student_id | student_name | student_pincode |

|---|---|---|

| 101 | Ananya | 570010 |

| 102 | Anitha | 560010 |

| 103 | Ankitha | 600010 |

| 104 | Aryan | 517502 |

| 105 | Brijesh | 641001 |

Student_Address Table

| student_pincode | student_state | student_city |

|---|---|---|

| 570010 | Karnataka | Mysore |

| 560010 | Karnataka | Bengaluru |

| 600010 | Tamilnadu | Chennai |

| 517502 | Andhra | Tirupati |

| 641001 | Tamilnadu | Coimbatore |

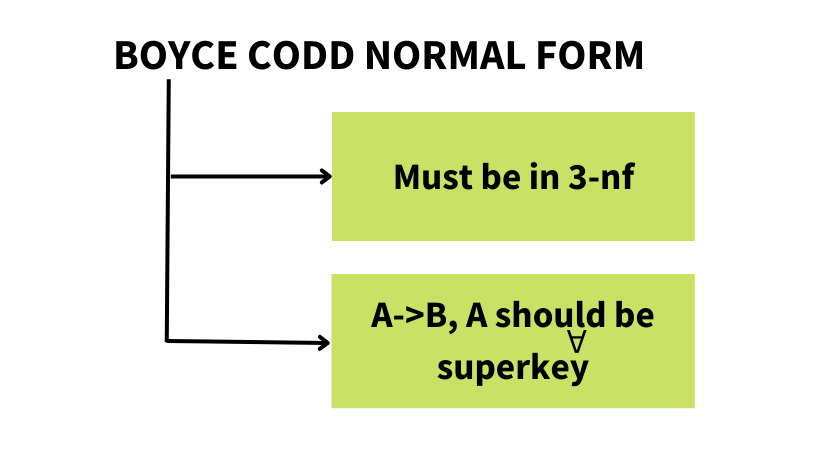

BCNF

Boyce Codd Normal Form, commonly known as BCNF, is a advanced version of 3NF. Compared to 3NF, BCNF performs strict computations to reduce data redundancy.

A relation is said to be in BCNF, if all the functional dependancies of the form A → B, has A as a Super Key of that relation.

It is a compulsion for the relation to be in 3NF and for every functional dependency the attribute/s on the LHS is the super key.

Let us try ot understand this with the help of an example. Consider a relation for teachers and the subjects taught by them represented as given below.

| teacher_id | teacher_dept | teacher_subject | subject_code | subject_credit_points |

|---|---|---|---|---|

| T101 | Information Science | Data Structures and Algorithms in Java | SB01 | 4 |

| T102 | Computer Science | Web Development | SB05 | 4 |

| T101 | Information Science | AI & ML | SB02 | 3 |

| T103 | Mechanical | Fluid Mechanics | SB04 | 4 |

| T102 | Computer Science | Big Data Analysis | SB03 | 3 |

In this table the functional dependencies present are

teacher_id → teacher_dept teacher_subject → {subject_code, subject_credit_points}

The candidate keys are => {teacher_id, teacher_subject}

This table is not in BCNF because both LHS in the functional dependencies(teacher_id or teacher_subject) are not super keys.

To solve this issue we break the table into three tables as given below

Teacher_Department Table

| teacher_id | teacher_dept |

|---|---|

| T101 | Information Science |

| T102 | Computer Science |

| T103 | Mechanical |

Subject_Details Table

| teacher_subject | subject_code | subject_credit_points |

|---|---|---|

| Data Structures and Algorithms in Java | SB01 | 4 |

| Web Development | SB05 | 4 |

| AI & ML | SB02 | 3 |

| Fluid Mechanics | SB04 | 4 |

| Big Data Analysis | SB03 | 3 |

Teacher_Subject Table

| teacher_id | teacher_subject |

|---|---|

| T101 | Data Structures and Algorithms in Java |

| T102 | Web Development |

| T101 | AI & ML |

| T103 | Fluid Mechanics |

| T102 | Big Data Analysis |

Now these tables have super keys in each of the functional dependencies. Hence, they are considered to be in BCNF.

4NF

Any relation is said to be in 4NF if and only if it is in BCNF and it contains no multivalued dependency.

Multivalued Dependency is a type of functional dependency when any two attributes in a table are not dependent on each other, but they are dependant on another 3rd attribute in the relation.

For example, consider a functional Dependency A → B. This dependency is multivalued if B has more than one value.

Let us understand 4NF with an example. Consider the table given below.

| student_id | student_subject | student_hobby |

|---|---|---|

| S011 | Science | Reading Books |

| S012 | English | Listening to music |

| S011 | Maths | Playing guitar |

| S013 | Biology | Watching movies |

| S014 | Civics | Cooking |

In the above table, student_subject and student_hobby are independant of each other, but, both of them are dependant on student_id. Hence, the above relation is not in 4NF.

This can be removed by decomposing the tables into two tables.

Student_Subject Table

| student_id | student_subject |

|---|---|

| S011 | Science |

| S012 | English |

| S011 | Maths |

| S013 | Biology |

| S014 | Civics |

Student_Hobby Table

| student_id | student_hobby |

|---|---|

| S011 | Reading Books |

| S012 | Listening to music |

| S011 | Playing guitar |

| S013 | Watching movies |

| S014 | Cooking |

5NF

A relation is said to be in 5NF if and only if it is in 4NF and it should be free of any join dependencies and there shouldn't be any losses while perfoming the join function.

The relation can be considered to be in 5nf if it has been broken down to as many tables as possible and it has passed through all the Normal Forms. This helps to diminish the data redundancy.

5NF is also called as Project-Join Normal Form, or PJNF

Let us try to understand this with the help of an example

| teacher_subject | teacher_name | semester |

|---|---|---|

| Maths | David | 2nd Sem |

| Maths | Arya | 2nd Sem |

| English | Arya | 2nd Sem |

| English | Ashwini | 3rd Sem |

| Science | Prateek | 2nd Sem |

According to the table above, Arya handles both maths and english classes for 2nd sem, but not for 3rd sem. To identify a valid data in this situation, a combination of all these fields is needed.

Consider a scenario in which we add a new semester say Semester 4 but are unsure of the subject or the students who will be enrolled in it, so we leave teacher_name and teacher_subject as NULL. We cannot, however, leave the other two columns empty because the three columns together function as a primary key.

Due to these issues the above relation is not in 5NF. This can be achieved by breaking the table down to three tables as given below.

Semester_Subject Table

| Semester | teacher_subject |

|---|---|

| 2nd Sem | Maths |

| 2nd Sem | English |

| 2nd Sem | Science |

| 3rd Sem | English |

Teacher_Subject Table

| teacher_subject | teacher_name |

|---|---|

| Maths | David |

| Maths | Arya |

| English | Arya |

| English | Ashwini |

| Science | Prateek |

Semester_Teacher Table

| teacher_name | semester |

|---|---|

| David | 2nd Sem |

| Arya | 2nd Sem |

| Arya | 2nd Sem |

| Ashwini | 3rd Sem |

| Prateek | 2nd Sem |

Joining the above tables together gives us the previous table. Hence there is lossless joining.

Advantages of Normalization

Normalization helps to solve many problems faced during database administration. Some of the advantages of Normalization are:

Redundancy of data is highly reduced due to Normalization

Since the relations are broken down into smaller units, organization of data becomes much easier

Normalization removes all the unwanted anomolies, hence data becomes more consistent.

The design of the database and the arrangement of data becomes more flexible.

Even thought the database undergoes a lot of changes in the way it is organised, relations between the data are still maintained and are integrate.

Disadvantages of Normalization

Though Normalization proposed many positive outcomes, it can also lead to many drawbacks. Some of them are:

The user requirements must be known before the construction of the database

As the process continues, normalization becomes tough in higher levels, like 4NF or 5NF. This leads to the degradation of performance.

Decomposition needs extra efforts to be put in because if the quality of decomposition decreases, the database design may be affected in a pretty bad way, this might propose greater problems than that were existent.

Conclusions

This concludes our discussion of "Normalization in DBMS" I sincerely hope that you learned something from it and that it improved your knowledge. You can consult the DBMS Tutorial if you want to learn more about DBMS.

Good luck and happy learning!

Frequently Asked Questions (FAQs)

In database management systems (DBMS), normalization is the process of arranging a database schema in order to reduce duplication, enhance data integrity, and increase query performance. A massive table must be divided into smaller, more manageable tables, and connections must be made between them. By ensuring that each piece of data is kept only once and minimizing data redundancy, normalization seeks to decrease anomalies and inconsistencies.

A thorough description of normalization in DBMS is provided below:

1. Data Redundancy: When the same data is duplicated throughout a database, redundancy results. Due to this redundancy, the database may be difficult to maintain and update and may waste storage space. By separating data into several tables, normalization seeks to get rid of or reduce redundancy.

2. Normal Forms: In most cases, normalization is accomplished by adhering to a set of guidelines known as normal forms. The First Normal Form (1NF), Second Normal Form (2NF), Third Normal Form (3NF), and Boyce-Codd Normal Form (BCNF) are the normal forms that are most frequently utilized. To reach a higher level of normalization, a number of requirements particular to each normal form must be satisfied.

3. Functional Dependencies: A database table's functional dependencies are the connections between its characteristics. They outline the relationships between the attributes in a table. Functional dependencies guide the decision of how to partition the table into smaller, more normalized tables by assisting in the identification of the key attributes.

4. Advantages of Normalization:

- Elimination of Data Redundancy: Normalization ensures that each data item is stored in only one location by removing redundant data. Data integrity is

improved and the likelihood of data discrepancies is decreased.- Increased Data Integrity: Normalization allows for the enforcement of data integrity restrictions including primary key and foreign key relationships. This guarantees the consistency and accuracy of the data.

- Simplified Database Maintenance: Because changes are restricted to fewer, more narrowly focused tables, normalized databases are simpler to update, insert, and delete data from. The upkeep of databases is made easier as a result.

- Better Query speed: By lowering the number of tables that must be queried and the amount of data that must be processed, normalization can improve query speed. It makes indexing and querying processes more effective.

5. Levels of Normalization: Each normal form denotes a higher level of normalization, with more stringent guidelines for table design. Increasing degrees of data integrity and a decrease in data redundancy are ensured by switching from lower to higher normal forms. Higher normal forms, however, can result in more intricate table linkages and joins, which could affect query performance and maintenance complexity. The degree of normalization used is determined by the database's particular requirements and intended use.

The practice of normalization is essential to database architecture since it helps to guarantee data accuracy, consistency, and effectiveness. Normalization enhances a database's overall quality and dependability by removing duplication and creating clear links across tables. Achieving a high level of normalization while taking into account the performance and maintenance implications must be balanced.

First Normal Form (1NF), Second Normal Form (2NF), Third Normal Form (3NF), and Boyce-Codd Normal Form (BCNF) are the four primary methods of database normalization. Each normalization form is a step-by-step progression in data organization, assisting in the eradication of anomalies and redundant data. Here is a description of each normalization type:

1. First Normal Form (first 1NF)): First Normal Form focuses on removing redundant data and arranging data into atomic value tables. A table must have a primary key that identifies each record in order to achieve 1NF, and each column can only hold atomic (indivisible) values. This form prevents repeated groups of data within a record and makes sure that each attribute in a table only has one value.

2. Second Normal Form (2NF): By addressing partial dependencies, Second Normal Form improves on First Normal Form. If a table is in 1NF and does not have any partial dependencies, it is in 2NF. When an attribute is dependent only on a portion of a composite primary key, this is known as a partial dependency. Non-key attributes that only partially rely on the primary key should be transferred to a different table with a foreign key relationship in order to achieve 2NF.

3. Third Normal Form (3NF): Third Normal Form addresses transitive dependencies, taking it a step further. If a table is in 2NF and doesn't have any transitive dependencies, it is in 3NF. When two attributes that are not a part of the main key are dependent on one another, this is known as a transitive dependency. Non-key attributes that rely on other non-key attributes should be transferred to a different table in order to achieve 3NF.

4. Boyce-Codd Normal Form (BCNF): The Boyce-Codd Normal Form is a sophisticated normalization method that addresses relationships that are more intricate. If every determinant—an attribute that influences another characteristic—in a table is a potential key, then the table is in BCNF. By checking for non-trivial functional connections between attributes, BCNF ensures that none exist. If a table has several candidate keys, additional decomposition may be necessary to satisfy BCNF requirements.

Higher normalization forms exist beyond BCNF, but they are less frequently used in practice (4NF, 5NF, and 6NF, for example). Depending on the particular database design needs, achieving higher levels of normalization can add complexity and may not always be necessary or possible.

Normalization lessens duplication, enhances query efficiency, and helps assure data integrity. Normalization lays the groundwork for a well-planned and effective database by arranging data into properly arranged tables and forging appropriate linkages. The complexity of the data and the particular requirements of the application using the database determine the normalization level to be used.

In the development of databases, normalization is used to reduce data duplication, enhance data integrity, and enhance query performance. It offers a methodical method for arranging data into neatly organized tables, minimizing data anomalies and inconsistencies. Here is a thorough justification for normalization:

1. Elimination of Data Redundancy: When the same information is kept in several locations within a database, redundant data is created. Redundancy consumes storage space and results in inconsistent data. By dividing tables into more manageable, smaller components, normalization helps eliminate or reduce data redundancy. Each piece of data is kept in a single location, minimizing the possibility of discrepancies and improving the effectiveness of data maintenance and updates.

2. Enhanced Data Integrity: Data accuracy, consistency, and reliability are all referred to as data integrity. By establishing connections between tables through primary key and foreign key restrictions, normalization aids in enforcing data integrity. Normalization guarantees that data is correct and consistent across the database by separating data into distinct tables and eliminating duplication. It stops update anomalies, such as data contradictions or inconsistencies, from happening when redundant data is changed in one location but not in another.

3. Improved Query Performance: Normalization helps make database queries run more quickly. Efficient indexing, join, and retrieval process is made possible by normalized data organization. Relationships between normalized tables are well established, which enables the database management system to improve query execution plans. Normalization decreases the quantity of data that has to be processed, resulting in faster and more effective query processing by minimizing data redundancy and assuring correct indexing.

4. Easier Database Maintenance: Normalization makes database upkeep easier. Modifications, changes, and data entry are made simpler by splitting up complicated tables into smaller, more targeted ones. Since normalized tables are less likely to experience update anomalies, data maintenance is easier. The system is more manageable and error-prone when changes to the database structure are limited to certain tables.

5. Scalability and Flexibility: Normalization helps databases be more flexible and scalable. Normalization makes it possible for effective data management and expansion as the database expands in size and complexity. It is possible to create new tables without impacting the data already present, and to change only a portion of the database without changing the system as a whole. This makes it simpler to modify the database to meet changing needs and take into account upcoming modifications.

6. Standardization and Consistency: Normalization ensures standardized and consistent data structure throughout the database by adhering to a set of rules and norms. It offers a systematic approach to database design, simplifying the database's understanding and use for developers and administrators. Normalization encourages excellent practices and aids in the long-term preservation of data integrity and consistency.

Overall, normalization helps databases be better in terms of quality, performance, and maintainability. It reduces data duplication, upholds data integrity, improves query speed, streamlines upkeep, supports scalability, and provides uniform data organization. Databases become more effective, dependable, and flexible to changing needs by adhering to normalization standards.

The complexity of the data, the application-specific needs, and the trade-offs between normalization and performance all play a role in determining the proper level of normalization for a database. Here is a step-by-step procedure to assist you in selecting the appropriate level of normalization:

1. Recognize the Information and Requirements:

Learn everything you can about the application's requirements and the data that has to be kept. Identify the system's constituent entities, properties, and relationships. Think about the database's objectives, the kinds of operations and queries that will be carried out, and any particular performance constraints.

2. Analyze the Dependencies: Look at how functionally related the attributes in the data are. Determine the primary key(s) and how additional qualities are related to the primary key(s). Find out if there are any transitive or partial dependencies that need to be addressed.

3. Apply the 1NF (First Normal Form):

Make sure the data is arranged into atomically-valued tables with a primary key for each table. Think about breaking them up into other tables if there are repeated groupings or multi-valued properties.

4. Assess the Second Normal Form:

Look for non-key qualities that only partially rely on the primary key. If so, add a foreign key relationship to the original database and relocate these properties to other tables.

5. Examine whether there are transitive connections between non-key properties using the Third Normal Form (3NF). Consider transferring an attribute to a different table if it depends on another non-key property rather than the main key directly.

6. Evaluate the BCNF (Boyce-Codd Normal Form):

Check to see whether there are any non-trivial functional relationships among the characteristics. If yes, make sure that each variable is a potential key. Decomposition may need to be done again if there are several candidate keys.

7. Fourth Normal Form (4NF) evaluation: Look for non-trivial multi-valued relationships between attributes. Consider transferring the dependant characteristics to a different table if there are any such dependencies.

8. Take Performance and Practicality into Account: Consider the effects that higher levels of normalization will have on performance. Higher normalization levels could necessitate more sophisticated queries and extra joins, which could affect query performance. Based on the complexity of the data and the work necessary for upkeep, determine if the chosen level of normalization is viable.

9. Strike a balance between performance and normalization: Maintain a healthy balance between performance and normalization. Overly tight normalization might result in more complex queries and significant performance problems, even though it does help to improve data integrity and minimize redundancy. When denormalization techniques are required to improve query performance, such as when adding calculated fields or summary tables, take them into consideration.

It's important to understand that normalization is not a one-sided strategy. The specific context and requirements of the database will determine the necessary amount of normalization. Aim for a level of normalization that protects data integrity and reduces redundancy while taking the application's practicality and performance requirements into account. As the application develops and requirements change, examine and alter the normalization level frequently.

A schema is the logical structure or blueprint that specifies the organization, relationships, and constraints of a database in the context of a database management system (DBMS). It outlines the general structure and organization of the data and offers a foundation for developing and maintaining database objects including tables, views, indexes, and relationships.

Here is a thorough explanation of a DBMS schema:

1. Schema Definition: A schema is a container for database items that determines the database's logical structure. It details the database's tables, properties, data types, relationships, and restrictions. The schema gives a broad overview of the database, outlining the structure rules and how the data is organized.

2. Schema components:

The schema is made up of a number of parts, including:

- Tables: These represent the major database entities and specify the format for storing data.

- Attributes: These are the specific data items that make up a table and describe the attributes and traits of the data.

- Relationships: These establish the connections between relevant data and define the associations between tables.

- Constraints: These impose regulations and limitations on the data, such as uniqueness, primary key, and foreign key constraints.

- Views: Views are virtual representations of the data from one or more tables that can be altered.

- Indexes: By generating effective lookup structures, they improve the performance of data retrieval.

3. Logical vs. Physical Schema: There are two other types of schemas: logical and physical.

- Logical Schema: The logical schema explains how the database is logically organized overall, including the relationships, constraints, and tables. Independent of the physical storage or implementation specifics, it concentrates on the conceptual view of the data.

- Physical Schema: The physical schema specifies how the database will really be stored and implemented, including the file structures, indexing systems, and access techniques. It deals with how data is physically represented on storage devices.

4. Database Instance: A database instance depicts a particular instantiation of a schema at a specific time. It is made up of the actual data kept in the database and reflects how the database is currently set up. The same schema can support multiple instances, each with their own set of data.

5. Schema Management: Schema management refers to the creation, modification, and deletion of database items specified inside the schema. These operations can be carried out by database administrators (DBAs) or authorized users to modify the schema in response to changing requirements or adjustments made to the data model.

6. Schema Security and Access Control: Within a DBMS, schemas also contribute to security and access control. The owner of the schema controls which users and roles have access to and control over the data included in the schema by granting and removing privileges. This promotes data integrity and helps enforce data security.

The DBMS's schema acts as a guide for setting up, administering, and organizing the database. Developers and administrators can establish and manage the structure of the database objects since it gives the data a logical representation. The schema outlines the columns, properties, relationships, and constraints that the database system will use to store, retrieve, and manipulate data.

To convert a table from the First Normal Form (1NF) to the Second Normal Form (2NF), you must first detect and address partial dependencies. When a non-key attribute is dependent on just a portion of the primary key, this is known as a partial dependency. You can get 2NF and get rid of partial dependencies by breaking the table up into distinct tables for the dependent characteristics. Here is a step-by-step tutorial on how to change 1NF into 2NF:

1. Recognize the 1NF Table: Examine the dependencies and structure of the 1NF table. The primary key and all other properties should be identified. Check for any partial dependencies, which occur when non-key attributes rely on only a portion of the primary key.

2. Determine Partial Dependencies: Determine which non-key qualities only partially depend on the primary key. These are the properties that need to be transferred to different tables since they cause partial dependencies.

3. Create New Tables: Make a new table for each set of dependent attributes. Include the primary key component(s) that correspond to the attribute(s) on which they depend. Each table will have a complete key dependency as a result of this.

4. Specify Primary and Foreign Keys: Specify the primary key in the old database that contains the properties that were not transferred to the new tables. Define foreign keys in the new tables that refer to the primary key in the old table. The connections between the new tables and the original table are established by these foreign keys.

5. Modify the Data: Transfer the relevant information from the old table to the newly generated tables. Make that the primary and foreign key relationships are used to link the data appropriately.

6. Update Queries and Applications: Adjust any queries, programs, or code that communicate with the table to take into account the normalization process' adjustments. Update the queries to incorporate the joins between tables that are required to retrieve the needed data.

7. Check for 2NF Compliance: Make sure each non-key attribute in the new tables depends on the complete primary key and not just a portion of it. Verify that no partial dependencies remain after the conversion by performing a comprehensive examination.

By removing partial dependencies, you can use this procedure to change a table from 1NF to 2NF. Separate tables for dependent attributes will be included in the resultant structure, allowing for better data organization, increased data integrity, and decreased redundancy. It is crucial to remember that normalization must be carried out properly and with consideration for the unique requirements and properties of the data.

Normalization is a database design technique that entails using a set of principles known as normal forms to minimize data redundancy, improve data integrity, and optimize database performance. First Normal Form (1NF), Second Normal Form (2NF), Third Normal Form (3NF), Boyce-Codd Normal Form (BCNF), and Fourth Normal Form (4NF) are the five most often utilized normal forms. Each normal form is explained below:

1. First Normal Form (1NF): First Normal Form defines the fundamental requirements for a well-formed relational database. It requires that each table have a primary key and that each column in the table contain atomic values (indivisible values). 1NF assures that each column in the table includes just one value, preventing repeating groups or multiple values in the same attribute. It serves as the basis for all future regular forms.

2. Second Normal Form (2NF): The Second Normal Form extends the First Normal Form by handling partial dependencies. It argues that if a table is in 1NF and every non-key attribute is totally functionally dependent on the entire primary key, it should be in 2NF. This means that each non-key attribute must rely on the complete primary key, rather than just a subset of it. If a non-key attribute is only partially dependent on the primary key, it should be relocated to a second table with a foreign key relationship to the original table.

3. Third Normal Form (3NF): Third Normal Form extends the concept of transitive relationships. It specifies that if a table is in 2NF and there are no transitive relationships between non-key attributes, it should be in 3NF. Transitive dependencies occur when one non-key attribute is dependent on another non-key attribute rather than the primary key directly. Non-key attributes that rely on other non-key attributes should be transferred to a separate table to achieve 3NF.

4. Boyce-Codd Normal kind (BCNF): This is a tighter kind of normalization that tackles functional dependencies in a table. It specifies that a table is BCNF if each determinant (attribute that determines another attribute) is a candidate key. In other words, no non-trivial functional connections should exist between characteristics. If a table has several candidate keys, further decomposition may be required to meet BCNF requirements.

5. Fourth Normal Form (4NF): This form deals with multi-valued dependencies. It claims that if a table is in BCNF and there are no non-trivial multi-valued dependents, it should be in 4NF. When a non-key attribute is dependent on a mixture of other non-key attributes, this is referred to as a multi-valued dependency. Multi-valued dependencies should be avoided to achieve 4NF by shifting the dependant characteristics to a separate table.

The goal of these standard forms is to avoid data duplication, increase data integrity, and ensure that each piece of data is recorded only once. Database designers can develop efficient, well-structured databases that minimize data anomalies and promote efficient query processing by following the criteria of normalization. Higher normal forms exist in addition to 4NF, however they are less usually employed in practice.

A set of recommendations or rules that specify how to arrange and structure data in a database to minimize redundancy, increase data integrity, and maximize database performance is known as the normalization principle. Normalization's major purpose is to construct well-structured, efficient databases that minimize data anomalies and ensure data consistency. The following are the key principles of normalization:

1. Atomicity: Each attribute in a database table should have atomic values, which means it should only have one value. This approach ensures that each piece of data is indivisible and prevents multiple values from being stored within a single attribute.

2. Redundancy Elimination: Redundancy occurs when the same data is stored in different locations within a database. The normalization principle seeks to eliminate or reduce repetition by organizing data into different tables and linking them through connections. This saves storage space and eliminates the danger of data discrepancies or contradictions.

3. Functional Dependency: Data in a database should have functional dependencies, which means that each non-key property should be dependent on the complete primary key, not just a subset of it. This approach assures that the main key may uniquely determine the value of each attribute, eliminating data anomalies and ensuring data integrity.

4. Normal Forms: The normalization concept follows a series of normal forms, which include the First Normal Form (1NF), Second Normal Form (2NF), and Third Normal Form (3NF). Each normal form specifies the principles and standards for organizing data and gradually enhancing data integrity and organization. Databases become more robust, well-structured, and efficient by conforming to certain standard standards.

5. Establishing Relationships: Relationships between tables are critical in normalization. The normalization concept stresses the establishment of appropriate links, such as primary key and foreign key associations, to link relevant data. These connections assure data integrity, impose referential integrity restrictions, and allow for quick data retrieval and manipulation.

6. Performance Considerations: While the goal of normalization is to improve data quality and structure, performance factors should also be considered. Complex joins and queries in highly normalized databases can have an influence on query performance. To suit the application's specific objectives, a balance of normalization and speed optimization is required.

Normalization ensures that databases are organized, efficient, and retain data integrity by adhering to these principles. It eliminates data abnormalities and reduces redundancy, laying the groundwork for optimal data management and application development.

Keys are essential in identifying and establishing relationships between tables in the context of database normalization. Keys are qualities or attribute combinations that uniquely identify records within a table. They protect data integrity, enforce referential integrity, and enable fast data retrieval and manipulation. Normalization employs numerous types of keys, including:

1. Primary Key: A primary key is an identifier that is unique to each record in a database. It uniquely identifies each row and ensures that no records are duplicated. A primary key might be made up of a single characteristic or a set of attributes. It serves as the foundation for developing relationships with other tables via foreign keys.

2. Foreign Key: A foreign key is a table attribute or collection of characteristics that relates to the primary key of another table. It creates what is known as a parent-child relationship between two tables. The child table's foreign key refers to the parent table's main key, forming a link between the linked data. Foreign keys maintain referential integrity by requiring data in the child table to reference data in the parent table.

3. Candidate Key: A candidate key is a set of properties that could potentially serve as a table's primary key. It meets the uniqueness criteria by uniquely identifying each record in the table. One of the candidate keys is chosen as the primary key. The remaining potential keys can serve as substitute keys or as unique constraints.

4. Composite Key: A composite key is a primary key made up of two or more properties. It uses a combination of properties to uniquely identify records in a table. When a single attribute cannot uniquely identify records, but a mix of attributes does, composite keys are beneficial. The composite key ensures that the value combination is unique.

5. Super Key: A super key is a collection of qualities that can be used to uniquely identify records in a database. It may have more qualities than are required for uniqueness. A super key may have features that aren't required for uniquely identifying records. It is a more comprehensive identification technique than the primary key.

6. Surrogate Key: A surrogate key is a randomly generated unique identifier that is assigned to each record in a table. It is usually an integer value or a value generated by the system that has no intrinsic meaning. Surrogate keys are useful when no natural key is available to uniquely identify records. They make database management easier while also ensuring uniqueness.

Normalization keys are required for data identity, integrity, and relationship establishment. They allow for quick data retrieval, maintain referential integrity, and ensure that each entry in a table is uniquely recognized. A well-designed database may ensure data consistency and assist effective data management by utilizing primary keys, foreign keys, candidate keys, composite keys, super keys, and surrogate keys.

Indexing is a technique used in a database management system (DBMS) to improve the performance of data retrieval operations by constructing a separate data structure called an index that enables for efficient access to specific data within a table. An index is effectively a replica of a table's selected columns or attributes, together with a pointer to the table data. Here's a full description of indexing in relational databases:

1. Indexing's major Purpose: Indexing's major purpose is to speed up data retrieval activities, particularly when looking for specific values or ranges of values inside a database. Without an index, the DBMS must scan the entire database to find the desired data, which can be time-consuming and resource-intensive, particularly for big tables. Indexing allows direct access to specific rows based on the indexed values, making data retrieval faster and more efficient.

2. Index Structure: An index is often implemented as a separate data structure, such as a B-tree, hash table, or bitmap, that holds the indexed values as well as pointers to the real table records. The index structure is designed to facilitate efficient searching, insertion, and deletion operations.

3. Indexing Columns: In a database management system, columns that are often utilized in search conditions, join operations, or sorting are suitable candidates for indexing. Indexing can be done on a single column (single-column index) or on many columns (composite index). The choice of indexed columns is determined by the queries and actions performed on the data.

4. Index Creation and Maintenance: Using the relevant DBMS commands or tools, indexes are formed on one or more columns in a table. The index must be updated whenever new data is inserted, changed, or deleted from the table. This maintenance expenditure should be taken into account when determining which columns to index, as frequent updates to indexed columns might have an influence on performance.

5. Indexing Advantages:

- Reduced Disk I/O: Indexing reduces the amount of data that must be read from disk, which results in faster query performance.

- Efficient Join Operations: Indexes make join operations more efficient by enabling quick access to matching values in related tables.

- Sorting and Ordering: By keeping data in a pre-sorted manner, indexes help speed up sorting and ordering procedures.

- Constraint Enforcement: Indexes automatically enforce unique and primary key constraints, maintaining data integrity.

6. Indexing Considerations:

- Selective Indexing: Indexes should be established on columns that have a high selectivity, which means they have a wide range of different values. Indexing on low selectivity columns may not deliver considerable performance gains.

- store Overhead: Indexes take up extra store space, which can be an issue for large databases. It is important to weigh the benefits of indexing against the storage overhead.

- Query Optimization: Based on variables such as query complexity, cardinality estimations, and cost-based analyses, the DBMS query optimizer evaluates whether to employ an index. To take advantage of indexing, it is critical to evaluate query execution plans and optimize queries.

Indexing in DBMS is a strong approach that increases data retrieval performance by providing quick access to specific data within a table. The DBMS may efficiently identify and retrieve data by using indexes on appropriate columns, resulting in faster query execution and improved overall system performance.

Both the Boyce-Codd Normal Form (BCNF) and the Third Normal Form (3NF) are advanced levels of database normalization aimed at removing anomalies and ensuring data integrity. While BCNF and 3NF have certain similarities, they also have some significant variances. Here's a breakdown of each:

BCNF is a higher degree of normalization than 3NF and addresses a specific sort of dependency known as functional dependency. Every determinant (attribute or collection of characteristics that determines the values of other attributes) in a table, according to BCNF, must be a candidate key. In other words, no non-key characteristic should be operationally dependent on any candidate key's subset.

BCNF removes inconsistencies caused by non-trivial functional dependencies. It ensures that the candidate keys are used for all functional dependencies within a table. A table achieves the highest level of normalization in terms of functional dependency by adhering to BCNF. Other sorts of dependencies, such as multivalued dependencies and join dependencies, are not addressed by BCNF.

Third Normal Form (3NF): Although 3NF provides less normalization than BCNF, it nevertheless makes considerable advantages in data organization and integrity. 3NF expands on the notions of 1NF and 2NF by focusing on the elimination of transitive dependencies. It asserts that every non-key attribute in a table must be functionally dependent on the primary key, and there should be no transitive dependencies.

Transitive dependencies occur when one non-key attribute is dependent on another non-key attribute rather than the primary key directly. Such dependencies are avoided in 3NF by relocating the dependent attribute(s) to a separate table. As a result, the original table has only direct dependencies on the primary key, which improves data integrity and reduces redundancy.

The following are the distinctions between BCNF and 3NF:

1. Dependency Types: BCNF deals with functional dependencies, whereas 3NF deals with transitive dependencies.

2. Candidate Keys: BCNF demands that every determinant be a candidate key, whereas 3NF does not. 3NF only ensures that non-key attributes rely on the primary key for functionality.

3. Scope: BCNF is a stricter version of normalization than 3NF because it removes any non-trivial functional dependencies. 3NF focuses on eliminating transitive dependencies in particular.

In practice, both the BCNF and the 3NF seek to improve data integrity and reduce anomalies. The decision between BCNF and 3NF is determined by the features and requirements of the data to be modeled. BCNF is often used when there are complicated functional relationships, whereas 3NF is appropriate for the majority of applications and provides a good mix of normalization and performance.

Standardization, also known as Z-score normalization, is a data preprocessing technique that converts numerical data into a conventional normal distribution with a mean of 0 and a standard deviation of 1. It is extensively used in statistical analysis and machine learning techniques to normalize and scale data. Here is a more in-depth explanation of Z-score normalization:

1. Z-score calculation: To calculate the Z-score for a data point, subtract the mean of the dataset from the data point and then divide the result by the dataset's standard deviation. The formula is as follows:

Z = (X - μ) / σ

Where:

- Z is the data point's Z-score

- X is the data point's value.

- μ denotes the dataset's mean

- σ denotes the dataset's standard deviation

2. Z-score Normalization Process: The following phases are included in the Z-score normalization process:

a. Determine the dataset's mean () and standard deviation ().

b. To calculate the deviation from the mean, subtract the mean from each data point.

c. To get the Z-score for each data point, divide each deviation by the standard deviation.

d. The dataset will have a mean of 0 and a standard deviation of 1 after normalization.

3. Function of Z-score Normalization: Z-score normalization has two basic functions:

a. Comparison: Z-score normalization allows for meaningful comparisons between variables by putting data into a conventional normal distribution. It eliminates scale and unit disparities, allowing for fair comparisons based on relative distribution positions.

b. Outlier Detection: Z-score normalization makes it easier to identify outliers in a dataset. Potential outliers are data points with Z-scores that are significantly above or below a given threshold (e.g., 3).

4. Advantages of Z-score Normalization:

- Standardization: Z-score normalization guarantees that data from disparate sources or scales is brought to a similar scale, making comparison and analysis easier.

- Scale Bias Elimination: It eliminates any bias produced by variable scale, guaranteeing that no variable dominates the analysis only because of its magnitude.

- Compatibility with Statistical Techniques: Many statistical techniques and machine learning algorithms are predicated on the assumption that the data is regularly distributed. Z-score normalization prepares data for these procedures by ensuring variable normalcy and equal weighting.

5. Considerations:

- Z-score normalization is appropriate for data with a roughly normal distribution. Alternative normalizing approaches may be more suited if the data is severely skewed or contains outliers.

- The shape and properties of the data distribution are affected by Z-score normalization. It has no effect on the relative order or relationships of data points, but it can have an effect on the interpretation of individual data values.

Z-score normalization is a popular method for normalizing numerical data. It enables meaningful comparisons, aids in the discovery of outliers, and provides interoperability with various statistical approaches and machine learning algorithms. Z-score normalization is a significant pretreatment step in data analysis and modeling activities by translating data to a conventional normal distribution.