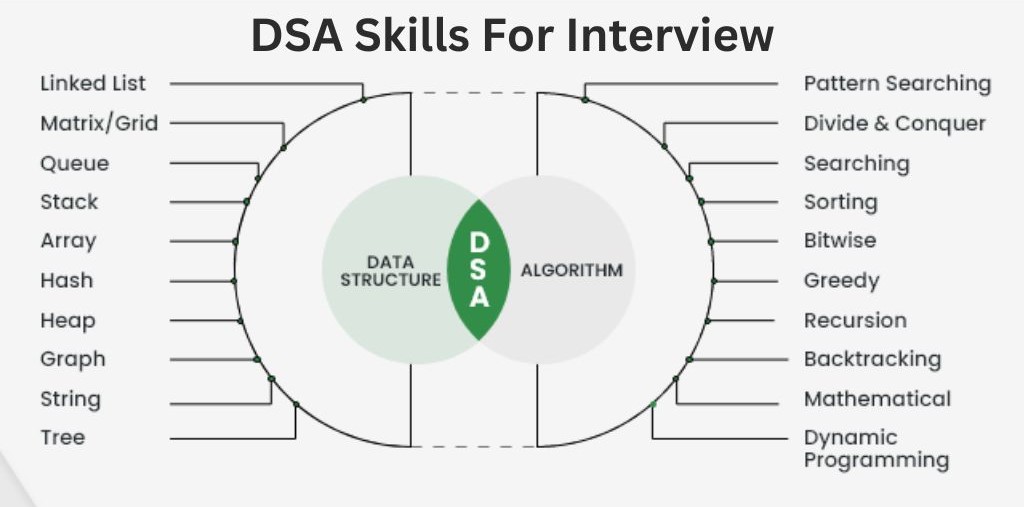

Computer Science graduates often undervalue data structures and algorithms, dismissing them as complicated, irrelevant or a waste of time. However, they soon get a reality check when they start job hunting. Big companies like Amazon, Google and Microsoft often ask questions about data structures and algorithms to assess candidates' problem-solving abilities. Data structures and algorithms (DSA) questions offer a simple way to evaluate a candidate's problem-solving skills, coding skills and clarity of thought.

Knowledge of data structures and algorithms is a key criterion for identifying strong programmers, which gives tech enthusiasts yet another reason to upskill.

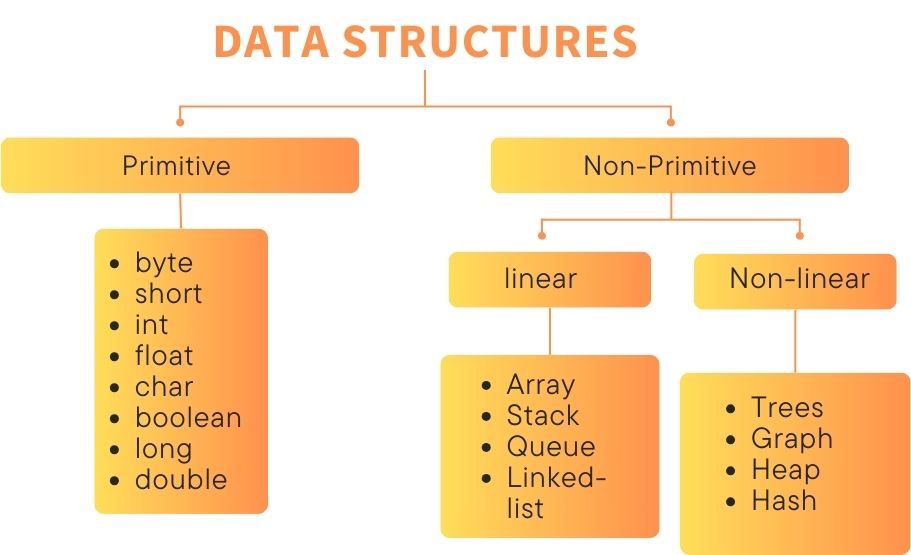

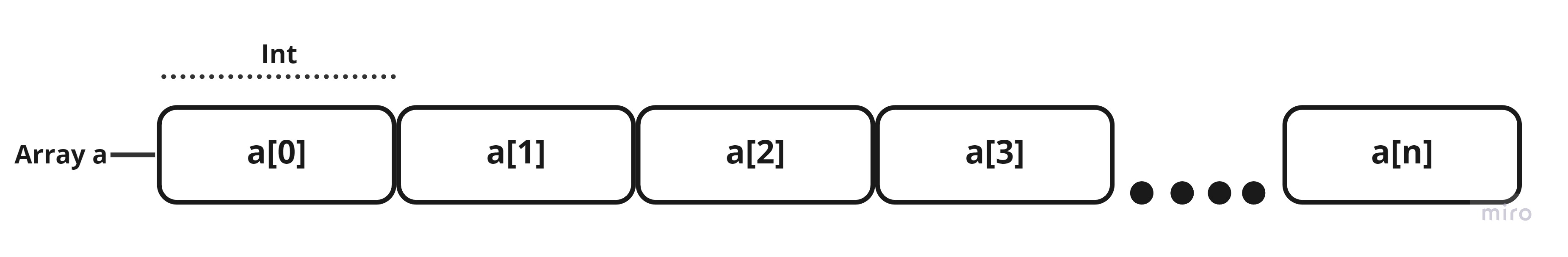

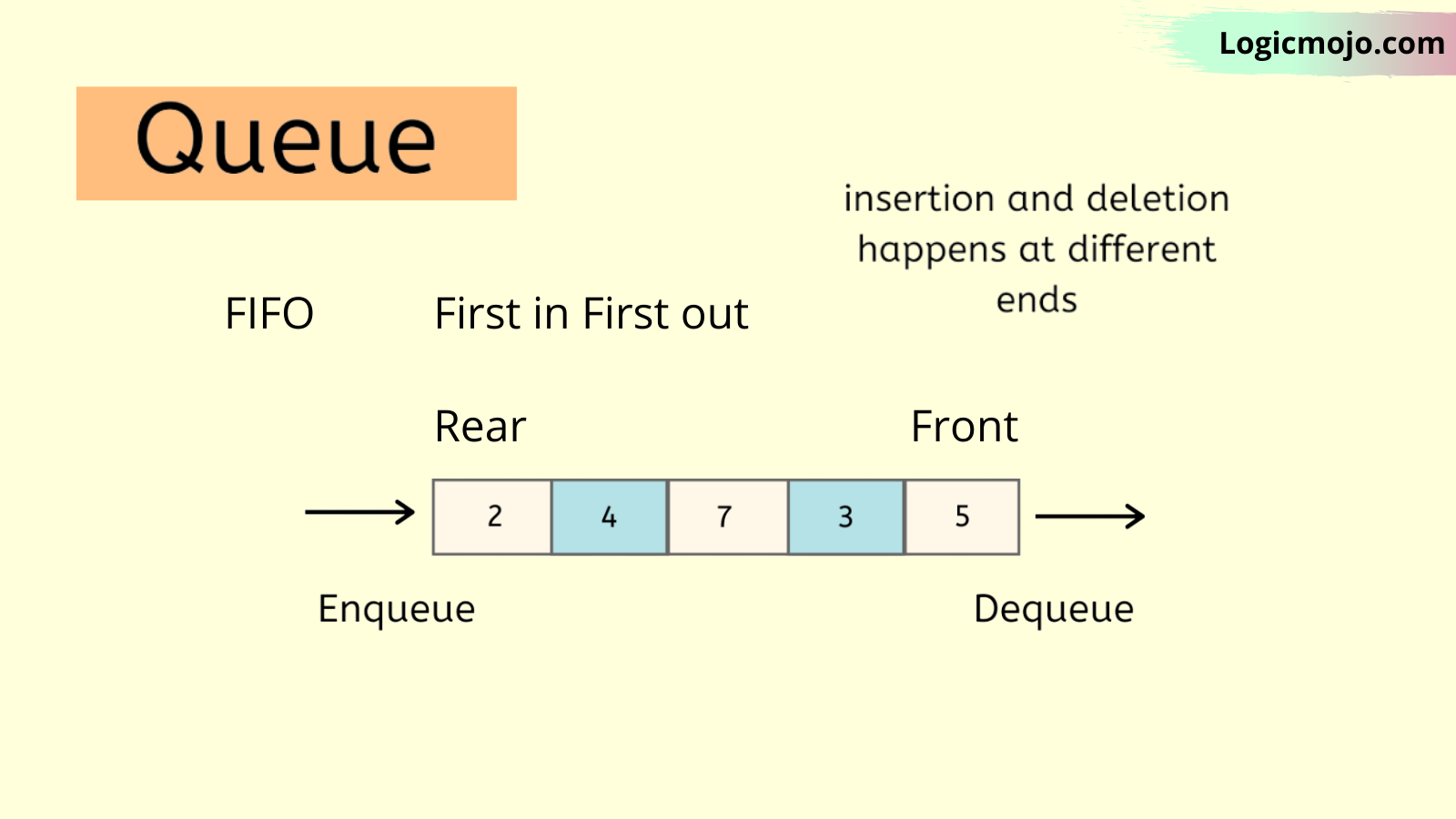

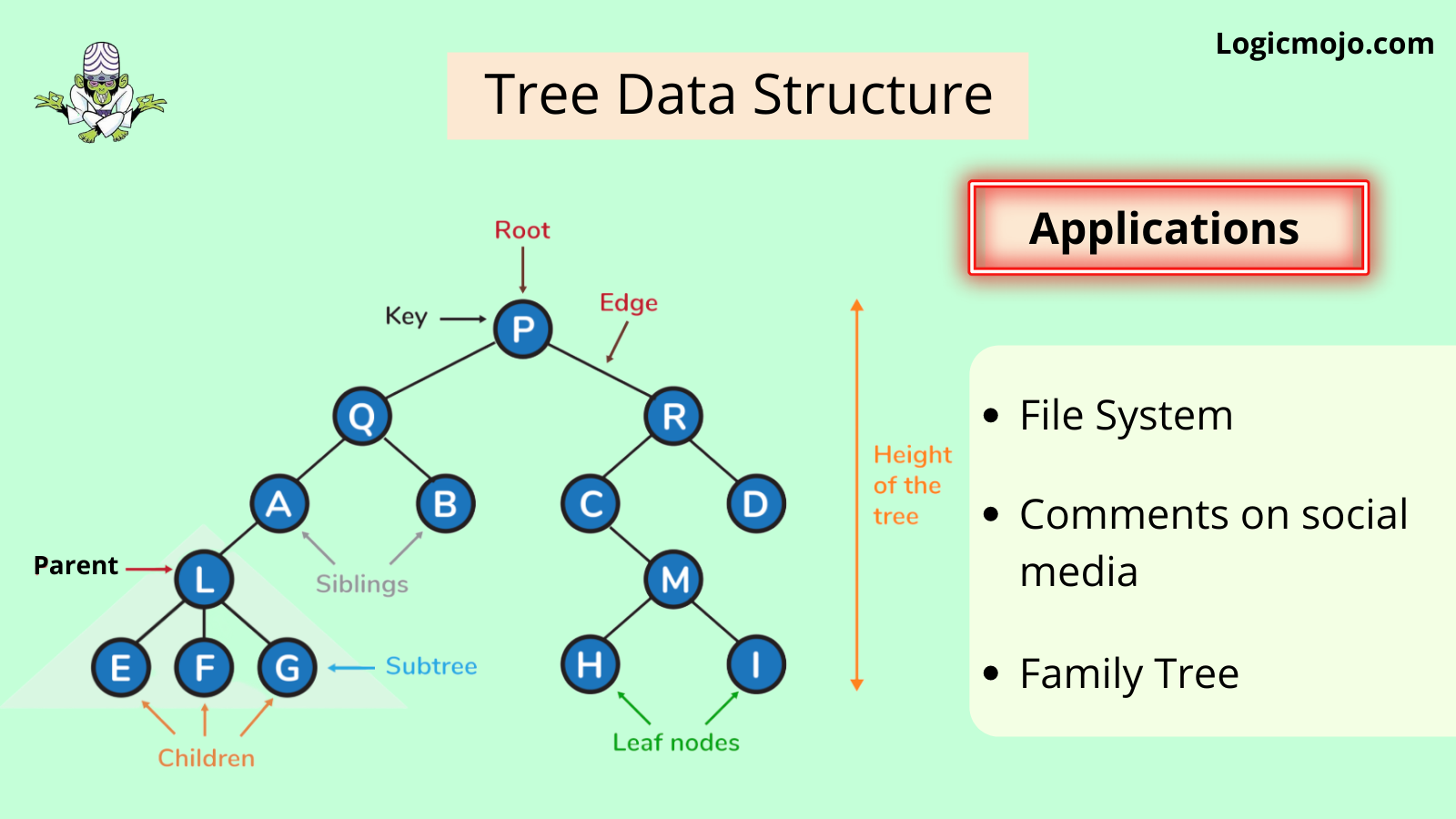

Data structures range from simple to complex; each is designed to organize data in a way that suits a particular task. Fundamentally, data structures aim to present information in a way that is understandable and accessible to both machines and people.
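To illustrate how the way data is organized determines what it is good for, here is a minimal sketch in Python. It stores the same IDs in a list and in a set and checks membership in each. The function names and sample data are hypothetical and were chosen only for illustration.

```python
# Hypothetical example: the same IDs organized as a list and as a set.

def contains_in_list(items: list[int], target: int) -> bool:
    # A list preserves insertion order, but a membership check has to
    # scan the elements one by one: O(n) time in the worst case.
    for item in items:
        if item == target:
            return True
    return False


def contains_in_set(items: set[int], target: int) -> bool:
    # A set is hash-based, so a membership check takes O(1) time on average.
    return target in items


user_ids_list = [42, 7, 19, 88]
user_ids_set = set(user_ids_list)

print(contains_in_list(user_ids_list, 19))  # True
print(contains_in_set(user_ids_set, 19))    # True
print(contains_in_set(user_ids_set, 5))     # False
```

The two versions return the same answers. The list keeps elements in order, which matters when order is meaningful. The set gives up ordering in exchange for fast lookups, which makes it the better fit when the main task is answering "is this item present?".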