Big Data Analytics


- Introduction

- What is Big Data Analytics?

- Why is Big Data Analytics Important?

- What is Big Data?

- Big data analytics uses and examples

- History and evolution of Big Data Analytics

- How Does Big Data Analytics Work?

- Key types of Big Data Analytics

- Benefits & Advantages of Big Data Analytics

- Tools and Technology used in big data analytics

- Real-life Big Data Analytics Examples

- Big data analytics challenges

- Future Trends in Big Data Analytics

- Ethical Considerations in Big Data Analytics

- Tips for Successful Big Data Analytics Projects

- Conclusion


Logicmojo - Updated Jan 2, 2025


Introduction

Big Data is getting a lot of attention, and for good reason. However, what Big Data actually is, and how to analyze it, often remains unclear. The term refers to more than just the volume of data generated. It covers the massive, continuously growing amounts of data in many formats, and also the methods, tools, and approaches used to extract insights from that data. Most importantly, Big Data analytics helps businesses solve problems that traditional methods and tools cannot.

Big data analytics is responsible for some of the biggest industry breakthroughs in the world today, whether they are applied in the government, banking, healthcare, or other sectors.

Big data analytics is the application of advanced analytics to enormous collections of structured and unstructured data in order to generate important insights for enterprises. It is widely used in industries including health care, education, insurance, artificial intelligence, retail, and manufacturing to learn what works and what doesn't, so that processes, systems, and profitability can be improved.

As businesses and organizations continue to move to digital operations, the volume of data they generate, and the importance of analyzing it, is expected to grow.

This article explores the world of Big Data Analytics: its significance, applications, challenges, and future prospects.

What is Big Data Analytics?

Big Data analytics is the process of uncovering important insights in large datasets, such as hidden correlations and patterns, market trends, and consumer preferences. Its benefits include better decision-making and more effective fraud detection and prevention.

With this information, companies can make plans quickly and efficiently and maintain their competitive advantage. Businesses can use business intelligence (BI) tools and systems to collect structured and unstructured data from a variety of sources. Input from users (usually employees) helps these tools shed light on corporate performance and operations. Big data analytics relies on four main types of analysis (descriptive, diagnostic, predictive, and prescriptive) to find significant insights and develop solutions.

This discipline keeps evolving as data engineers look for ways to integrate the enormous volumes of complex information produced by sensors, networks, transactions, smart devices, web usage, and more. To uncover more sophisticated insights and scale them up, big data analytics techniques are increasingly combined with technologies such as machine learning.

Why is Big Data Analytics Important?

Big data analytics may appear straightforward, but it actually involves several distinct processes. Big data is characterized by high volume, velocity, and variety, and big data analytics techniques turn these large volumes into useful business information. Analytics is necessary because data is being produced at an extraordinary rate and every organization needs to make sense of it. By one recent estimate, about 328.77 million terabytes of data are created every day.

Organizations can harness their data and use big data analytics to find new opportunities. This leads to smarter business decisions, more efficient operations, greater profitability, and happier customers. Businesses that combine big data with sophisticated analytics benefit in a variety of ways, including:

Reducing cost: When it comes to storing vast amounts of data (for instance, a data lake), big data technologies like cloud-based analytics can drastically lower expenses. Additionally, big data analytics assists businesses in finding ways to operate more effectively.

Making faster, better decisions: In-memory analytics is fast, and businesses can now analyze new data sources such as streaming data from IoT devices. Together, these let businesses evaluate information quickly and make fast, well-informed decisions.

Developing and marketing new products and services: Businesses may give customers what they want, when they want it by using analytics to determine their demands and level of satisfaction. Big data analytics gives more businesses the chance to create cutting-edge new goods that cater to the shifting wants of their clients.

What is Big Data?

Big Data is a term used to describe vast collections of structured, unstructured, and semi-structured data that are continuously produced at a rapid rate and in large volumes. A growing number of businesses use this data to gain insight and improve their decision-making, but it cannot be stored and analyzed with conventional data storage and processing tools.

There are currently millions of data sources around the world producing data at an incredibly fast rate. Social media platforms are among the largest of them. Facebook, for example, has been reported to generate more than 500 terabytes of data per day, including photos, videos, text, and other types of data.

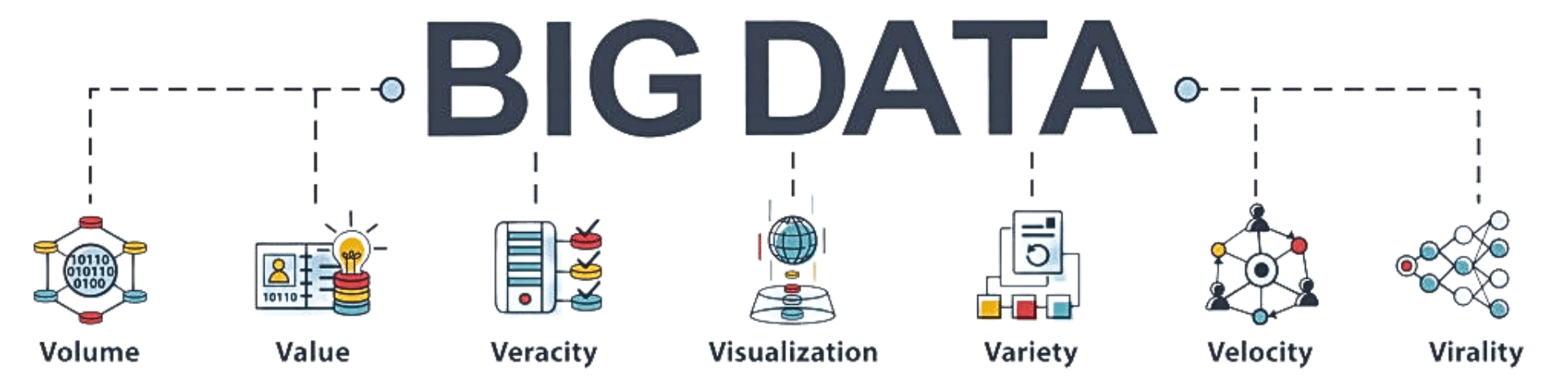

Characteristics of Big Data

Big data can be conceptually difficult for businesses to grasp, and big data projects frequently fail. The characteristics of big data are usually summarized as the four Vs:

Volume:

Volume is what makes "Big Data" "big". It refers to terabytes or petabytes of data from many sources, including Internet of Things (IoT) devices, social media, text files, and corporate transactions. To put this in perspective, 1 petabyte equals 1,000,000 gigabytes. Streaming a single HD movie on Netflix uses roughly 4 gigabytes, so 1 petabyte could hold about 250,000 such movies. Big data, moreover, typically involves thousands or even millions of petabytes, not just one.
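The storage arithmetic above can be checked with a few lines of Python. This sketch assumes decimal (SI) units, where 1 GB = 10^9 bytes and 1 PB = 10^15 bytes, and takes the 4 GB movie size from the estimate in the text.

```python
# Decimal (SI) units, matching the figures in the text.
GB = 10**9   # bytes in a gigabyte
PB = 10**15  # bytes in a petabyte

movie_size_bytes = 4 * GB  # assumed size of one HD movie, per the estimate above

gb_per_pb = PB // GB                    # how many gigabytes fit in a petabyte
movies_per_pb = PB // movie_size_bytes  # how many 4 GB movies fit in a petabyte

print(f"1 PB = {gb_per_pb:,} GB")                      # 1 PB = 1,000,000 GB
print(f"1 PB holds about {movies_per_pb:,} movies")    # 1 PB holds about 250,000 movies
```

With binary units (1 PiB = 2^50 bytes) the numbers come out slightly different, so it helps to say which convention a capacity figure uses.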

Veracity:

Veracity refers to how accurate and trustworthy data is. When data is entered and processed by different systems, accuracy problems arise. For instance, if several entries contain the same data but different dates and timestamps, it can be difficult to tell which record is correct.

Similarly, incomplete data that goes unnoticed can lead to system errors. Big data systems therefore need concepts, methodologies, and tools to address the veracity challenge.
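One common way to handle the conflicting-timestamp case is "last write wins": keep the most recent version of each record, and flag incomplete records for review instead of using them silently. The Python sketch below illustrates that policy. The records, field names, and the choice of last-write-wins are all assumptions for illustration. Other systems prefer a trusted source or merge the fields instead.

```python
from datetime import datetime

# Hypothetical records for the same customers arriving from two source systems.
records = [
    {"customer_id": 1, "email": "a@example.com", "updated_at": "2024-03-01T10:00:00", "source": "crm"},
    {"customer_id": 1, "email": "a.new@example.com", "updated_at": "2024-03-05T09:30:00", "source": "web"},
    {"customer_id": 2, "email": None, "updated_at": "2024-03-02T12:00:00", "source": "crm"},
]

def resolve_latest(rows):
    """Keep one record per customer: the one with the most recent timestamp."""
    latest = {}
    for row in rows:
        ts = datetime.fromisoformat(row["updated_at"])
        key = row["customer_id"]
        if key not in latest or ts > latest[key][0]:
            latest[key] = (ts, row)
    return [row for _, row in latest.values()]

def find_incomplete(rows, required=("email",)):
    """Return records missing any required field so they can be reviewed, not silently used."""
    return [row for row in rows if any(row.get(field) is None for field in required)]

resolved = resolve_latest(records)
incomplete = find_incomplete(resolved)
```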

Velocity:

The velocity of data is the rate at which it is generated and processed. Examples range from batch reporting to near-real-time or real-time processing and data streaming. Ideally, data is processed as fast as it is generated. Take the transportation industry as an example. By one commonly cited estimate, a single car that is connected to the Internet and equipped with a telematics device generates around 25 gigabytes of data per hour at a near-constant rate. Much of this data must be processed in real time or near real time.

Variety:

Besides data from many source systems, big data environments can store data that was previously never logged or was overwritten, such as record updates and change history. Keeping this data enables new use cases, such as time-series analytics, that would be difficult if old values were simply overwritten.

New sources also produce massive amounts of data. Simple examples include data from social media or smartphone apps, which provides new insights into customer interactions. Such data ranges from unstructured social media text to structured operational data from business systems. It also includes financial time-series data, commit logs, app usage data, and semi-structured customer interaction data.

Knowing the main characteristics, you can see that not all data can be called Big Data.

Aside from the sheer volume of data, the complexity of the data being collected poses issues in the configuration of data architectures, data management, integration, and analysis. However, firms that combine unstructured data sources such as social media material, video, or operations logs with existing structured data such as transactions can add context and provide new, and often richer, insights for improved business results.

Big data analytics uses and examples

Big Data analytics can be applied in a variety of ways to improve businesses and organizations. Here are a few examples:

• Understanding customer behavior to improve the customer experience

• Forecasting future trends to support better corporate decision-making

• Understanding what works and what doesn't in marketing strategies in order to improve them

• Increasing operational effectiveness by identifying and eliminating bottlenecks

• Detecting fraud and other forms of abuse earlier

These are but a few examples; when it comes to big data analytics, the options are almost limitless. It all depends on how you want to use it to advance your company.

History and evolution of Big Data Analytics

• Data analytics has actually been around for quite some time. In the 1950s, long before anyone had heard the term big data, businesses were already using basic analytics: numbers in a spreadsheet that had to be analyzed manually to identify insights and trends.

• Big Data analytics may be traced back to the early days of computing, when businesses began employing computers to store and analyze massive volumes of data. However, it wasn't until the late 1990s and early 2000s that Big Data analytics really took off, as businesses increasingly resorted to computers to help them make sense of the constantly rising volumes of data created by their operations.

• Big data, the growth of structured and unstructured data, has fundamentally transformed the role of business intelligence (BI) by turning data into action and bringing value to the organization. Big data analytics has greater potential to unearth useful insights across the business, but it has also introduced new problems in capturing, storing, and accessing information.

• Because of the exponential expansion in the amount, diversity, and velocity of data creation and change in the era of big data analytics, BI difficulties have grown. This trend has increased the demands on data storage and analytics tools, bringing new issues for enterprises. However, it also opens up new avenues for leveraging big data analytics for competitive advantage.

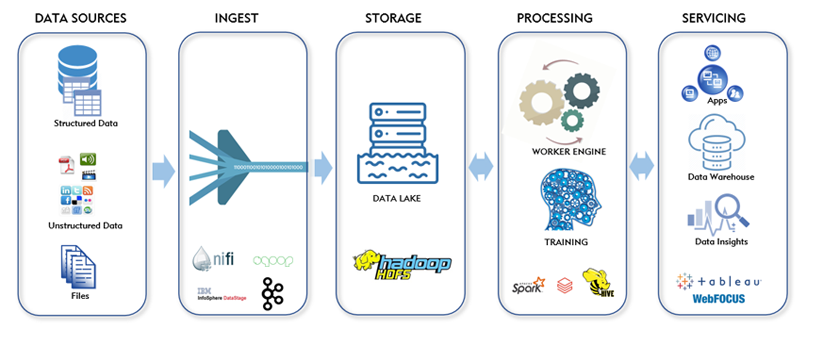

How Does Big Data Analytics Work?

Big Data Analytics is a powerful process that helps organizations extract valuable insights from vast amounts of data. This section explains how it works, step by step.

At its core, Big Data Analytics involves several stages that work together to make sense of large and complex data sets:

1. Data Collection:

In the first stage, data experts gather data from a wide range of sources, often combining semi-structured and unstructured data. Each business uses its own mix of data streams, such as:

• social media platforms,

• internet clickstream data,

• cloud and mobile applications,

• IoT sensors,

• customer interactions, and more.

These sources produce enormous volumes of data, and collecting them is the initial step in the Big Data Analytics process.

2. Data Storage & Processing:

Once gathered, the data is stored in specialized repositories such as data warehouses or data lakes. These storage systems are designed to hold massive amounts of data and make it easy to access for later analysis. Groups of processing engines, known as compute layers, carry out computations on the data, while client layers are where data management operations take place.

With data stored, the next step is processing. This involves using various tools and algorithms to prepare the data for analysis. During this stage, raw data is cleaned, transformed, and organized in a way that ensures it is ready for further examination.

Data processing becomes harder for organizations as the amount of available data grows exponentially. One option is batch processing, which analyzes large chunks of data collected over a period of time. It works well when a longer gap between data collection and analysis is acceptable. The other option is stream processing, which analyzes data in small batches or record by record as it arrives. This shortens the time between collection and analysis so that decisions can be made sooner. However, stream processing is typically more complex and expensive.
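The difference between the two processing styles can be shown on a small scale. This Python sketch computes a total of hypothetical order amounts in two ways. The batch version waits for the whole list and computes once. The streaming version updates a running result as each value arrives. Real systems use engines such as Spark or Kafka-based pipelines for this, and the function names here are illustrative only.

```python
from typing import Iterable, Iterator, List

def batch_total(events: List[float]) -> float:
    """Batch processing: wait until the whole chunk is collected, then compute once."""
    return sum(events)

def stream_totals(events: Iterable[float]) -> Iterator[float]:
    """Stream processing: update the result incrementally as each event arrives."""
    running = 0.0
    for value in events:
        running += value
        yield running  # an up-to-date result is available after every event

sales = [12.5, 30.0, 7.5, 50.0]  # hypothetical order amounts
print(batch_total(sales))          # 100.0
print(list(stream_totals(sales)))  # [12.5, 42.5, 50.0, 100.0]
```

Both approaches reach the same final answer. The streaming version simply makes intermediate answers available earlier, which is what enables quicker decisions.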

3. Data Cleaning:

Data of any size must be thoroughly cleaned to ensure the highest quality and most accurate results. Put simply, data cleansing means checking the data for errors, duplicates, inconsistencies, redundancies, incorrect formats, and similar problems. This verifies that the data is usable and relevant for analytics. Inaccurate or irrelevant data must be removed or accounted for. A variety of data quality tools can identify and fix these issues in a dataset.
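Here is a minimal Python sketch of these cleaning steps on a few hypothetical raw rows. It normalizes formats (trimmed, upper-case country codes, numeric amounts, ISO dates). It sets aside rows with invalid values for review instead of silently dropping them. It then removes records that become exact duplicates after normalization. The field names and the choice to accept only ISO `YYYY-MM-DD` dates are assumptions for illustration.

```python
from datetime import datetime

raw = [
    {"id": "001", "country": " us ", "amount": "19.99", "date": "2024-01-05"},
    {"id": "001", "country": "US", "amount": "19.99", "date": "2024-01-05"},  # duplicate once normalized
    {"id": "002", "country": "de", "amount": "abc", "date": "2024-01-06"},    # invalid amount
    {"id": "003", "country": "FR", "amount": "5", "date": "06/01/2024"},      # non-ISO (ambiguous) date
]

def clean(rows):
    """Normalize formats, reject invalid rows, and drop duplicates."""
    seen = set()
    cleaned, rejected = [], []
    for row in rows:
        try:
            record = {
                "id": row["id"].strip(),
                "country": row["country"].strip().upper(),
                "amount": float(row["amount"]),
                "date": datetime.strptime(row["date"], "%Y-%m-%d").date(),
            }
        except ValueError:
            rejected.append(row)  # keep bad rows for review instead of silently dropping them
            continue
        key = tuple(record.values())
        if key in seen:
            continue  # exact duplicate after normalization
        seen.add(key)
        cleaned.append(record)
    return cleaned, rejected

cleaned, rejected = clean(raw)
```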

4. Data Analysis:

Now comes the heart of Big Data Analytics. In this stage, statistical and machine learning techniques are applied to the prepared data to identify patterns, trends, and meaningful insights hidden within it. By analyzing the data, businesses can gain a deeper understanding of their customers, market trends, and operational efficiency. Common big data analysis techniques include:

• Data mining searches vast datasets for patterns and relationships by detecting anomalies and forming data clusters.

• Natural language processing (NLP) is the field of computer science that enables computers to understand and act upon spoken or written human language.

• Predictive analytics makes forecasts about the future based on an organization's historical data, detecting upcoming dangers and opportunities.

• Deep learning imitates the way humans learn by layering algorithms (artificial neural networks) to find patterns in the most complex and abstract data.
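To make "detecting anomalies" concrete, here is a small Python sketch that flags unusual values using the modified z-score, which is based on the median absolute deviation (MAD). This is one common anomaly-detection technique among many. It is chosen here because a single extreme value does not distort the median the way it distorts the mean. The 3.5 cutoff is a conventional rule of thumb, and the transaction counts are hypothetical.

```python
import statistics

def find_anomalies(values, threshold=3.5):
    """Flag values whose modified z-score (median/MAD based) exceeds the threshold."""
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        return []  # no spread in the data, so this score is undefined
    # 0.6745 scales the MAD so the score is comparable to a standard z-score.
    return [v for v in values if 0.6745 * abs(v - median) / mad > threshold]

daily_transactions = [102, 98, 101, 99, 100, 97, 103, 250]  # hypothetical counts
print(find_anomalies(daily_transactions))  # [250]
```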

5. Data Visualization:

Big Data analysts can create graphic visualizations of their analysis using tools such as Tableau, Power BI, and QlikView. Analyzing data can be complex, especially when dealing with large datasets. Data visualization comes to the rescue here. It involves presenting the analyzed data in a visual format, such as charts, graphs, or maps. Visualizing data makes it easier for decision-makers to grasp the information quickly and make well-informed choices.

To sum it up, Big Data Analytics works by collecting vast amounts of data, storing them in specialized repositories, processing the data to make it analysis-ready, analyzing the data using statistical and machine learning techniques, and finally, presenting the results visually for better understanding.

By following these stages, organizations can unlock the potential of their data, enabling them to make data-driven decisions, enhance customer experiences, optimize processes, and gain a competitive edge in today's data-driven world.

Key types of Big Data Analytics

Big data analytics come in four primary categories and are used to assist and inform various business choices.

1. Descriptive Analytics:

Descriptive analytics presents historical data in an accessible format. This makes it easier to create reports on a company's revenue, profits, sales, and other metrics. It also helps compile social media statistics.

The Dow Chemical Company analyzed historical data to optimize the use of its lab and office space. Using descriptive analytics, Dow identified underused space, and consolidating that space saved the company around $4 million USD a year.
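At its simplest, descriptive analytics means summarizing historical records. This Python sketch uses hypothetical sales data to total revenue by month. It is the kind of aggregation that sits behind a monthly sales report. At big data scale the same group-and-sum would run in a distributed engine, but the logic is the same.

```python
from collections import defaultdict

# Hypothetical historical sales records: (month, region, revenue)
sales = [
    ("2024-01", "North", 1200.0),
    ("2024-01", "South", 800.0),
    ("2024-02", "North", 1500.0),
    ("2024-02", "South", 700.0),
]

# Group by month and sum revenue (the region is ignored for this report).
revenue_by_month = defaultdict(float)
for month, _region, revenue in sales:
    revenue_by_month[month] += revenue

for month in sorted(revenue_by_month):
    print(month, revenue_by_month[month])
# 2024-01 2000.0
# 2024-02 2200.0
```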

2. Predictive Analytics:

The goal of predictive analytics is to create predictions using both historical and current data. Data mining, artificial intelligence (AI), and machine learning enable users to evaluate the information to forecast market trends.

Companies in the manufacturing industry can forecast whether or when a piece of equipment will malfunction or break down using algorithms based on historical data.
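As a minimal illustration of this idea, the Python sketch below fits a straight-line trend to hypothetical daily vibration readings from a machine. It then extrapolates the trend to estimate the day it will reach an assumed failure threshold. Real predictive-maintenance models are usually far richer (many sensors, machine learning models, uncertainty estimates). The readings, the 4.0 threshold, and the linear model are all assumptions for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict_failure_day(days, readings, failure_level):
    """Extrapolate the linear trend to the day it reaches failure_level (None if not rising)."""
    slope, intercept = fit_line(days, readings)
    if slope <= 0:
        return None  # readings are flat or improving: no failure predicted by this trend
    return (failure_level - intercept) / slope

days = [0, 1, 2, 3, 4, 5]
vibration = [2.0, 2.2, 2.4, 2.6, 2.8, 3.0]  # hypothetical readings, rising 0.2 per day
FAILURE_LEVEL = 4.0                          # hypothetical failure threshold

failure_day = predict_failure_day(days, vibration, FAILURE_LEVEL)  # about day 10
```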

3. Prescriptive Analytics:

Prescriptive analytics goes a step further and recommends a course of action for a problem. It combines AI and machine learning with rules and recommendations to prescribe a specific analytical path for an organization, for example in risk management.

Utility firms, gas producers, and pipeline owners within the energy industry evaluate factors that impact the price of oil and gas in order to mitigate risks.
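The combination of rules and optimization can be sketched in a few lines. The Python example below evaluates three hypothetical purchasing actions against hypothetical oil-price scenarios. It applies a rule that rejects any action whose worst-case cost exceeds a risk limit. Among the remaining actions, it recommends the one with the lowest expected cost. Every number, action name, and the cost model itself are illustrative assumptions, not how any particular energy company decides.

```python
SCENARIOS = [(0.3, -10.0), (0.5, 0.0), (0.2, 15.0)]  # hypothetical (probability, price change per barrel)
BARRELS = 1_000
MAX_LOSS = 5_000.0  # hypothetical risk limit on the worst-case cost

def cost(action, price_change):
    """Hypothetical cost of each action under a given price change."""
    if action == "buy_now":
        return 1_500.0  # storage cost; price is locked in today
    if action == "hedge":
        return 2_000.0  # contract premium; the price move is offset
    if action == "wait":
        return BARRELS * price_change  # fully exposed to the price move
    raise ValueError(f"unknown action: {action}")

def recommend(actions):
    """Rule first (risk limit), then optimize (lowest expected cost)."""
    best = None
    for action in actions:
        worst_case = max(cost(action, change) for _, change in SCENARIOS)
        if worst_case > MAX_LOSS:
            continue  # rule: reject actions that can break the risk limit
        expected = sum(p * cost(action, change) for p, change in SCENARIOS)
        if best is None or expected < best[1]:
            best = (action, expected)
    return best

action, expected_cost = recommend(["buy_now", "hedge", "wait"])
```

Here "wait" has the lowest expected cost, but its worst case (a $15 rise per barrel) breaks the risk limit. The recommendation is therefore "buy_now", which shows how prescriptive rules can override a purely average-based choice.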

4. Diagnostic Analytics:

Diagnostic analytics is used to understand the root cause of a problem. Techniques include drill-down, data mining, and data recovery. Organizations use diagnostic analytics because it provides a thorough understanding of a specific issue.

Despite users adding items to their baskets, an e-commerce company's data reveals a decline in revenue. This could be because there aren't enough payment alternatives, the shipping cost is too high, or the form didn't load properly, among other things. Here, diagnostic analytics can be used to identify the cause.
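A drill-down for this scenario might break checkout sessions down by a single factor and compare abandonment rates. The Python sketch below does this for payment method using hypothetical session data. A noticeably higher rate in one segment points to where to investigate next. The data and the choice of payment method as the dimension are assumptions. In practice analysts would repeat the drill-down across shipping cost, device, page-load errors, and so on.

```python
from collections import Counter

# Hypothetical checkout sessions: (payment method chosen, order completed?)
sessions = [
    ("card", True), ("card", True), ("card", False), ("card", True),
    ("wallet", False), ("wallet", False), ("wallet", True), ("wallet", False),
]

started = Counter(method for method, _ in sessions)
completed = Counter(method for method, done in sessions if done)

# Abandonment rate per segment = share of started checkouts that were not completed.
abandonment = {method: 1 - completed[method] / started[method] for method in started}
worst = max(abandonment, key=abandonment.get)  # segment with the highest abandonment
```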

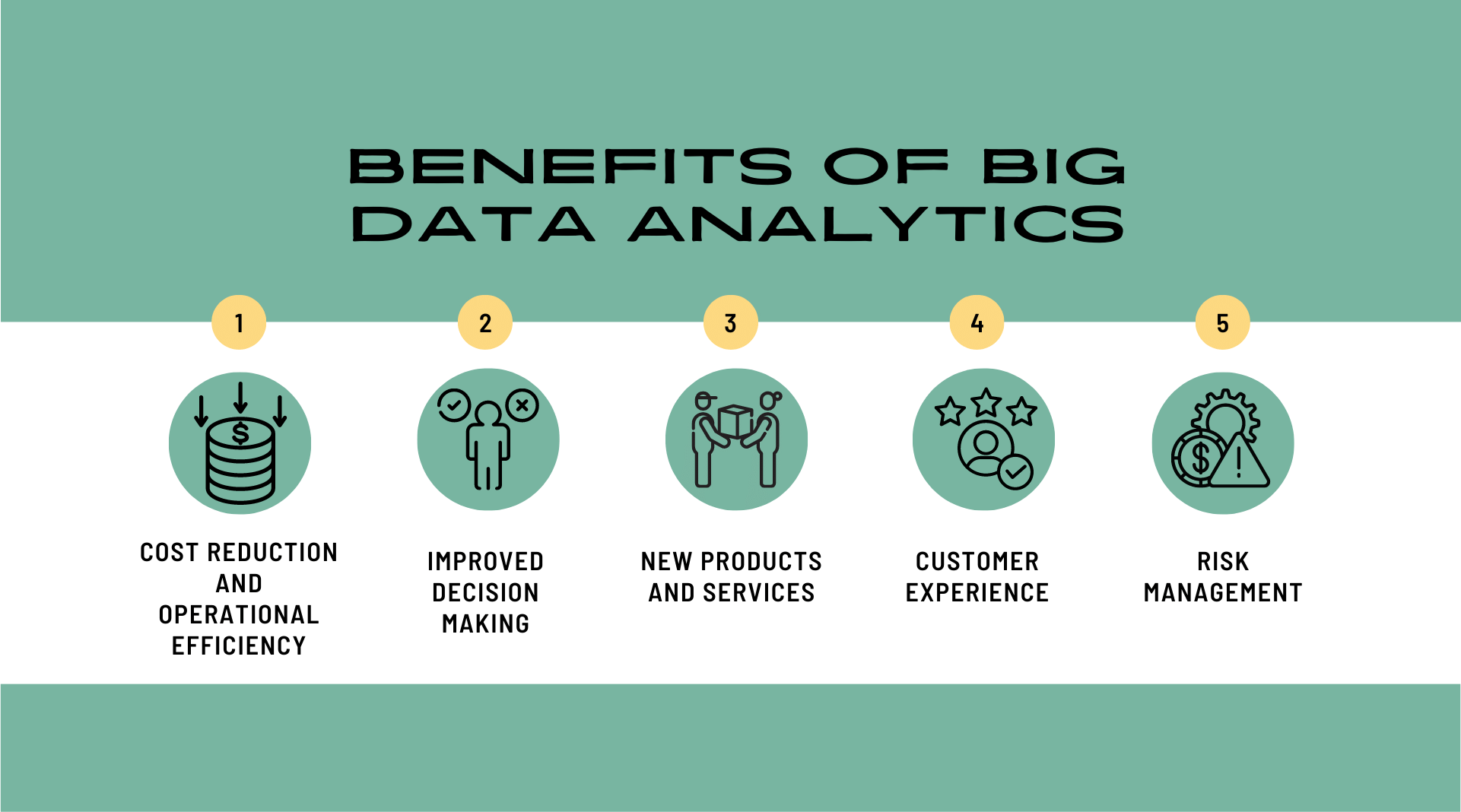

Benefits & Advantages of Big Data Analytics

Cost reduction and operational efficiency: Flexible data processing and storage systems let organizations store and analyze massive amounts of data at lower cost. They also help uncover trends and insights for running the business more effectively. Companies can cut costs by using Apache Hadoop, Spark, and Hive, along with cloud computing and storage platforms such as Amazon Web Services (AWS) and Microsoft Azure, to store and process vast amounts of data.

Improved decision making: Thanks to the speed of Spark and in-memory analytics, businesses can quickly evaluate new data sources and generate the fast, actionable insights needed to make decisions in real time. They can access large volumes of data from a wide range of sources and analyze it for fresh insights. They can also start small and scale up as needed to handle data from both historical records and current sources.

New products and services: Companies may more accurately analyze client needs with the aid of big data analytics tools, making it simpler to provide customers with the goods and services they desire. Based on data gathered from client needs and wants, creating and marketing new products, services, or brands is considerably simpler. Additionally, big data analytics aids in understanding product viability and trend monitoring for enterprises.

Customer experience: Data-driven algorithms play a pivotal role in enhancing customer experiences. By analyzing vast amounts of customer data, businesses can gain valuable insights into customer preferences, behaviors, and buying patterns. This information allows them to create targeted marketing efforts, such as personalized ads and recommendations, which resonate with individual customers.

Risk management: Big Data Analytics empowers businesses to identify potential risks and vulnerabilities within their operations. By analyzing data patterns, companies can detect early warning signs of potential disruptions, financial fraud, or cyber threats. Armed with this information, they can proactively develop risk mitigation strategies to safeguard their assets, reputation, and stakeholders' interests.

Tools and Technology used in big data analytics

The field of big data analytics is too broad to be confined to a single tool or technology. Instead, a variety of tools are combined to assist with the collection, processing, cleaning, and analysis of big data. The following list includes some of the key participants in big data ecosystems.

Hadoop:

Hadoop is a widely used open-source framework that enables the distributed processing of large and complex data sets. It is designed to handle massive volumes of data by dividing it into smaller chunks and distributing the processing across multiple nodes in a cluster. The key components of Hadoop are the Hadoop Distributed File System (HDFS) for data storage and the MapReduce programming model for data processing.

Spark:

Spark is another essential tool in the Big Data Analytics ecosystem. It is a fast, general-purpose data processing engine that significantly improves analytics performance. Spark is designed to keep data in memory, which reduces the need to read and write data from and to disk. This in-memory processing makes it well suited to near-real-time analysis and iterative algorithms, such as those used in machine learning and large-scale data processing.

Tableau:

Tableau is an end-to-end data analytics platform that lets you prepare, analyze, collaborate on, and share your big data findings. Tableau excels at self-service visual analysis, enabling users to explore governed big data and quickly share their findings with others in the company.

MapReduce:

MapReduce is a core component of the Hadoop framework and works in two steps. The map step processes input data in parallel on different cluster nodes, turning it into intermediate key-value pairs. The reduce step groups and condenses those results by key to produce the answer to a query.
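The classic illustration of MapReduce is counting words. The single-process Python sketch below imitates the map, shuffle, and reduce phases so that the data flow is visible. It is not Hadoop's API. In a real cluster each input split would be mapped on a different node, and the framework would perform the shuffle (grouping by key) across the network.

```python
from collections import defaultdict
from itertools import chain

def map_phase(split):
    """Map: turn one input split into (word, 1) pairs."""
    return [(word.lower(), 1) for word in split.split()]

def shuffle(pairs):
    """Shuffle: group intermediate values by key (done by the framework in Hadoop)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: condense all values for one key into a single result."""
    return key, sum(values)

splits = ["big data big insights", "data drives decisions"]  # each split would live on a different node
mapped = chain.from_iterable(map_phase(s) for s in splits)
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(counts)
```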

NoSQL databases:

Non-relational or non-tabular databases, commonly called NoSQL databases, use a variety of data models to access and manage data. The term "NoSQL" is read as either "non-SQL" or "not only SQL". Conventional relational databases organize all data into tables and rely on SQL (Structured Query Language). NoSQL databases, by contrast, store data in other ways. Depending on the database, data may be stored as columns, documents, key-value pairs, or graphs. Their flexible schemas and excellent scalability make NoSQL databases well suited to large amounts of unstructured, raw data and heavy user loads.

Machine Learning Algorithms:

Machine learning algorithms are an integral part of Big Data Analytics, enabling predictive modeling and pattern recognition. These algorithms allow systems to learn from data patterns and make data-driven predictions without being explicitly programmed. Various machine learning techniques, such as supervised learning, unsupervised learning, and reinforcement learning, are used in Big Data Analytics to analyze data and make informed decisions.
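As one concrete example of unsupervised learning, the Python sketch below implements a simple one-dimensional k-means. It groups hypothetical customers by monthly spend without being told any labels. The data, the starting centroids, and the choice of k = 2 are assumptions. Production work would typically use a library implementation and many features rather than one.

```python
def kmeans_1d(values, centroids, max_iterations=10):
    """Simple k-means on one feature: assign to the nearest centroid, recompute means, repeat."""
    centroids = list(centroids)
    for _ in range(max_iterations):
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Move each centroid to the mean of its cluster (keep it in place if the cluster is empty).
        updated = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if updated == centroids:
            break  # converged: centroids no longer change
        centroids = updated
    return centroids, clusters

monthly_spend = [20, 25, 22, 30, 200, 210, 190]  # hypothetical spend per customer
centroids, clusters = kmeans_1d(monthly_spend, [0.0, 100.0])
# centroids -> [24.25, 200.0]: a low-spend group and a high-spend group
```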

Data Mining:

Data mining is a process that involves extracting valuable information and insights from large datasets. It encompasses various techniques, including statistical analysis, pattern recognition, and machine learning. Data mining helps uncover hidden patterns, relationships, and trends within the data, enabling businesses to make better-informed decisions.
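A familiar data mining task is finding items that are often bought together. The Python sketch below is a simplified version of association-rule mining. It counts item pairs across hypothetical shopping baskets, keeps pairs whose support (share of baskets containing the pair) meets a threshold, and computes rule confidence. It only looks at pairs, so it is not a full Apriori implementation. The baskets and the 0.4 support threshold are assumptions.

```python
from collections import Counter
from itertools import combinations

baskets = [  # hypothetical transactions
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"beer", "chips"},
    {"bread", "butter"},
    {"milk", "butter", "bread"},
]

item_counts = Counter(item for basket in baskets for item in basket)
pair_counts = Counter(pair for basket in baskets for pair in combinations(sorted(basket), 2))

def confidence(antecedent, consequent):
    """Share of baskets containing the antecedent that also contain the consequent."""
    pair = tuple(sorted((antecedent, consequent)))
    return pair_counts[pair] / item_counts[antecedent]

MIN_SUPPORT = 0.4
frequent_pairs = {
    pair: count / len(baskets)
    for pair, count in pair_counts.items()
    if count / len(baskets) >= MIN_SUPPORT
}
```

With this data, every basket that has milk also has bread (confidence 1.0), while only 3 of the 4 bread baskets contain milk (confidence 0.75). The rule "milk → bread" is therefore stronger than its reverse.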

Talend:

Talend is an open-source data integration and data management platform that offers assisted, self-service data preparation. It is one of the most user-friendly data integration platforms with an emphasis on big data.

Apache Kafka:

Apache Kafka is a scalable, open-source, fault-tolerant event streaming platform used to gather big data from various sources. It was originally developed at LinkedIn, is now maintained by the Apache Software Foundation, and is designed to process data quickly, in real time. Kafka's event-driven architecture lets systems react to events as they happen, so they do not need to poll for new data periodically. This makes the complexity of Big Data more manageable.

Predictive Analytics Hardware and Software:

Predictive analytics is a powerful application of Big Data Analytics that involves using machine learning and algorithms to analyze large amounts of complex data and predict future outcomes. This technology is utilized across various industries to gain valuable insights and make data-driven decisions. One prominent example of predictive analytics in action is fraud detection in the financial sector. Banks and credit card companies analyze vast amounts of transaction data in real-time using sophisticated predictive models.

Stream Analytics Tools:

Stream analytics tools are essential for processing real-time data streams from diverse sources, especially when data is stored in various formats or platforms. These tools enable businesses to filter, aggregate, and analyze the data as it flows in, allowing for immediate insights and responses. One example of stream analytics in action is social media monitoring. Companies can track mentions, hashtags, and sentiment in real-time, helping them understand how customers perceive their brand and react promptly to customer feedback or crises.
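A basic building block of stream analytics is the tumbling window: events are grouped into fixed, non-overlapping time intervals and aggregated per interval. The Python generator below counts hashtags in 60-second windows and emits each window's counts as soon as the next window begins. This is how a brand-monitoring dashboard might spot a spike in mentions. It assumes events arrive in timestamp order. The events and window size are hypothetical, and dedicated stream processors handle late or out-of-order events that this sketch ignores.

```python
from collections import Counter

def tumbling_window_counts(events, window_seconds=60):
    """Count hashtags per fixed window, emitting each window when the next one starts."""
    current_start, counts = None, Counter()
    for ts, tag in events:  # events assumed to arrive in timestamp order
        start = ts - ts % window_seconds
        if current_start is not None and start != current_start:
            yield current_start, counts  # window closed: emit its result
            counts = Counter()
        current_start = start
        counts[tag.lower()] += 1
    if current_start is not None:
        yield current_start, counts  # flush the last, still-open window

events = [(0, "#BrandX"), (12, "#outage"), (30, "#brandx"), (61, "#outage"), (75, "#outage"), (90, "#BrandX")]
results = list(tumbling_window_counts(events))
```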

Real-life Big Data Analytics Examples

Big Data Analytics has proven to be transformative in various industries, providing valuable insights and driving innovation. Let's explore some specific real-life examples of how Big Data Analytics is currently being used:

E-commerce:

In the world of online retail, Big Data Analytics plays a pivotal role. E-commerce giants like Amazon use data analytics to analyze customer behavior, purchase history, and browsing patterns. By understanding individual preferences, they can offer personalized product recommendations, optimize pricing strategies, and even forecast demand for various products. This level of personalization enhances the customer experience, increases sales, and fosters customer loyalty.

Healthcare:

Healthcare organizations leverage Big Data Analytics to improve patient care and outcomes. Electronic Health Records (EHRs) are analyzed to identify patterns and correlations in patient data, leading to better diagnoses and treatment plans. Moreover, data analytics aids in predicting disease outbreaks and monitoring public health trends. For instance, during the COVID-19 pandemic, data analytics played a crucial role in tracking infection rates, hospital capacities, and vaccine distribution.

Supply Chain Management:

Supply chain management heavily relies on Big Data Analytics to optimize operations. Companies use data analytics to track inventory levels, monitor logistics, and identify potential bottlenecks in the supply chain. By analyzing real-time data, businesses can make data-driven decisions to improve efficiency, reduce costs, and ensure timely delivery of products.

Financial Services:

The financial sector benefits immensely from Big Data Analytics. Banks and financial institutions use data analytics to detect fraudulent activities, such as credit card fraud and identity theft. Advanced analytics tools can identify abnormal transaction patterns, flagging suspicious activities for investigation. Additionally, data analytics helps assess risks in investments and lending, enabling institutions to make informed decisions. It also enables personalized customer service by analyzing customer data to understand their financial needs better.

Retail:

Retailers utilize Big Data Analytics to gain insights into customer behavior and shopping preferences. Loyalty programs and online shopping data provide valuable information about customers' purchasing habits. With this data, retailers can offer personalized promotions, discounts, and targeted advertising. Furthermore, data analytics helps optimize inventory management, ensuring the right products are available at the right time, reducing stockouts and excess inventory.

Energy:

In the energy sector, Big Data Analytics aids in exploring and extracting natural resources. Oil and gas companies analyze vast amounts of geospatial data, seismic data, and geological data to locate potential oil reserves accurately. This significantly enhances the efficiency of resource exploration and reduces the cost and environmental impact of drilling operations.

Big data analytics challenges

Big data offers enormous advantages, but it also presents enormous difficulties, including new privacy and security worries, user accessibility for business users, and selecting the best solutions for your company's requirements. Organizations must deal with the following issues in order to benefit from incoming data:

• Accessing huge volumes of data: As data volume increases, collecting and analyzing it becomes more challenging. Organizations must make data accessible and useful for users of all skill levels.

• Maintaining high-quality data: Organizations are spending more time than ever before looking for duplicates, errors, absences, conflicts, and inconsistencies because there is so much data to handle.

• Data security: The complexity of big data systems creates special security challenges, and it can be difficult to manage security properly across a complex big data ecosystem.

• Choosing the appropriate tools: Big data analytics platforms and tools come in a dizzying assortment, so it can be challenging for businesses to choose the one that best suits their users' requirements and infrastructure.

• Skill gap between supply and demand: Businesses are struggling to fulfill the demand for trained big data analytics specialists due to a dearth of data analytics capabilities as well as the high cost of recruiting experienced employees.

Future Trends in Big Data Analytics

As technology continues to progress, Big Data Analytics is set to experience significant transformations, shaping the way businesses analyze and leverage data. Some of the key future trends in Big Data Analytics include:

1. Edge Computing: Edge computing is an emerging trend that involves processing data closer to the source or the edge of the network, rather than sending all data to a centralized cloud or data center. This approach reduces latency and bandwidth usage, as only relevant data is transmitted, making it ideal for real-time data analysis.

2. AI and Machine Learning Integration: The integration of artificial intelligence (AI) and machine learning (ML) into Big Data Analytics is a game-changer. AI-driven automation enhances data analysis processes, making them faster and more accurate. AI algorithms can autonomously identify patterns, correlations, and anomalies within large datasets, enabling organizations to extract meaningful insights and predictions.

3. Predictive and Prescriptive Analytics: Traditionally, Big Data Analytics has focused on descriptive analytics, which involves analyzing historical data to understand past events and trends. However, the future of Big Data Analytics lies in predictive and prescriptive analytics. Predictive analytics employs advanced algorithms to forecast future trends, behavior, and outcomes based on historical data patterns. Prescriptive analytics, on the other hand, takes predictive analysis a step further by suggesting optimal actions to achieve desired outcomes.

Ethical Considerations in Big Data Analytics

In the era of Big Data Analytics, organizations have access to vast amounts of data, enabling them to gain valuable insights and make data-driven decisions. That access also brings ethical responsibilities. Here are some essential ethical considerations in Big Data Analytics:

1. Data Privacy: Data privacy is a fundamental ethical concern in Big Data Analytics. As organizations collect and analyze data, they must respect the privacy rights of individuals whose data is being used. This includes ensuring that data is collected and used with the explicit consent of the individuals involved.
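One common technique for protecting individuals' privacy during analysis is pseudonymization: replacing direct identifiers with keyed hashes, so analysts can still link records that belong to the same person without seeing who that person is. The sketch below uses HMAC-SHA256 from Python's standard library; the key, the records, and the 16-character truncation are illustrative assumptions.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would come from a secrets manager,
# never from source code, and be stored separately from the data.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(identifier: str) -> str:
    """Map a direct identifier (e.g. an email) to a stable, keyed pseudonym."""
    normalized = identifier.strip().lower()  # treat case/whitespace variants as one person
    digest = hmac.new(PSEUDONYM_KEY, normalized.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


records = [
    {"email": "alice@example.com", "purchase": 42.50},
    {"email": "Alice@Example.com ", "purchase": 17.25},
    {"email": "bob@example.com", "purchase": 8.99},
]

# Replace the raw email with a pseudonym before the data reaches analysts
safe_records = [{"user": pseudonymize(r["email"]), "purchase": r["purchase"]} for r in records]
for row in safe_records:
    print(row)
```

Pseudonymization complements consent rather than replacing it, and pseudonymized data can still count as personal data under regulations such as the GDPR, because whoever holds the key can re-identify it.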

2. Bias and Fairness: Bias in data collection and analysis can lead to unfair treatment and discriminatory outcomes. It is essential for organizations to be vigilant and mitigate biases that may exist in the data or the algorithms used for analysis. Biases can occur at various stages, from data collection, where certain groups may be underrepresented, to algorithm design, which might inadvertently perpetuate biases.
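A simple first check for bias in outcomes is to compare the rate of favorable decisions across groups. The sketch below computes per-group approval rates and the ratio of the lowest rate to the highest for hypothetical loan decisions. The data and group labels are made up, and this single metric is only one of several fairness measures.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody approved in any group: rates are equal
    return min(rates.values()) / highest


# Hypothetical loan decisions tagged with an applicant group
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 35 + [("B", False)] * 65
)
rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 3))
```

A commonly cited rule of thumb, the "four-fifths rule" from US employment guidelines, treats a ratio below 0.8 as a sign of potential adverse impact worth investigating; here the ratio is about 0.58.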

3. Transparency: Transparency is a key aspect of ethical Big Data Analytics. Organizations must be open and transparent about their data usage practices and data-driven decisions. This includes providing clear explanations for the basis of any decisions made using data analysis. Transparency builds trust with customers and stakeholders, as they can better understand how their data is being used and the implications of data-driven decisions on their lives.

Tips for Successful Big Data Analytics Projects

To ensure successful Big Data Analytics projects, consider the following tips:

Clearly Define Objectives: Identify the goals you want to achieve through data analysis.

Invest in Infrastructure: Build a robust and scalable data infrastructure.

Data Quality Assurance: Ensure data accuracy, completeness, and reliability.

Continuous Learning: Stay updated with the latest trends and technologies in Big Data Analytics.
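Data quality assurance can start with automated checks that run before any analysis. This minimal sketch assumes hypothetical records with `id`, `email`, and `amount` fields and counts missing values, malformed emails (using a deliberately simple pattern), negative amounts, and duplicate IDs.

```python
import re

EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple check


def quality_report(rows, required=("id", "email", "amount")):
    """Count missing fields, invalid values, and duplicate ids in a list of dicts."""
    report = {"rows": len(rows), "missing": 0, "invalid_email": 0,
              "negative_amount": 0, "duplicate_id": 0}
    seen_ids = set()
    for row in rows:
        if any(row.get(col) in (None, "") for col in required):
            report["missing"] += 1
            continue  # skip further checks on incomplete rows
        if not EMAIL_PATTERN.match(row["email"]):
            report["invalid_email"] += 1
        if row["amount"] < 0:
            report["negative_amount"] += 1
        if row["id"] in seen_ids:
            report["duplicate_id"] += 1
        seen_ids.add(row["id"])
    return report


# Hypothetical input containing one problem of each kind
rows = [
    {"id": 1, "email": "a@example.com", "amount": 10.0},
    {"id": 2, "email": "not-an-email", "amount": 5.0},
    {"id": 2, "email": "b@example.com", "amount": -3.0},
    {"id": 3, "email": "", "amount": 7.0},
]
print(quality_report(rows))
```

In a real pipeline these counts could be tracked over time, with a sudden jump in any of them pausing the load or alerting the data owners.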

Conclusion

Big Data Analytics is a transformative force that continues to shape the future of business. By embracing it, organizations can unlock the value of their data, drive innovation, and make strategic decisions that help them succeed in a dynamic and competitive digital landscape. As the technology evolves, new possibilities will keep emerging, and organizations that seize the opportunities Big Data Analytics presents will be well positioned for the times ahead.

Good Luck & Happy Learning!!

Frequently Asked Questions (FAQs)

What are the 5 V's of big data?

The 5 V's of big data (velocity, volume, value, variety, and veracity) are the characteristics that define big data. Understanding them helps data scientists and organizations extract maximum value from their data and become more customer-centric.

1. Velocity

Velocity refers to the speed at which data is generated, collected, and processed. With the advent of the Internet of Things (IoT), social media, and other real-time data sources, data is now produced at an unprecedented rate. Organizations must handle and analyze this fast-paced data flow efficiently to make timely decisions and seize opportunities.
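Handling high-velocity data usually means computing results over a moving time window rather than over the whole dataset. The sketch below keeps a count of events seen in the last N seconds using a double-ended queue. The class name and timestamps are hypothetical, and stream-processing engines provide windowing like this built in.

```python
from collections import deque


class SlidingWindowCounter:
    """Count events seen in the last `window_seconds` of event time."""

    def __init__(self, window_seconds: float):
        self.window = window_seconds
        self.timestamps = deque()

    def add(self, ts: float) -> int:
        """Record an event at time ts (non-decreasing) and return the current window count."""
        self.timestamps.append(ts)
        # Evict events that have fallen out of the window (ts - window, ts]
        while self.timestamps and self.timestamps[0] <= ts - self.window:
            self.timestamps.popleft()
        return len(self.timestamps)


counter = SlidingWindowCounter(window_seconds=10)
for ts in [0, 2, 4, 9, 11, 15, 30]:  # hypothetical event timestamps in seconds
    print(ts, counter.add(ts))
```

Each event is appended once and evicted at most once, so the work per event stays small (amortized constant time) no matter how long the stream runs.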

2. Volume

Volume pertains to the sheer scale of data generated and stored. Big data involves massive amounts of data that exceed traditional data management systems' capabilities. Handling such large volumes requires specialized tools and technologies, like Hadoop and data warehouses, for effective storage and analysis.

3. Value

Value signifies the significance of the data to the organization. Extracting valuable insights from big data is crucial to making informed decisions and gaining a competitive advantage. By identifying patterns and trends, organizations can derive actionable intelligence that enhances business operations and customer experiences.

4. Variety

Variety refers to the diverse types and formats of data sources. Big data encompasses structured, semi-structured, and unstructured data, including text, images, videos, and sensor data. Managing and integrating such diverse data sources pose challenges but also open up new opportunities for analysis and innovation.

5. Veracity

Veracity relates to the reliability and accuracy of the data. Big data can often be messy and contain inconsistencies, errors, or biases. Ensuring data quality and establishing data credibility are crucial steps in making sound data-driven decisions and building trust in data analysis outcomes.

By understanding and harnessing the 5 V's of big data, data scientists and organizations can unlock the full potential of their data, optimize decision-making, and achieve a customer-centric approach that drives business success.

What is big data analytics, and what are some examples?

Big data analytics refers to the process of extracting valuable insights and patterns from massive and diverse datasets. It enables organizations to make data-driven decisions, derive meaningful conclusions, and uncover hidden trends that would be difficult to discern through traditional data analysis methods. Here are some common examples of big data analytics:

- Real-time Analytics: Utilizing big data analytics, businesses can process and analyze data in real time, allowing them to respond swiftly to emerging trends and make immediate decisions to optimize operations and customer experiences.

- Predictive Analytics: Big data analytics can be used to develop predictive models that forecast future trends, customer behavior, and market shifts. This empowers organizations to proactively strategize and anticipate changing demands.

- Social Media Analytics: Analyzing vast amounts of social media data helps businesses understand customer sentiment, preferences, and feedback, enabling them to tailor marketing campaigns and improve brand reputation.

- Recommendation Systems: Big data analytics drives recommendation engines that suggest products, services, or content to users based on their past behavior and preferences, enhancing customer engagement and retention.
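A very simple form of recommendation engine is item co-occurrence: suggest items that other customers frequently bought together with what this user already owns. The sketch below assumes hypothetical purchase baskets. Real systems typically use more sophisticated techniques, such as matrix factorization or learned embeddings, which this sketch does not implement.

```python
from collections import Counter, defaultdict
from itertools import combinations


def build_cooccurrence(baskets):
    """Count how often each pair of items appears in the same basket."""
    co = defaultdict(Counter)
    for basket in baskets:
        for a, b in combinations(sorted(set(basket)), 2):
            co[a][b] += 1
            co[b][a] += 1
    return co


def recommend(co, owned, k=2):
    """Score unseen items by how often they co-occur with items the user already has."""
    scores = Counter()
    for item in owned:
        for other, count in co[item].items():
            if other not in owned:
                scores[other] += count
    # Sort by score (descending), then by name, so ties are deterministic
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:k]]


baskets = [  # hypothetical purchase histories
    ["laptop", "mouse", "bag"],
    ["laptop", "mouse"],
    ["laptop", "monitor"],
    ["mouse", "mousepad"],
]
co = build_cooccurrence(baskets)
print(recommend(co, owned={"laptop"}))
```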

Big data itself comes from diverse sources that generate vast volumes of data. Common examples of big data sources include:

- Transaction Processing Systems: These systems record large-scale transactional data from various business operations, providing valuable insights into sales, inventory, and financial performance.

- Customer Databases: Collecting and analyzing customer data enables businesses to personalize offerings, improve customer service, and strengthen customer loyalty.

- Internet Clickstream Logs: These logs capture user interactions with websites, helping organizations understand user behavior, preferences, and website performance.

- Medical Records: Big data in the healthcare sector includes electronic health records and medical imaging data, enabling medical research, diagnostics, and personalized treatment plans.

- Mobile Apps: Mobile apps generate vast amounts of data on user interactions, helping app developers optimize user experiences and enhance app performance.

- Social Networks: Social media platforms generate immense data on user interactions, content sharing, and social trends, which can be harnessed for market research and social listening.

What is the difference between big data and big data analytics?

Big Data:

Big data refers to extremely large datasets that keep growing rapidly over time and exceed the capacity of traditional data processing systems. It encompasses structured, semi-structured, and unstructured data from various sources, such as social media, sensors, and transactions. Its core attributes are often summarized as the "Three Vs": Volume (large data quantities), Velocity (the speed at which data is generated and must be processed), and Variety (data from different sources and formats); value and veracity extend these to the 5 V's described above. Managing big data requires specialized infrastructure and technologies to store, process, and analyze these datasets effectively.

Big Data Analytics:

Big data analytics, on the other hand, is the process of extracting valuable insights and patterns from the large and complex datasets that constitute big data. It involves the application of various analytical techniques, statistical algorithms, and machine learning models to analyze and interpret the raw data. The primary objective of big data analytics is to derive meaningful conclusions, trends, and correlations from the data, which can aid in making data-driven decisions. The process includes data exploration, data cleansing, data transformation, and the development of predictive models. Big data analytics enables organizations to uncover hidden patterns, forecast trends, improve operational efficiency, optimize marketing strategies, enhance customer experiences, and gain a competitive edge in the marketplace.

What are the main types of big data analytics?

Big data analytics encompasses a diverse range of analytical approaches that help organizations make sense of massive and complex datasets. The main types of big data analytics are:

- Descriptive Analytics: Descriptive analytics involves summarizing and visualizing data to provide a clear and concise overview of past events and trends. It helps organizations understand what has happened, allowing them to assess performance and identify areas for improvement. Descriptive analytics provides valuable metrics and key performance indicators (KPIs) that aid in monitoring business operations and performance.

- Diagnostic Analytics: Diagnostic analytics examines historical data to understand why certain events or outcomes occurred. It involves identifying patterns, trends, and correlations within the data to explain past performance. By diagnosing the root causes of specific occurrences, organizations can make informed decisions to address them and prevent similar situations in the future.

- Predictive Analytics: Predictive analytics leverages historical data and statistical algorithms to forecast future trends and outcomes. By analyzing patterns and relationships within the data, predictive analytics enables organizations to anticipate customer behavior, market trends, and potential risks. This proactive approach empowers businesses to take preemptive action and stay ahead of the competition.

- Prescriptive Analytics: Prescriptive analytics goes beyond the descriptive, diagnostic, and predictive approaches by recommending actions and strategies to optimize future outcomes. Using advanced algorithms and simulations, it assesses multiple possible scenarios to identify the best course of action. This type of analytics helps organizations make data-driven decisions and devise strategies that maximize efficiency and success.
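Descriptive and diagnostic analytics can be illustrated with plain grouping and comparison. In this sketch, which assumes hypothetical quarterly revenue by region, the descriptive step totals revenue per quarter (what happened), and the diagnostic step breaks the change down by region to find what drove it (why it happened).

```python
from collections import defaultdict

# Hypothetical sales records: (quarter, region, revenue)
sales = [
    ("Q1", "North", 120), ("Q1", "South", 100), ("Q1", "West", 80),
    ("Q2", "North", 125), ("Q2", "South", 60), ("Q2", "West", 82),
]


def totals_by(records, key_index):
    """Sum revenue grouped by one column of the records."""
    totals = defaultdict(int)
    for rec in records:
        totals[rec[key_index]] += rec[2]
    return dict(totals)


# Descriptive: what happened? Total revenue per quarter
by_quarter = totals_by(sales, 0)

# Diagnostic: why did it happen? Change per region between the two quarters
q1 = totals_by([r for r in sales if r[0] == "Q1"], 1)
q2 = totals_by([r for r in sales if r[0] == "Q2"], 1)
change_by_region = {region: q2[region] - q1[region] for region in q1}
biggest_driver = min(change_by_region, key=change_by_region.get)  # largest decline

print(by_quarter)
print(change_by_region)
print(biggest_driver)
```

Here the total falls from Q1 to Q2, and the per-region breakdown points to the South region as the main cause, which would be the starting point for a deeper root-cause investigation.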

What are the types of big data?

Big data can be categorized into three main types based on the format and organization of the data:

- Structured Data: Structured data is stored in a fixed format with a predefined schema, which makes it easy to search and analyze. It is typically found in relational databases, organized into rows and columns. Examples include transactional data, customer information, sales records, and financial data. Its structured nature simplifies data processing and allows for efficient querying and analysis.

- Unstructured Data: Unstructured data lacks a predefined structure and organization. It exists in raw, non-standardized formats such as text files, images, videos, social media posts, and emails. It is challenging to analyze using traditional methods because of its complexity and lack of a predefined schema. However, it holds valuable information that can be extracted through advanced analytics techniques, such as natural language processing (NLP) and machine learning algorithms.

- Semi-Structured Data: Semi-structured data falls between structured and unstructured data. It has some level of organization, typically in the form of tags or metadata, but does not adhere to a rigid schema. Examples include XML files, JSON data, and log files. While it provides some organization, semi-structured data still requires specialized techniques to process and analyze effectively.
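The difference between the three types shows up in how each is accessed. This sketch uses only Python's standard library with hypothetical data: the structured orders are queried with SQL in an in-memory SQLite database, the semi-structured JSON events are parsed with tolerance for missing fields, and the unstructured review text is reduced to naive word counts, a crude stand-in for real NLP.

```python
import json
import sqlite3
from collections import Counter

# Structured: fixed schema, queried with SQL (in-memory SQLite for illustration)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
conn.executemany("INSERT INTO orders (customer, amount) VALUES (?, ?)",
                 [("alice", 30.0), ("bob", 12.5), ("alice", 20.0)])
structured_total = conn.execute(
    "SELECT SUM(amount) FROM orders WHERE customer = ?", ("alice",)).fetchone()[0]
conn.close()

# Semi-structured: JSON records share tags but not a rigid schema
events = [
    '{"user": "alice", "action": "click", "meta": {"page": "/home"}}',
    '{"user": "bob", "action": "purchase", "amount": 12.5}',
]
parsed = [json.loads(line) for line in events]
pages = [e.get("meta", {}).get("page") for e in parsed]  # missing keys become None

# Unstructured: free text needs extra processing (here, naive word counts)
review = "Fast delivery, great price. Delivery was fast!"
words = Counter(w.strip(".,!").lower() for w in review.split())

print(structured_total, pages, words.most_common(2))
```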

What is big data analytics used for?

Big data analytics plays a crucial role in modern organizations, enabling them to harness vast and diverse datasets to gain valuable insights and drive strategic decision-making. Some key applications of big data analytics are:

- Identifying Opportunities: Big data analytics helps organizations identify potential opportunities hidden within massive datasets. By analyzing customer behavior, market trends, and industry patterns, businesses can discover new avenues for growth, innovation, and market expansion.

- Understanding Customer Behavior: Analyzing customer data allows organizations to understand customer preferences, needs, and pain points. This knowledge enables businesses to personalize products and services, enhance customer experiences, and build stronger customer relationships.

- Optimizing Operations: Big data analytics aids in optimizing operational processes and workflows. By analyzing operational data, businesses can identify bottlenecks, inefficiencies, and areas for improvement, leading to enhanced productivity and cost reduction.

- Forecasting and Predictive Modeling: Big data analytics facilitates predictive modeling, enabling organizations to forecast future trends, demand patterns, and market shifts. This proactive approach empowers businesses to make data-driven decisions and stay ahead of the competition.

- Risk Management: Organizations can use big data analytics to assess and mitigate potential risks. Analyzing historical data and market trends helps in identifying potential risks and developing strategies to mitigate their impact.

- Improving Decision-Making: By providing data-driven insights, big data analytics empowers organizations to make informed and timely decisions. This leads to more accurate planning, resource allocation, and strategic initiatives.

- Enhancing Product Development: Analyzing customer feedback and product usage data assists in refining existing products and developing new offerings that align with customer needs and preferences.

What is the role of big data in AI?

Big data plays a crucial role in the field of Artificial Intelligence (AI), providing the vast and diverse datasets needed to train and improve AI models. Here are the main points regarding the significance of big data in AI:

Data-Driven Learning:

Big data serves as the fuel for AI algorithms and machine learning models. AI systems require large and diverse datasets to learn patterns, identify correlations, and make accurate predictions. In general, larger and more representative training datasets help models generalize better, provided the data is accurate and relevant.

Enhancing AI Performance:

With big data, AI models can keep improving over time. As models are retrained or updated on new data, they become better at recognizing patterns, adapting to changes, and delivering accurate results.

Real-Time Insights:

Big data platforms allow AI systems to process and analyze vast amounts of data in real time. This enables AI to provide instant insights and responses, which are crucial for applications in industries such as finance, healthcare, and cybersecurity.

Natural Language Processing (NLP):

For AI to understand and process human language effectively, it requires extensive language data. Big data supports NLP algorithms, enabling AI to comprehend, interpret, and respond to human speech and text accurately.

Computer Vision:

Computer vision algorithms in AI heavily rely on big data, particularly image and video datasets. The availability of large image datasets facilitates the training of AI models for tasks like object detection, facial recognition, and image segmentation.

Personalization and Recommendation Systems:

Big data empowers AI-driven personalization and recommendation systems, tailoring content, products, and services to individual user preferences. By analyzing vast user behavior data, AI can deliver personalized experiences, enhancing customer satisfaction and engagement.

What skills does big data analytics require?

Successful implementation of big data analytics demands a combination of technical expertise and soft skills. The key requirements are:

Programming Knowledge:

Proficiency in programming languages like Python, R, Java, or SQL is crucial for big data analysts. They use programming to manipulate and analyze large datasets, develop algorithms, and build predictive models.

Quantitative and Data Interpretation Skills:

Big data analysts must possess strong quantitative skills to analyze data and draw meaningful insights from complex datasets. They should be adept at handling statistical analyses and applying data interpretation techniques.

Technological Expertise:

Big data analysts must be well-versed in various technologies and tools related to data storage, processing, and analysis. Familiarity with technologies like Hadoop, Spark, and data visualization tools is essential for effective data analysis.

Communication Skills:

Effective communication, both oral and written, is vital for big data analysts. They need to communicate their findings and insights to stakeholders and team members in a clear and concise manner.

Data Mining and Auditing Skills:

Being skilled in data mining techniques allows big data analysts to extract valuable patterns and trends from large datasets. Additionally, they should possess auditing skills to ensure data accuracy and quality.

Problem-Solving and Analytical Thinking:

Big data analysts must have a strong problem-solving mindset and analytical thinking abilities to tackle complex data-related challenges and devise innovative solutions.

Is big data the same as SQL?

Big data and SQL databases are not the same; they serve different purposes and have distinct characteristics. Here are the main points of differentiation between the two:

Database Structure:

Standard SQL databases are efficient for managing and processing structured data. They use a table-based schema to organize and store data in a well-defined manner. Big data storage systems, on the other hand, are designed to handle vast and diverse datasets, including structured, semi-structured, and unstructured data. They offer flexible and scalable storage to accommodate the sheer volume and variety of the data.

Data Processing:

SQL databases are ideal for handling structured data and are optimized for processing queries against predefined schemas. They are best suited for transactional applications and for performing complex joins and aggregations on structured data. In contrast, big data platforms such as Hadoop and Spark distribute processing across clusters of machines, making them highly efficient for large-scale data processing and analysis tasks.
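The parallel-processing model behind Hadoop and Spark is often explained with a word-count example in the map-reduce style. In this sketch the data is pre-split into hypothetical partitions, each partition is counted independently (map), and the partial counts are merged (reduce). The map tasks run one after another here for simplicity; on a cluster, the framework would schedule each one on a different machine.

```python
from collections import Counter
from functools import reduce


def map_phase(partition):
    """Map: each worker counts words in its own slice of the data."""
    counts = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


def reduce_phase(left, right):
    """Reduce: merge partial counts from two workers."""
    return left + right


# Hypothetical input, pre-split into partitions as a cluster would store it
partitions = [
    ["big data needs big tools", "data moves fast"],
    ["spark processes data", "hadoop stores data"],
]

partial_counts = [map_phase(p) for p in partitions]  # independent, so parallelizable
word_counts = reduce(reduce_phase, partial_counts, Counter())
print(word_counts.most_common(3))
```

Because each map task only reads its own partition, adding machines lets more partitions be processed at once, which is the property these platforms exploit.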

Query Language:

SQL databases use Structured Query Language (SQL) to interact with and retrieve data from the database. SQL provides a standardized way to perform data manipulation, querying, and management. On the other hand, big data platforms may use various query languages and frameworks, depending on the technology used, such as HiveQL for Apache Hive or Spark SQL for Apache Spark.

Scalability:

Traditional SQL databases are typically scaled vertically, which means they handle increased workloads by adding more resources (CPU, memory, storage) to a single server. In contrast, big data platforms scale horizontally, distributing the data and processing across multiple nodes or servers to handle massive datasets in parallel.
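Horizontal scaling depends on spreading data across nodes in a predictable way. One common approach is hash partitioning, sketched below with hypothetical customer keys and four nodes: each key is hashed and assigned to a node by taking the hash modulo the node count. SHA-256 is used instead of Python's built-in `hash()`, which is randomized per process for strings and so would not give a stable placement.

```python
import hashlib
from collections import defaultdict


def node_for(key: str, num_nodes: int) -> int:
    """Pick a node by hashing the key with a stable hash function."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_nodes


def distribute(keys, num_nodes):
    """Group keys by the node they are assigned to."""
    placement = defaultdict(list)
    for key in keys:
        placement[node_for(key, num_nodes)].append(key)
    return dict(placement)


customer_ids = [f"customer-{i}" for i in range(1000)]  # hypothetical keys
placement = distribute(customer_ids, num_nodes=4)
for node in sorted(placement):
    print(f"node {node}: {len(placement[node])} keys")
```

A drawback of plain modulo placement is that changing the number of nodes moves most keys to a different node; techniques such as consistent hashing are used to limit that reshuffling.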

Data Variety:

SQL databases are designed to handle structured data with a predefined schema, limiting their ability to efficiently manage unstructured and semi-structured data. Big data platforms excel at ingesting, processing, and analyzing diverse data formats, including text, images, videos, and social media data.